Managing Embedded Devices with Cloud Orchestration

By 2025, 64% of organizations plan to operate in a multi-cloud environment (Nutanix, 2022), which will emphasize management through cloud orchestration. The Wind River solution, Wind River Studio Conductor, is a foundation for software-driven service delivery that helps control costs while enabling provisioning within minutes. This webinar will demonstrate how to control VxWorks target GPIOs from Studio Conductor using a Raspberry Pi 4 as the target board.

What Are Embedded Containers | Wind River

Learn how containerization technology is bridging the gap between embedded and enterprise platforms to meet emerging IoT requirements.

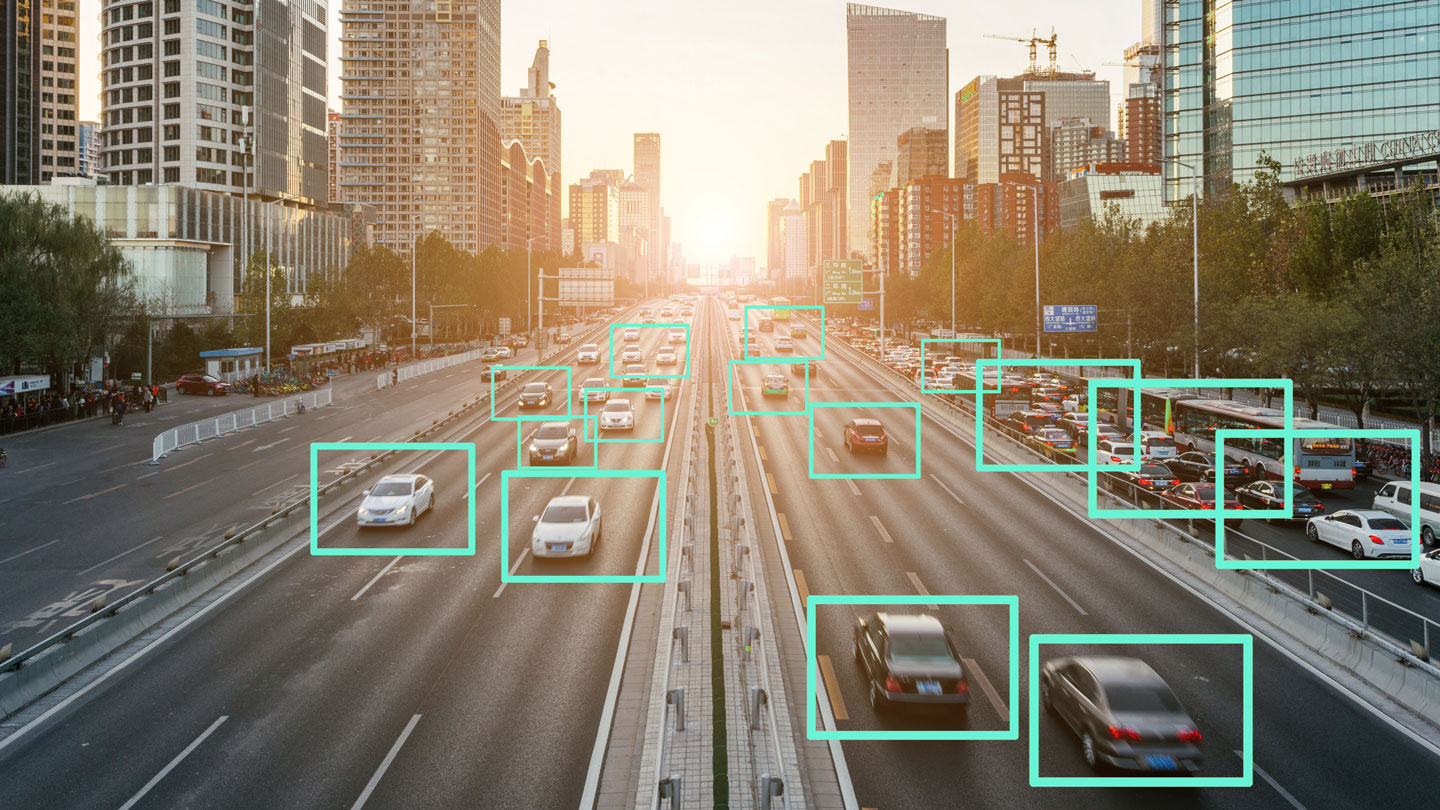

Container technology is fundamentally changing how systems are developed, tested, deployed, and managed. The main functions of embedded containers are to wrap up applications and services with all their dependencies and to isolate multiple software components running on the same hardware.

People are most familiar with containers as part of cloud-native architectures in which applications are decoupled from the infrastructure — including hardware and operating systems — on which they are running. The benefits of this approach include being able to automate the software pipeline to remove manual errors, standardize tools, and accelerate the rate of product iterations. With a CI/CD pipeline, companies can leverage continuous integration (CI), where code changes are merged in a central repository, with continuous delivery (CD), thereby enabling the automation of the entire software delivery process and the faster delivery of high-quality software.

Embedded developers can also benefit from the infrastructure-agnostic, scalable execution environment enabled by containers. Imagine a design process — from development to test to deployment to production to management — in which developers can share resources, pipelines, and results across the team. Instead of being limited by the number of development boards available, companies could exploit the elasticity of the cloud to set up multiple instances of a system on demand.

The Avionics Example

Avionics systems offer a good example of the trend toward containerization. They have evolved from fundamentally hardware-based solutions to agile, highly upgradable, software-defined infrastructures, enabling systems to incorporate new technologies on the fly, without substantial hardware replacements. Software container technology promises to be an effective means of countering cybersecurity threats through quick updates and patches, delivering benefits to both the commercial sector and the aerospace and defense sector.

Bridging Embedded and Cloud-Native Technologies

In spite of the benefits of containers, traditional embedded application development and deployment does differ from cloud-native architecture in significant ways:

- It is tightly coupled to specific hardware.

- It is written in lower-level languages such as C/C++.

- It interacts directly with hardware (e.g., peripherals).

- It requires specialized development and management tools.

- It tends to have a long lifecycle and stateful execution.

- It faces an increasing diversity of end hardware and software deployed in the field.

Bringing container technology to the embedded world requires embedded development to adopt a cloud-native-inspired workflow while still meeting the requirements of embedded applications, including real-time determinism, an optimized memory footprint, an integrated toolchain for cross-compiling and linking, tools for security scanning and quality assurance, and the ability to secure the build environment.

Containers for Embedded Linux

Although Linux containers have been widely deployed in data centers and IT environments, without easy-to-use pre-integrated platforms or meaningful engagement across the ecosystem, container use has been scarce in small-footprint and long-lifecycle edge embedded systems in the OT (operational technology) realm. Embedded devices such as those for industrial, medical, and automotive systems often require lightweight, reliable software with long lifecycles. Existing container technologies and platforms, like those in enterprise Linux, are often bloated or require updates too frequently to run effectively on these embedded systems.

Gartner predicts that up to 15% of enterprise applications will run in a container environment by 2024, up from less than 5% in 2020.

Initially developed at Wind River® and available on GitHub, the container technology in Wind River Linux, dubbed OverC, integrates components from the Cloud Native Computing Foundation (CNCF) and the Yocto Project to help define a comprehensive framework for building and deploying containers for embedded systems. This technology supports virtually any processor architecture and orchestration environment, removing the difficulties and lowering the barrier of entry for container usage in embedded software projects for a diverse range of applications, including industrial control systems, autonomous vehicles, medical devices and equipment, IoT gateways, radio access network (RAN) products, and a wide range of network appliances.

Containers for Real-Time Operating Systems

Container technology is complex and built for the cloud, often inhibiting usage in embedded systems, and particularly in mission-critical applications on the edge, where real-time operating systems (RTOSes) are often required.

Yet, as the complexity of applications and their supporting infrastructures create new potential attack vectors for increasingly sophisticated hackers to exploit, containers in embedded systems offer a means to deliver responsive, secure application delivery to the intelligent edge. With these capabilities, aerospace and defense organizations, energy providers, large-scale manufacturers, and medical organizations can take advantage of low-latency, high-bandwidth performance for the most challenging applications.

To leverage the promise of containers for mission-critical applications, Wind River recently launched OCI-compliant container support for VxWorks®, its market-leading RTOS, making it possible to harness the same cloud infrastructure, tooling, and workflow that developers have used in familiar IT environments. This technological advance for RTOS applications has ushered in a new era of small-footprint embedded solutions that are robust enough for critical edge-computing applications in a variety of industry sectors.

The Open Container Initiative (OCI)

To drive and unify advances in container technology, Docker, CoreOS, and other leaders in the container technology field established the Open Container Initiative (OCI) around two specifications: an image specification and a runtime specification. The image specification defines the format of an OCI image. The runtime specification covers how to run a file system bundle that has been unpacked on disk: an OCI implementation downloads an OCI image, unpacks it into an OCI runtime file system bundle, and hands that bundle to an OCI runtime to be run. VxWorks adheres fully to these OCI specifications. Tools and sample code are available through the OCI and GitHub repositories.
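
As a rough illustration of what the runtime specification means by a file system bundle, the sketch below lays one out by hand: a directory holding a `config.json` and a root file system directory. The helper name `make_bundle` and the `/bin/sh` entry point are placeholders chosen for this example, and the configuration is a generic Linux-style one rather than anything specific to VxWorks. A real bundle is normally produced by unpacking an OCI image.

```shell
# Sketch: lay out a minimal OCI runtime file system bundle by hand.
# make_bundle is a hypothetical helper; real bundles come from unpacking an image.
make_bundle() {
    bundle_dir=$1
    # The bundle is a directory containing config.json plus the root file system.
    mkdir -p "$bundle_dir/rootfs"
    # Minimal config.json: spec version, the process to start, and the rootfs path.
    cat > "$bundle_dir/config.json" <<'EOF'
{
  "ociVersion": "1.0.2",
  "process": {
    "user": { "uid": 0, "gid": 0 },
    "args": ["/bin/sh"],
    "cwd": "/"
  },
  "root": { "path": "rootfs" }
}
EOF
}

# Usage: create a throwaway bundle and list its contents.
demo_dir=$(mktemp -d)
make_bundle "$demo_dir/bundle"
ls "$demo_dir/bundle"
```

The `rootfs` directory is left empty here; in practice it would hold the unpacked layers of the image.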

VxWorks with OCI Compliance

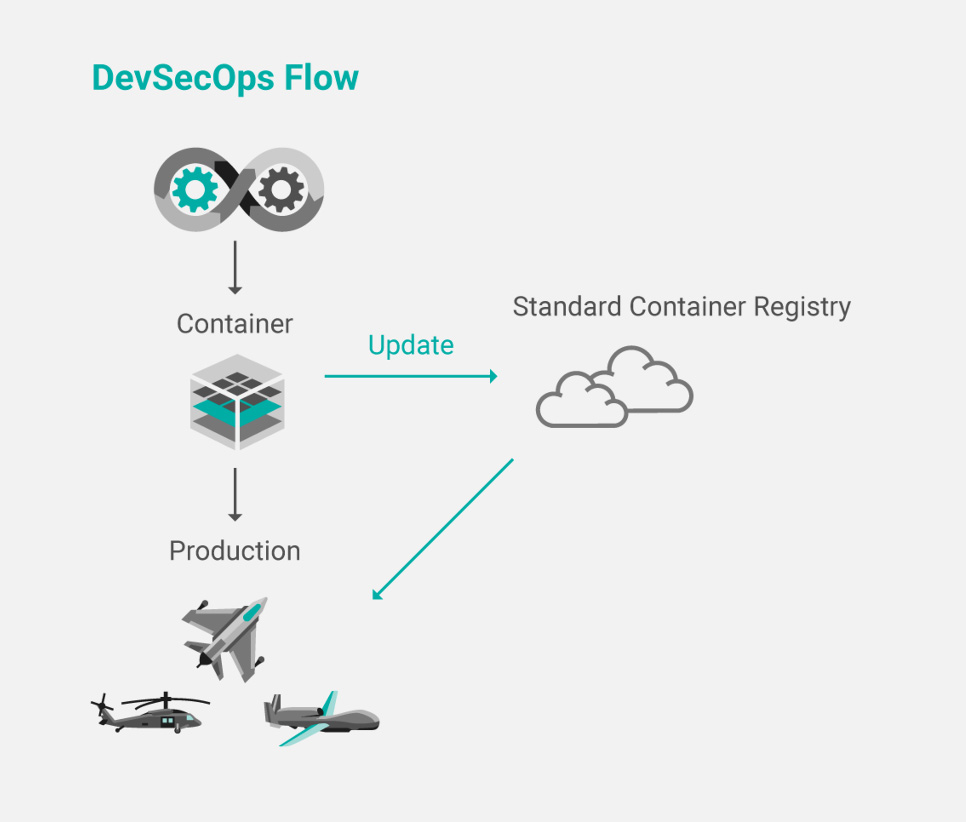

Figure 1 shows the flow of creating and maintaining VxWorks containers. In a typical DevSecOps environment involving VxWorks-based containers, application code is created using a best-practices security framework. The application is released as a container to a host that is the targeted endpoint, such as a VxWorks-based device, and to the container registry. Any updates or patches to the code can be pushed to the registry, stored, and then pulled from the registry as needed by the host system, whether an aircraft, a connected car, or an electrical substation — any vehicle, medical device, IoT installation, or manufacturing facility that is using containers for code distribution.

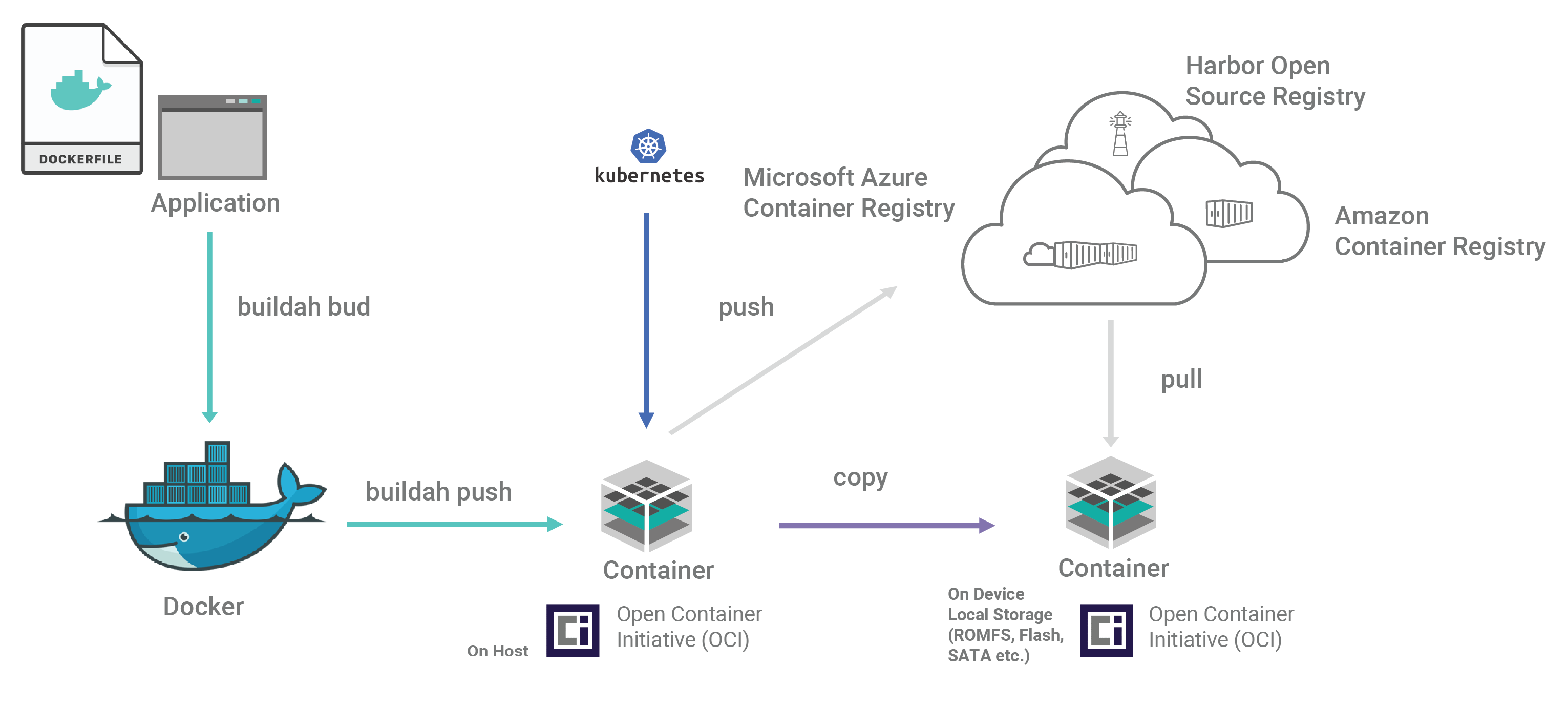

As Figure 2 shows, once application binaries have been developed, a standard Docker file is generated. An open source tool, Buildah, creates the container image, which is a packaged file bundle containing the components of the application. The container is then pushed to the container registry and copied to the targeted hosts as well. Kubernetes provides management and orchestration of the VxWorks OCI containers.

Figure 1: Production process for creating and updating VxWorks-based containers

The container registry itself — a Docker hub, Amazon ECR, Harbor, or any other OCI-compliant registry — is available for secure container access over the lifecycle of the application. Updates posted to the registry can be pulled automatically or manually by any legitimate host using the application — or set of applications — packaged in the container.

The process of distributing the containers can be handled in several different ways. For example, after landing, an aircraft can taxi to the maintenance area, connect to the airport service infrastructure, and pull any recently updated containers from the registry in the system server. The container updates will then be incorporated into the aircraft system. A vehicle equipped with modern wireless capabilities can drive past a 5G base station, receive a transmission with updates in a container, and then proceed to install those updates automatically, once the car is parked at home in the garage.

Because the technologies used in the VxWorks container implementation follow the OCI guidelines to the letter, developers know that containers they build will function reliably across infrastructures that are constructed according to the OCI standard. Proprietary solutions for software deployment, on the other hand, lack the agility and predictability of standards-based solutions and are generally unpopular in the industry for those reasons. In contrast, OCI-compliant tools — following both the image format specification and runtime specification — effectively span the ecosystem composed of container platforms and container engines, as well as standards-based cloud provider environments and on-premises infrastructures.

Figure 2: End-to-end workflow for creating and distributing containers
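
The build-and-publish flow that Figure 2 depicts can be outlined as a short script. This is only a sketch under stated assumptions: the image name `myapp:1.0` and the registry `registry.example.com/embedded` are made up, the `run` helper and the `DRY_RUN` switch are conveniences of this example, and any VxWorks-specific build options are not shown. By default the script only prints the commands, so it can be tried without Buildah installed.

```shell
# Sketch of the Figure 2 flow: build an image from a Dockerfile, then push it to a registry.
# IMAGE and REGISTRY are hypothetical values used only for illustration.
IMAGE="myapp:1.0"
REGISTRY="registry.example.com/embedded"

# run prints the command when DRY_RUN is 1 (the default) and executes it otherwise.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

build_and_push() {
    # Build the container image from the Dockerfile in the current directory.
    run buildah bud -t "$IMAGE" .
    # Publish the image to an OCI-compliant registry.
    run buildah push "$IMAGE" "docker://$REGISTRY/$IMAGE"
}

plan=$(build_and_push)
echo "$plan"
```

Setting `DRY_RUN=0` would execute the two Buildah commands for real, which requires Buildah and credentials for the chosen registry.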

Container Security

If container technology is to become successful in environments that call for heightened security — such as aerospace and defense, automotive applications, energy grids and subsystems, robotics implementations, and so on — extra measures for hardening solutions are needed.

Cloud-native, open source registries typically provide a layer of security when using containers. For example, Harbor employs policies and role-based access control to secure container components. Each container image is scanned to ensure that it is free of known vulnerabilities and then signed as trusted before distribution. For sensitive, mission-critical deployments, Harbor provides a level of assurance when moving containers across cloud-native compute platforms.

Only 45% of global security tech leaders say their company has sufficient security policies and tools in place for use of containers.

Following DevSecOps software development best practices is one of the most effective means of protecting container security. The Department of Defense has published the Container Hardening Guide, which outlines DevSecOps processes that are important for guarding against security breaches.

How Can Wind River Help?

VxWorks

VxWorks is the first real-time operating system (RTOS) in the world to support application deployment through containers. The latest release of VxWorks includes support for OCI containers, enabling you to use traditional IT-like technologies to develop and deploy intelligent edge software better and faster, without compromising determinism and performance.

Wind River Linux

Wind River Linux includes container technology that supports development and orchestration frameworks such as Docker and Kubernetes. It is Docker compatible under OCI specifications, but it is also lighter weight and has a smaller footprint than Docker, which is often a vital need for embedded systems. Delivering a Yocto Project Compatible cross-architecture container management framework, Wind River Linux helps ease and accelerate the use of containers for embedded developers.

Our team brings decades of experience in hardening the security around embedded devices to protect them from cybersecurity threats.

Wind River Helix Virtualization Platform

Wind River Helix™ Virtualization Platform enables heterogeneous systems employing a mix of OSes and requiring determinism and safety certification to leverage the scalability of containers while meeting the often stringent requirements of embedded systems.

Wind River Linux Tutorial - Japan

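Wind River Linux is a commercial embedded Linux for creating Linux distributions that make efficient, waste-free use of the limited resources of embedded products and that support high-value applications. Beyond changing applications and middleware, you can tune device drivers and the kernel, and you can create Linux distributions that run on customized hardware, which is another advantage of Wind River Linux.

Because of budget or lead-time constraints, it is sometimes difficult to get physical semiconductor hardware right away. You may well have been stuck waiting for a new board that just would not arrive. This second installment of the "Let's Try Wind River Linux!" series shows how to use QEMU to run Wind River Linux built from source code without any physical hardware. Please read on and try Wind River Linux for yourself. For more about Wind River Linux, see "Why Wind River Linux Is Chosen" below.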

Create a Linux Distribution from Source Code

The Wind River Linux Build System

Wind River Linux: A Yocto Project Compatible Embedded Linux Build System

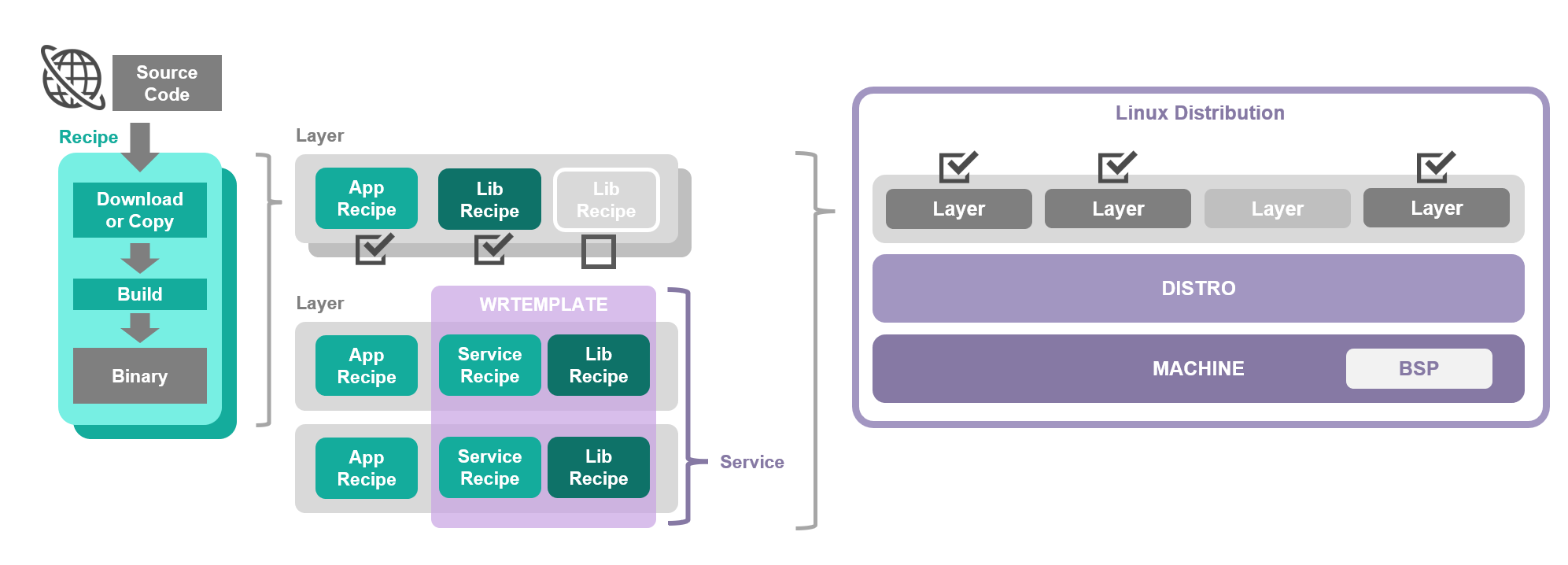

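In Wind River Linux, Linux distributions are created with a Yocto Project compatible build system. The Yocto Project build system determines the composition of the distribution through settings called Recipe, Layer, DISTRO, and MACHINE. In addition to these, the Wind River Linux build system has a setting called WRTEMPLATE, which makes it easier than in the Yocto Project to integrate applications, middleware, and libraries. The role of each setting is as follows.

- Recipe: Defines how to fetch and build source code.

- Layer: Groups Recipes and defines a combination of executables and their related libraries.

- WRTEMPLATE: A customization feature unique to Wind River Linux that integrates functionality across Layers to make building advanced applications smoother. It can define a configuration that includes not only the libraries an application needs but also cooperating applications and related middleware. WRTEMPLATEs are named "feature/*".

- DISTRO: Defines the Linux distribution template that serves as the base for customizing a distribution.

- MACHINE: Defines the BSP for a specific piece of hardware.

Relationship among Recipe, Layer, WRTEMPLATE, DISTRO, and MACHINE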

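Recipe

A Recipe is the finest-grained setting. It defines how to build components such as applications and libraries from source code. The Wind River Linux build system obtains source code from the URL, Git repository, or local file system named in the Recipe and compiles it for the target device (for example, for 64-bit Arm).

Layer

A Layer integrates the applications and libraries produced by Recipes and defines the minimum set of software needed to run an application. Examples of Layers include meta-networking and meta-webserver. Because a Layer brings together the Recipes of interdependent programs, developers can install an application on the target device easily, without worrying about library dependencies. Note that although a Layer contains many Recipes, not all of them are built. Only the Recipes that are enabled by default are built and installed. To include a Recipe that is disabled by default, you must change the configuration file.

WRTEMPLATE

WRTEMPLATE is a configuration feature defined by Wind River Linux itself. It is a setting that spans Layers, defining a configuration that includes not only an application and its libraries but also the other applications and middleware that the application depends on. As a result, simply adding the feature name for the functionality you want (the table below shows some examples) to the configuration file lets you easily build an advanced system in which multiple applications work together.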

| Example WRTEMPLATE | Description |
|---|---|
| feature/gdb | Debugging |
| feature/docker | Containers |
| feature/ntp | Time synchronization |
| feature/selinux | Security |
| feature/xfce | GUI |

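DISTRO and MACHINE

DISTRO and MACHINE define the foundation on which all programs run. DISTRO defines the template for the Linux distribution. It includes the Linux kernel, which is essential for running programs, as well as kernel modules and basic libraries. MACHINE defines the BSP. It is responsible for tying programs to the hardware of the target device.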

| Example DISTRO | Description |
|---|---|
| wrlinux | Wind River Linux LTS |
| wrlinux-graphics | Wind River Linux Graphics LTS |
| wrlinux-tiny | Wind River Linux Tiny LTS |
| nodistro | OpenEmbedded |

| Example MACHINE | Description |
|---|---|
| bcm-2xxx-rpi4 | BSP for Raspberry Pi 4 |
| qemuarm | BSP for a 32-bit Arm virtual machine created with QEMU |
| qemuarm64 | BSP for a 64-bit Arm virtual machine created with QEMU |

Build and Run Your Own Linux Distribution

Video: A Yocto-Based Embedded Linux Distribution Built from Scratch from Source Code

Note: The video uses LTS21, but other LTS versions can be run with similar steps.

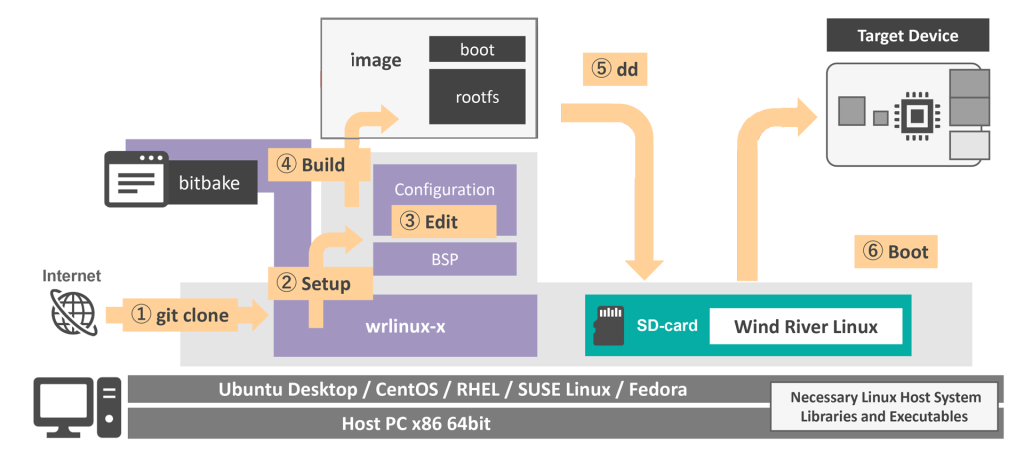

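Now let's use Wind River Linux to create and run a Linux distribution for 64-bit Arm. The steps up to execution follow the orange arrows in the figure below. As in the previous installment, the Linux distribution runs on a virtual Raspberry Pi 4 created with QEMU. For an overview of QEMU, see "Running Wind River Linux on a Host PC Alone."

- Prepare the development/execution environment

- Set up the development environment (figure below: ①)

- Obtain the build system for the target device (figure below: ②)

- Customize the Linux distribution (figure below: ③)

- Build the Linux distribution (figure below: ④, using bitbake)

- Deploy to the virtual SD card (figure below: ⑤); in this procedure, step ④ does this automatically

- Run the Linux distribution (figure below: ⑥)

Steps up to running Linux on the target device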

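Preparing the Development/Execution Environment

Using Wind River Linux requires a host PC with Linux installed. For the recommended host PC requirements, see "Wind River Linux Release Notes: Host System Recommendations and Requirements." Also install the packages listed in "Necessary Linux Host System Libraries and Executables" beforehand. The video uses Ubuntu Desktop 20.04 (x86 64-bit) as the host PC.

Setting Up the Development Environment

First, set up the development environment. On the Wind River Linux web page, click "Evaluate Wind River Linux (including the build system)," fill in the fields required for the download, review the license agreement, and click Submit (figure at right).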

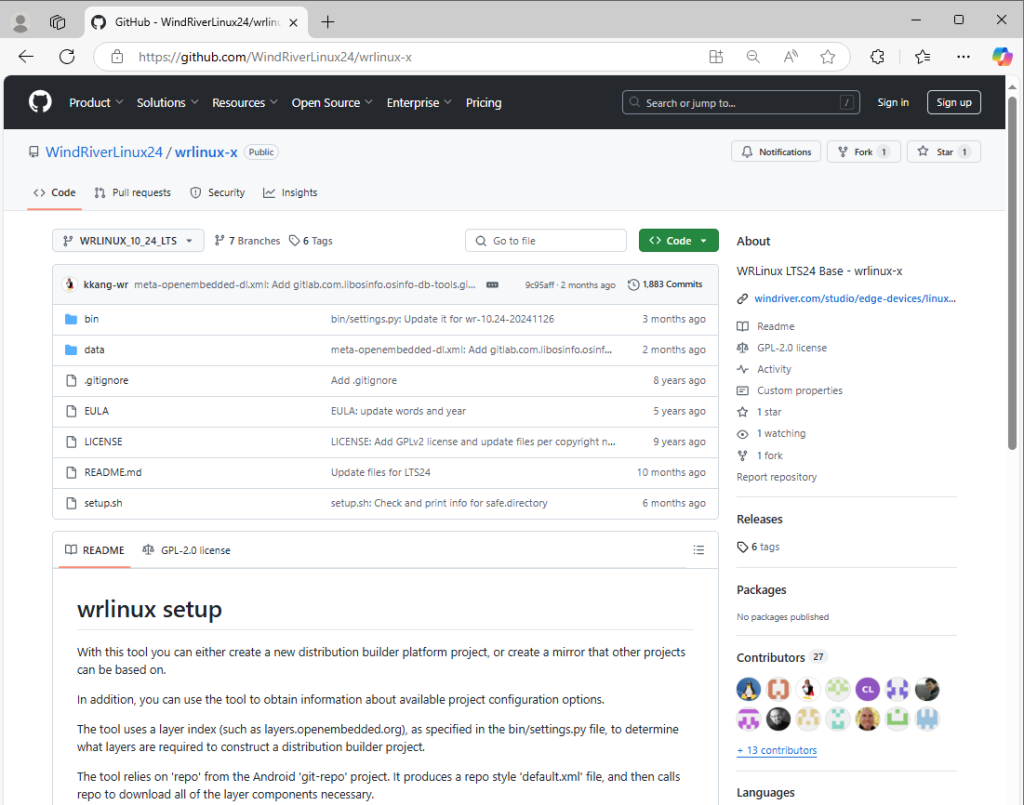

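After you submit the form, a GitHub URL is displayed on the website. Opening this URL takes you to the GitHub page, where you obtain the clone URL to pass to the git command (example in the figure at right). Run git clone with the clone URL and the Wind River Linux LTS branch name "WRLINUX_10_<version>_LTS" to set up the development environment. The following example creates a working directory "codebuild" in the home directory and sets up the development environment inside it.

On the host PC

$ mkdir -p ~/codebuild

$ cd ~/codebuild

$ git clone --branch WRLINUX_10_<version>_LTS \

<clone URL obtained from GitHub>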
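
Because the branch name follows a fixed pattern, a small helper can build it from the LTS version and reject obvious typos. The sketch below assumes the version slot takes the numeric LTS release (for example 21 for LTS21); `wrlinux_branch` is a name invented for this example, and the clone URL is deliberately left as a placeholder because it comes from the GitHub page described above.

```shell
# Sketch: derive the LTS branch name used with "git clone --branch".
# wrlinux_branch is a hypothetical helper, not part of Wind River Linux.
# Assumption: the version slot is the numeric LTS release, e.g. 21.
wrlinux_branch() {
    case $1 in
        ''|*[!0-9]*)
            echo "error: LTS version must be a number, got '$1'" >&2
            return 1
            ;;
    esac
    echo "WRLINUX_10_${1}_LTS"
}

# Usage: print the clone command for LTS21; <CLONE_URL> is a placeholder to fill in.
branch=$(wrlinux_branch 21)
echo "git clone --branch $branch <CLONE_URL>"
```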

Obtaining the Build System for the Target Device

The first development tool you use is "setup.sh". Running setup.sh with the "--list-machines" option displays the list of BSPs that Wind River Linux supports. From this list, specify the name of the BSP for your target device to construct the build system. Because the target here is a virtual Raspberry Pi 4 created with QEMU, select "bcm-2xxx-rpi4" as the BSP. Running setup.sh with the "--machines bcm-2xxx-rpi4" option completes the build system for Raspberry Pi 4.

For details on setup.sh, see "The setup.sh Tool."

On the host PC

$ ./wrlinux-x/setup.sh --list-machines

<the list of available BSPs is displayed>

$ ./wrlinux-x/setup.sh --machines bcm-2xxx-rpi4

<the BSP for Raspberry Pi 4 is obtained>
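
Before starting a long setup run, it can help to confirm that the BSP name you intend to pass to `--machines` really appears in the list. The sketch below assumes the list has been saved to a file and that each BSP name appears there as a whitespace-separated word. The exact output format of `--list-machines` is not reproduced here, and `machine_listed` is a made-up helper.

```shell
# Sketch: check that a BSP name appears in saved "--list-machines" output.
# Assumption: the name occurs as a whole whitespace-separated word in the file.
machine_listed() {
    machine=$1
    list_file=$2
    # Split the file into one word per line, then look for an exact match.
    tr -s ' \t' '\n\n' < "$list_file" | grep -qxF -- "$machine"
}

# Usage with a stand-in list (real content would come from setup.sh --list-machines).
list=$(mktemp)
printf '%s\n' "bcm-2xxx-rpi4" "qemuarm" "qemuarm64" > "$list"
if machine_listed "bcm-2xxx-rpi4" "$list"; then
    echo "bcm-2xxx-rpi4 is available"
fi
```

Matching whole words avoids false positives such as `qemuarm` matching `qemuarm64`.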

After obtaining the development files, run the ". ./environment-setup-x86_64-wrlinuxsdk-linux" command. This adds the PATH entries needed for development work to the system. Next, running ". ./oe-init-build-env <directory name>" creates a build directory containing the configuration files for customizing the Linux distribution. The example below creates a build directory named qemubuild.

On the host PC

$ . ./environment-setup-x86_64-wrlinuxsdk-linux

$ . ./oe-init-build-env qemubuild

$ pwd

/home/UserName/qemubuild/build

$ ls

conf

- qemubuild: the directory in which the build work is performed

Customizing the Linux Distribution

Customize the Linux distribution by editing "conf/local.conf" in the build directory. In the example below, BB_NO_NETWORK is set to '0' so that BitBake is allowed to fetch sources over the network, and MACHINE is set to "bcm-2xxx-rpi4".

On the host PC

$ vi ./conf/local.conf

BB_NO_NETWORK ?= '0'

MACHINE ?= "bcm-2xxx-rpi4"
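
The same edit can be scripted instead of made in vi, which is handy when the build is driven from a CI job. The sketch below is one possible approach, not a Wind River tool: `set_conf_var` is a hypothetical helper that rewrites an existing `NAME ?=` line or appends one, and it runs here against a temporary stand-in file rather than a real `conf/local.conf`.

```shell
# Sketch: set a variable in a BitBake-style conf file without opening an editor.
# set_conf_var is a hypothetical helper; it writes lines of the form NAME ?= "value".
set_conf_var() {
    conf_file=$1
    name=$2
    value=$3
    if grep -q "^${name}[[:space:]]*?=" "$conf_file"; then
        # Replace the existing weak assignment (via a temporary copy).
        sed "s|^${name}[[:space:]]*?=.*|${name} ?= \"${value}\"|" "$conf_file" > "$conf_file.tmp" &&
            mv "$conf_file.tmp" "$conf_file"
    else
        # No assignment yet: append one.
        printf '%s ?= "%s"\n' "$name" "$value" >> "$conf_file"
    fi
}

# Usage on a stand-in file that starts with network access disabled.
conf=$(mktemp)
printf '%s\n' "BB_NO_NETWORK ?= '1'" > "$conf"
set_conf_var "$conf" BB_NO_NETWORK 0
set_conf_var "$conf" MACHINE bcm-2xxx-rpi4
cat "$conf"
```

The helper does not escape characters that are special to sed, so it is only suitable for simple values such as the ones used in this tutorial.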

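Building the Linux Distribution

Use the "bitbake" command to build the Linux distribution. As the bitbake argument, specify "wrlinux-image-std", which denotes the standard build. Also specify "-c populate_sdk_ext" as a command option. This option generates an SDK for developing applications that run on the Linux distribution. When you run bitbake, about 8,000 source code items are downloaded one after another and integrated. Please allow some time for the build to finish.

On the host PC

$ bitbake wrlinux-image-std -c populate_sdk_ext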

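Because the GitHub version collects packages from the open source community, the build can fail when the community's package distribution changes. With the paid version of Wind River Linux, packages are collected from Wind River's servers, so you always have access to high-quality packages that Wind River has thoroughly verified.

After the build completes, check the terminal messages for errors. If an error occurred, its details are shown in red in the build output. After a successful build, the build artifacts are stored in the directories below. The image file to run in QEMU is also included there, so when running in QEMU there is no need to deploy the output.

On the host PC

$ ls tmp-glibc/deploy/images/bcm-2xxx-rpi4/ # the generated Linux distribution

$ ls tmp-glibc/deploy/sdk/ # the set of development tools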
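
In an automated build it is useful to confirm that both output directories were actually populated before moving on. The following is a minimal sketch: `check_artifacts` is a name invented for this example, and the demonstration runs against a stand-in directory tree rather than a real build.

```shell
# Sketch: verify that the image and SDK output directories exist and are not empty.
# check_artifacts is a hypothetical helper; the paths follow the listing above.
check_artifacts() {
    build_dir=$1
    machine=$2
    status=0
    for dir in "$build_dir/tmp-glibc/deploy/images/$machine" "$build_dir/tmp-glibc/deploy/sdk"; do
        # A directory counts as populated if ls -A prints at least one entry.
        if [ -d "$dir" ] && [ -n "$(ls -A "$dir")" ]; then
            echo "ok: $dir"
        else
            echo "missing or empty: $dir" >&2
            status=1
        fi
    done
    return $status
}

# Usage against a stand-in tree with one fake image file and an empty sdk directory.
build=$(mktemp -d)
mkdir -p "$build/tmp-glibc/deploy/images/bcm-2xxx-rpi4" "$build/tmp-glibc/deploy/sdk"
touch "$build/tmp-glibc/deploy/images/bcm-2xxx-rpi4/example.img"
check_artifacts "$build" bcm-2xxx-rpi4 || echo "build output incomplete"
```

The check only looks for the presence of files, so it complements rather than replaces reading the bitbake log for errors.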

Linuxディストリビューションを実行する

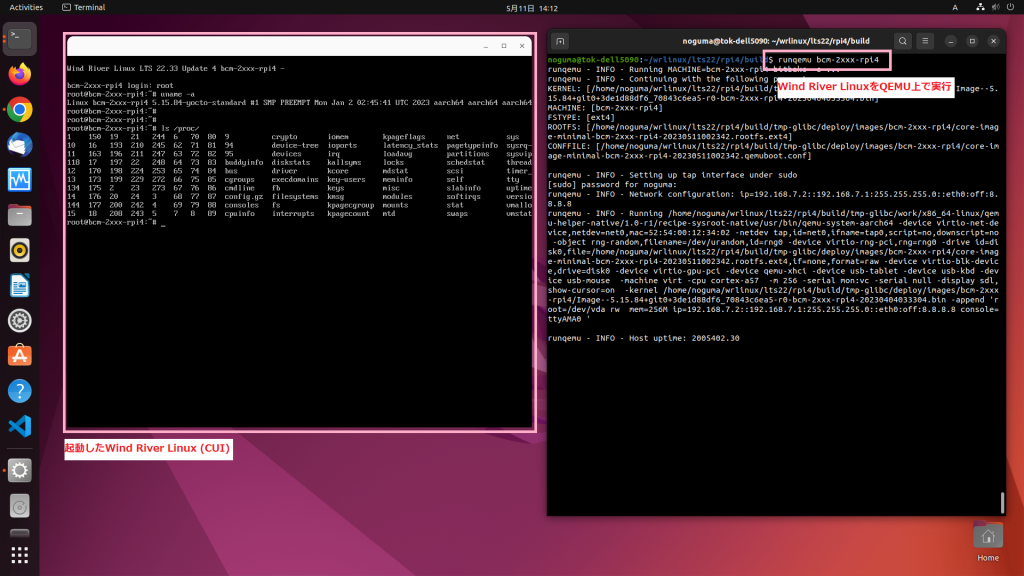

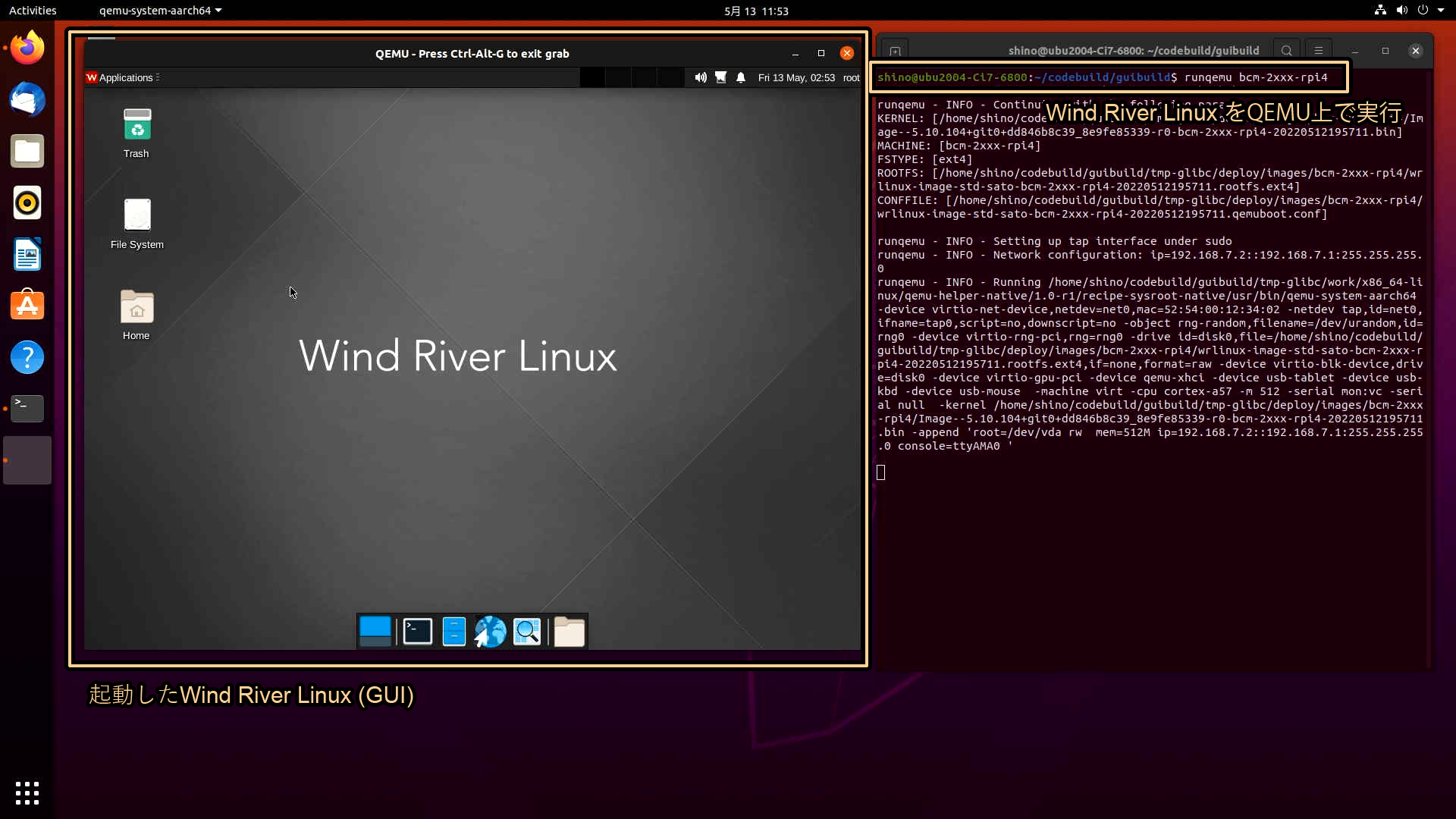

それではRaspberry Pi 4向けにビルドしたイメージをQEMUの仮想Raspberry Pi 4上で実行してみましょう。実行には"runqemu"コマンドを使います。オプションにはMACHINE名"bcm-2xxx-rpi4"を指定します。runqemuコマンドによりQEMUの実行が開始されると、右図のようにCUIのWind River Linuxが起動します。

ホストPC上での操作

$ runqemu bcm-2xxx-rpi4

ソースコードからビルドしたLinuxディストリビューションをQEMUで実行する(CUI)

GUIを備えたLinuxディストリビューションをビルドして実行する

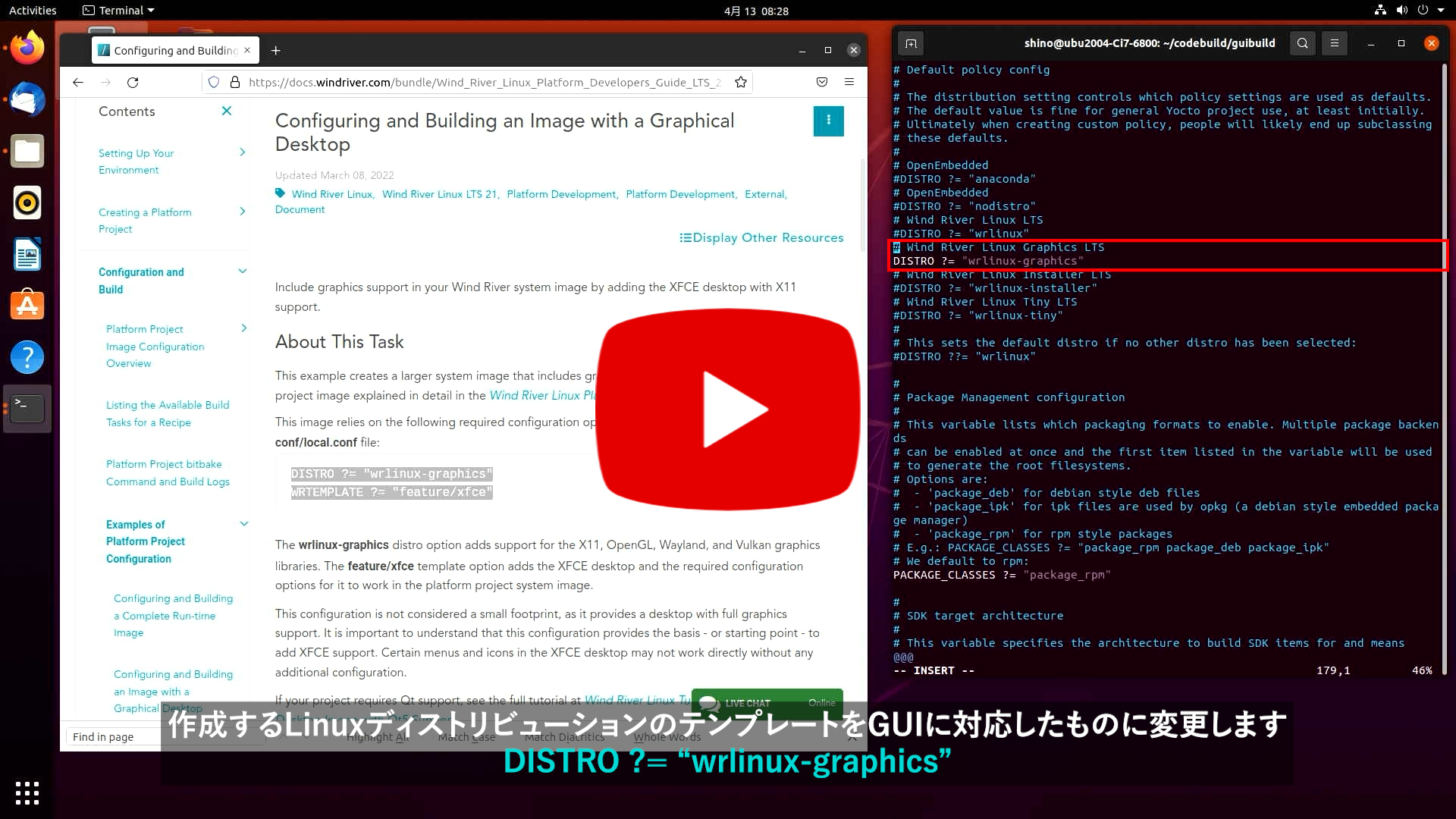

それではLinuxディストリビューションに変更を加えてみましょう。ここではGUI(XFCE)を備えたLinuxディストリビューションを作成します。GUIを実現するための画面表示機能には様々なライブラリやデータ、設定項目が絡んでくるため、大変に思うかもしれませんが、実は前述したLinuxディストリビューションの作成方法とほぼ同じです。変更は以下に示すわずか2つです。

1つ目の変更は"conf/local.conf"の内容です。GUI機能を統合するためにDISTROに"wrlinux-graphics"を指定し、WRTEMPLATEにGUI機能を提供する"feature/xfce"を指定してください。

2つ目の変更はbitbakeのオプションです。GUI機能を有効化するため"wrlinux-image-std-sato"を指定します。

以上でGUIを備えたLinuxディストリビューションを作成することができます。CUI同様に、Linuxディストリビューションのビルドにはbitbakeを使用し、実行にはrunqemuコマンドを使用します。

詳細は「Configuring and Building an Image with a Graphical Desktop」をご参照ください。

ソースコードからビルドしたLinuxディストリビューションをQEMUで実行する(GUI)

$ vi ./conf/local.conf

BB_NO_NETWORK ?= '0'

DISTRO ?= "wrlinux-graphics"

WRTEMPLATE ?= "feature/xfce"

MACHINE ?= "bcm-2xxx-rpi4"

…

$ bitbake wrlinux-image-std-sato -c populate_sdk_ext

…

$ runqemu bcm-2xxx-rpi4
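
Because the text-console and GUI builds differ in only two places, the difference can be captured in a few lines. The sketch below prints the extra `local.conf` settings and the bitbake command for each variant without running anything. `build_plan` and the variant labels `cui` and `gui` are inventions of this example, while the setting values and image names come from the steps above.

```shell
# Sketch: print what changes between the text-console and GUI builds.
# build_plan and the labels "cui"/"gui" are hypothetical names for illustration.
build_plan() {
    case $1 in
        cui)
            image="wrlinux-image-std"
            extra_conf=""
            ;;
        gui)
            image="wrlinux-image-std-sato"
            extra_conf='DISTRO ?= "wrlinux-graphics"
WRTEMPLATE ?= "feature/xfce"'
            ;;
        *)
            echo "unknown variant: $1" >&2
            return 1
            ;;
    esac
    # Settings to add to conf/local.conf, if any, followed by the build command.
    if [ -n "$extra_conf" ]; then
        printf '%s\n' "$extra_conf"
    fi
    echo "bitbake $image -c populate_sdk_ext"
}

# Usage: show the plan for both variants.
for variant in cui gui; do
    echo "== $variant =="
    build_plan "$variant"
done
```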

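That completes the procedure for creating a Linux distribution from source code with Wind River Linux and running it. Other Wind River Linux procedures, such as verification on the x86 architecture and creating a Hello World sample application, are described in "Wind River Linux Platform Development Quick Start, LTS 22", which is available for download.

The next article will show how to develop Linux applications using the SDK.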

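Advancing Airborne Safety with FACE in the Digital Battlespace - Japan

The Open Group Future Airborne Capability Environment Consortium was founded in 2010. The Future Airborne Capability Environment (FACE™) Consortium was started as a government and industry collaboration on software and business practices, with the aim of defining an open avionics environment for all military airborne platforms. Since then, the influence and significance of FACE have grown, and it has become an open standard used in the development and manufacture of airborne defense platforms in the defense industry.

So what kind of open standard is FACE?

Built through government and industry cooperation, the FACE approach is both a software standard and a business strategy, intended to make airborne capabilities more affordable and to shorten the time needed to field new capabilities for military fighter aircraft.

By integrating both technical and business practices, FACE establishes a standard common operating environment that provides easy portability of airborne capabilities across avionics systems. The standard defines the requirements for the architectural segments and the key interfaces that link them. This makes it possible to reuse software components across different hardware computing environments, eases the rapid replacement of old software with new, and improves capability in both upgrades and new systems.

One of the unique aspects of the FACE approach is that, rather than creating new standards, it builds on existing, market-proven standards such as ARINC 653 and POSIX, incorporating and combining them.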

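Airborne Safety with FACE™

Safety is always a key requirement for airborne systems. What role does FACE play in airborne safety, particularly in the digital battlespace? FACE is not a safety standard, but its open-standard goals and approach make it an excellent standard environment for developing avionics safety functions that can be used easily and quickly to build new airborne systems. For this reason, FACE includes profiles for safety systems. Safety engineering methods are outside the scope of the FACE Technical Standard and are left to industry best practices and existing safety processes such as RTCA DO-178C. The question is how avionics system developers can use FACE for airborne safety.

Wind River invited three avionics experts to an online panel discussion on "Airborne Safety with FACE™ in the Digital Battlespace," moderated by Roberto Valla, senior director of aerospace and defense business development at Wind River. The three are aerospace and defense technology experts with broad knowledge of the Open Group Future Airborne Capability Environment Consortium and of avionics technologies and systems.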

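- Levi VanOort, Associate Director, Systems Engineering, Avionics, Collins Aerospace

- Steven VanderLeest, Principal Engineer, Multicore Solutions, Rapita Systems

- Alex Wilson, Director, Aerospace and Defense Industry Solutions, Wind River

These airborne experts from Rapita Systems, Collins Aerospace, and Wind River discussed the following questions.

- What should be considered when applying FACE to establish next-generation avionics standards?

- FACE-conformant technologies are compatible with one another, so how can they be differentiated?

- How can new safety challenges be addressed in a digital world where FACE conformance is essential?

The webinar has been divided into four short chapters for easy viewing and posted on the Wind River website. Select the chapters that interest you.

- Introduction of the panelists

- Introduction to FACE / FACE overview

- FACE challenges

- Q&A

For details, see the webinar "Airborne Safety with FACE™ in the Digital Battlespace." https://www.windriver.com/resources/webinars/airborne-safety-with-face-in-digital-battlespace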

What Is DevOps? | Wind River

DevOps combines software development, IT operations, and quality assurance, three previously separate workflows and IT groups.

As software applications become larger and more complex, teams face an increasing need to coordinate their efforts. New teams are formed specifically for the purpose of carrying out nonfunctional work streams such as testing, development, deployment, or operations. These teams include business analysts, project managers, systems engineers, and other members who would previously have performed these functions as part of different teams.

The DevOps approach to application development brings together the development and operational teams to deliver software faster and more frequently, with the goal of satisfying customer needs better than before.

Types of Development Methods

Traditional Methods

Traditionally, software developers wrote code. When they finished, the application went through QA and then would be deployed into production by IT ops, who arranged for the servers, storage, and other needs to make the code perform as expected. In embedded systems, ops refers to the people who put the software onto the embedded system and oversee the production of whatever device the software powers.

This model worked well for the waterfall method of software development. However, developers spent relatively long periods of time perfecting the embedded system software before turning it over to ops, so the disadvantage was that it was comparatively slow.

Traditional development methods are simply too slow for today’s competitive embedded market.

Agile Methods

Agile methods introduced a new approach to software development, with dev teams working in “scrums” and pushing out releases at a faster clip. What became quickly apparent was that agile teams were creating code so rapidly that it was better to integrate the ops and QA teams into the development process. They all started to work together under the DevOps banner.

Continuous Integration/Continuous Delivery

Another consequence of DevOps relates to the mechanics of integrating and distributing the new code itself. The pace of code releases grew so quickly that only automated software could handle it. Plus, many of the code releases were just small updates to existing applications. It made little sense to do a big uninstall/reinstall routine every day (or every few hours) as new code came out of DevOps teams. To solve this problem, the practice of continuous integration (CI) and continuous delivery (CD) of code has become the norm. CI/CD tools take code and place it right into a production application, without stopping any functions from running. This is like the old “change the tire while the car is moving” concept. But here, it works.

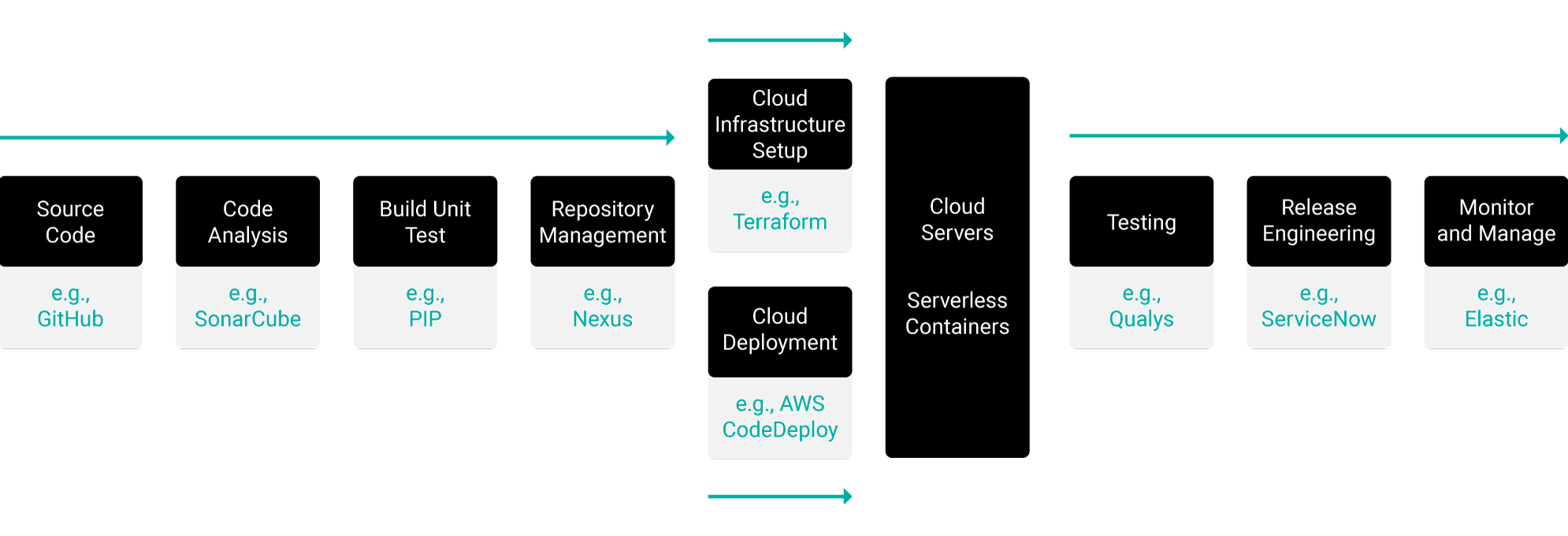

DevOps and CI/CD: A Workflow Comprising Separate but Interdependent Toolsets

Turning DevOps and CI/CD theories into practice requires toolsets that, although technically separate, are interdependent. Figure 1 shows a simplified version of the most common DevOps-to-cloud CI/CD workflow. Each step in the workflow is supported by a specific type of tool.

Figure 1. DevOps and CI/CD as a workflow that relies on numerous separate but interdependent toolsets

For DevOps and CI/CD to be successful, development, test, security, and operations teams must be able to collaborate in real time as code moves through this workflow. Their software development tools and cloud platforms must support the tools and workflow in order to make the entire process work. (The examples shown here should not be viewed as authoritative. They merely represent a small sampling from a large pool of DevOps and CI/CD technologies.)

Organizations need ways to effectively integrate portions of the embedded development process to produce better software faster.

Pushing DevOps into the Embedded Systems World

DevOps is becoming popular with embedded systems makers for a combination of positive and negative reasons. The positives include the ability to move better products to market faster. Done right, DevOps makes the once sequential development, QA, security, and operations schedules overlap to some extent — essentially becoming DevSecOps. There are fewer iterations in every cycle, so everything advances more rapidly.

CI/CD methods speed up time-to-market, improve collaboration, and produce better products … but organizations face challenges when trying to implement these new methodologies.

The negative factors that are forcing companies to embrace DevOps are largely related to personnel limitations. Developers who can write good code and who understand embedded systems are hard to find. Many design teams are facing a shortage of developers who understand embedded systems and their role in specific industries, such as aerospace or automotive.

This basic shortage of people is exacerbated by the requirement for developers to have security clearances. DevOps is critical in this context, because it enables a small, limited talent pool to produce more software than ever before.

Challenges in Implementing DevOps

Culture

Not every embedded systems company has an easy time making the move to DevOps, even when there is a strong intent to get it going. One issue that comes up is a lack of coordination between groups. Simply declaring that DevOps will be the mode of software development and release is inadequate to get teams to integrate their processes.

Effective CI/CD implementation requires a shift in management processes and company culture.

Adopting DevOps must be a revolutionary change in management processes. Team members need to be trained on the new methodologies and tools. They need the chance to ask questions and determine how these novel methods will work at their specific organization. DevOps and CI/CD are cultural shifts as much as they are technical and procedural.

Security

Security can also impede the progress of DevOps. Application security can be challenging, and speeding up the development process doesn’t automatically mitigate risk. If anything, it increases the dangers. You need to ensure security throughout the entire embedded development process.

Hardware Access

Hardware can be a barrier, too. In traditional development, the dev team had to code for a known piece of target hardware, such as a specific operating system on a familiar processor and circuit board combination. As the overall process accelerates, it gets harder to provide target hardware quickly enough for the DevOps team. Some hardware may be extremely expensive, of limited availability, or not even built yet.

Using the right tools, such as Wind River Simics®, eliminates hardware roadblocks, enabling faster development of high-quality embedded products.

How Can Wind River Help?

The Wind River DevOps Environment

Although many data center toolsets already come with DevOps and CI/CD-friendly functionality, in the embedded world most development is still being done in the traditional waterfall manner. Wind River is leading the way in modern development by providing robust agile functionality in every product release and roadmap.

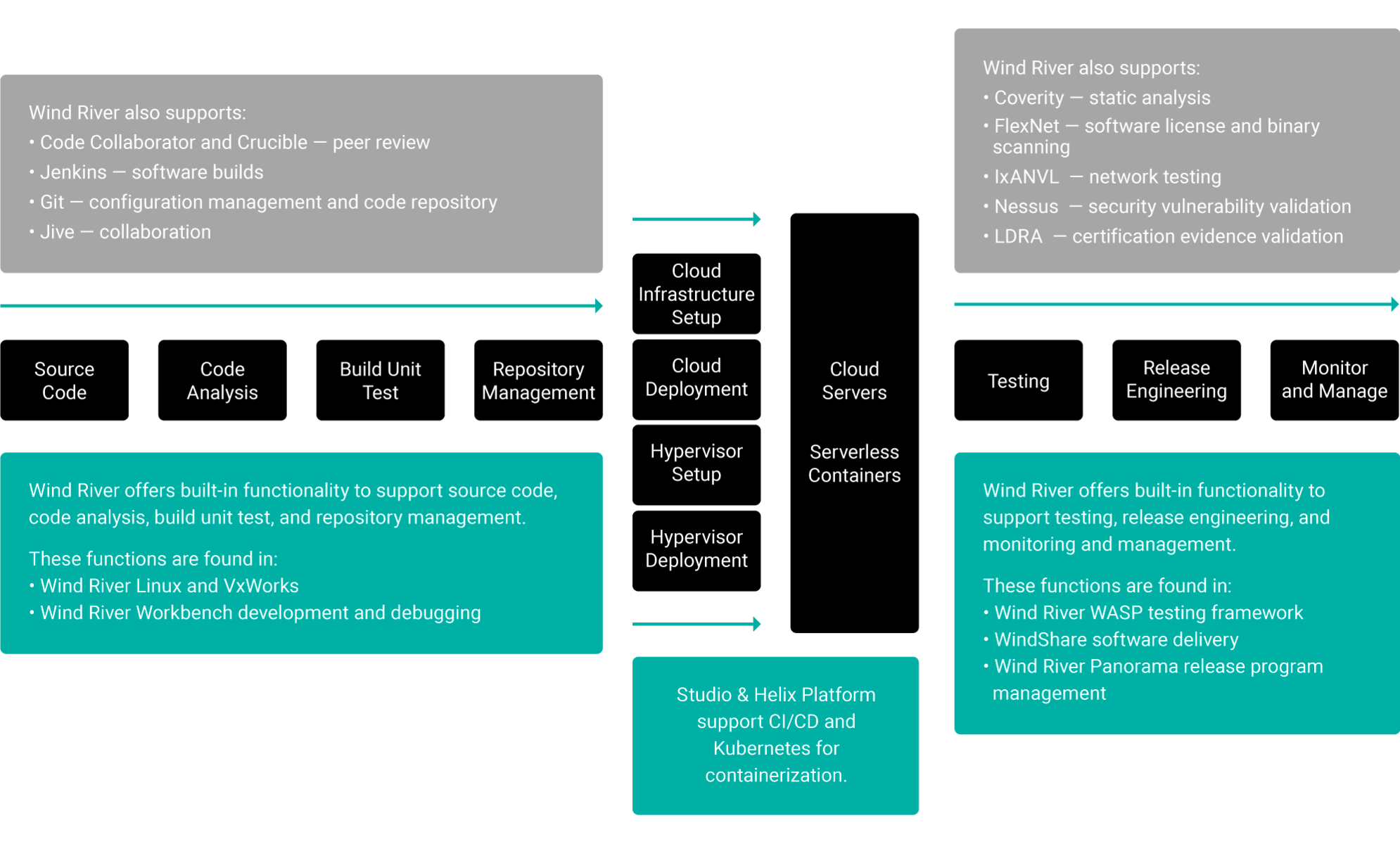

Wind River products now enable the full DevOps-CI/CD workflow. To make this successful for our customers, we started with our own dev-test-release processes. Today, products such as Wind River Linux, VxWorks®, and Wind River Helix™ Virtualization Platform are produced using our own DevOps environment. We have learned a great deal about the unique needs of these methodologies in the embedded systems context. Our insights into the process have led to an effective DevOps-CI/CD stack, as depicted in Figure 2.

Figure 2. Wind River products either directly offer DevOps and CI/CD functionality or support a range of industry-standard toolsets in each functionality area of the workflow

DevOps with Wind River Products

Wind River Linux and VxWorks

The Wind River commercially supported Linux operating system and accompanying dev-test toolsets enable quick embedded development from prototype to production. VxWorks, the industry’s leading real-time operating system (RTOS), offers comparable functionality. Both support the standard functions in the development stage of the DevOps workflow. These include source code creation, code analysis, build and unit test, and repository management. If our customers have their own preferred tools, Linux and VxWorks support such tools as Jive, Git, and Jenkins to allow for additional functionality.

Wind River Linux also supports CI/CD pipeline tools such as OSTree, an upgrade system for Linux-based operating systems that makes it easier to update capabilities on deployed devices. OSTree performs atomic upgrades of complete file system trees, which is what CI/CD on a running system requires: in effect, changing the tire while the car is moving.
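
The idea of an atomic upgrade can be illustrated with a small Python sketch. It is not OSTree’s implementation or API; it only shows the general technique of preparing a complete new tree next to the running one and then switching a single pointer in one step. The directory names and the `activate` helper are hypothetical, and the sketch assumes a POSIX file system where renaming over an existing symlink is atomic.

```python
import os
import tempfile


def activate(root: str, deployment: str) -> None:
    """Atomically point root/current at the given deployment directory."""
    link = os.path.join(root, "current")
    tmp_link = os.path.join(root, "current.tmp")
    # Build the new symlink under a temporary name first.
    if os.path.lexists(tmp_link):
        os.remove(tmp_link)
    os.symlink(deployment, tmp_link)
    # os.replace renames over the old link in a single step, so readers
    # see either the old tree or the new one, never a half-updated mix.
    os.replace(tmp_link, link)


def make_deployment(root: str, name: str, version: str) -> str:
    """Create a complete (toy) file system tree for one release."""
    path = os.path.join(root, name)
    os.makedirs(path)
    with open(os.path.join(path, "VERSION"), "w") as f:
        f.write(version)
    return path


def current_version(root: str) -> str:
    """Read the version of whichever tree 'current' points at."""
    with open(os.path.join(root, "current", "VERSION")) as f:
        return f.read()


root = tempfile.mkdtemp()
old = make_deployment(root, "deploy-1", "1.0")
new = make_deployment(root, "deploy-2", "2.0")

activate(root, old)
version_before = current_version(root)
activate(root, new)   # upgrade
version_after = current_version(root)
activate(root, old)   # rollback is the same operation
version_rolled_back = current_version(root)
```

Because the previous tree is left untouched, rolling back a bad release is the same pointer switch in the other direction.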

At runtime, both Wind River Linux and VxWorks embody qualities that make them ideal for DevOps and CI/CD in the embedded space. This includes the capability of working with container technology to enable rapid application and microservices development and deployment via embedded systems DevOps.

Abstraction of App Code from the OS

Abstraction of application code from the underlying operating system and hardware stack is an essential factor in making DevOps-CI/CD work. Changes to application code are frequent in DevOps-CI/CD. If a new build has issues, it can quickly be rotated out of production and fixed.

The OS layer is not so forgiving, especially when changes are occurring in a live production environment. For this reason, VxWorks supports industry standard abstraction frameworks. This is particularly important in embedded systems that must conform to tightly controlled industry standards. Without this support, it would be nearly impossible to use DevOps for real-time embedded systems.

Essential to DevOps-CI/CD is the ability to abstract application code from the underlying OS and hardware stack.
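
As a minimal sketch of this kind of abstraction, the Python example below writes application logic against an interface instead of against a specific board or OS. The `Gpio` interface, the `SimulatedGpio` backend, and the `blink_once` function are hypothetical names chosen for illustration; they are not part of VxWorks or any Wind River API.

```python
from typing import Protocol


class Gpio(Protocol):
    """Hypothetical abstraction layer the application codes against."""

    def write(self, pin: int, high: bool) -> None: ...

    def read(self, pin: int) -> bool: ...


class SimulatedGpio:
    """In-memory backend, usable in a CI pipeline without a board."""

    def __init__(self) -> None:
        self._pins: dict[int, bool] = {}

    def write(self, pin: int, high: bool) -> None:
        self._pins[pin] = high

    def read(self, pin: int) -> bool:
        return self._pins.get(pin, False)


def blink_once(gpio: Gpio, pin: int) -> list[bool]:
    """Application logic: depends only on the Gpio interface."""
    states = []
    for level in (True, False):
        gpio.write(pin, level)
        states.append(gpio.read(pin))
    return states


states = blink_once(SimulatedGpio(), pin=17)
```

A backend for real hardware would implement the same two methods, so the application code could be rebuilt and redeployed without touching the layer beneath it.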

Industry standards supported by VxWorks include:

- Robot Operating System (ROS2): Software libraries and tools for creating robotic applications.

- Adaptive AUTomotive Open System ARchitecture (AUTOSAR): A worldwide development partnership of automotive entities that creates an open and standardized software architecture for automotive electronic control units (ECUs).

- The Open Group’s Future Airborne Capability Environment (FACE™) Technical Standard: An open real-time standard for avionics that makes safety-critical computing operations more robust, interoperable, portable, and secure.

Wind River Helix Virtualization Platform and Wind River Studio Cloud Platform

Embedded systems developers using the DevOps-CI/CD workflow can deploy their code onto Helix Platform or Wind River Studio Cloud Platform. Helix Platform enables a single-compute system on edge devices to run multiple OSes and mixed-criticality applications. This approach is becoming increasingly popular with embedded systems makers who want to put abstraction to work, updating one application on a piece of hardware without having to reinstall the OS.

Studio Cloud Platform is a production-grade Kubernetes solution. It is designed to make 5G possible by solving the operational problems of deploying and managing distributed edge networks at scale. Kubernetes support makes it possible for embedded systems DevOps teams to perform CI/CD on individual containers. Studio also supports virtualization and a range of operating systems, including Linux, VxWorks, and others.

Wind River Simics

Simics, a full-system simulator, solves the problem of hardware access. Its advanced software can replicate the functionality of many kinds of hardware and operating systems. It can also model an array of peripherals, boards, and networks. This technology allows developers and QA teams to code for a piece of hardware they don’t have or that may not even exist. For instance, Simics can mimic hardware functions based on the “tape-out” of a proposed circuit or board.

Simics enables developers, QA, and ops teams to model large, interconnected systems. For example, teams can show how a piece of software will run on multiple combinations of devices, architectures, and operating systems. Once your developers have created a model of a system in Simics, you can simulate many different operational scenarios, such as deterministic bug re-creation.
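
Deterministic bug re-creation relies on the simulated system behaving identically whenever it is given the same inputs. The Python sketch below illustrates that principle only; it does not use Simics or its API. The `run_scenario` function, the toy fault condition, and the seed values are all hypothetical.

```python
import random


def run_scenario(seed: int, steps: int = 1000):
    """Run a toy simulation; return the step at which a fault occurs, or None."""
    rng = random.Random(seed)  # all nondeterminism comes from this seed
    queue_depth = 0
    for step in range(steps):
        # Simulated event arrivals and servicing, driven by the seeded RNG.
        queue_depth += rng.randint(0, 2)
        queue_depth = max(0, queue_depth - rng.randint(0, 2))
        if queue_depth > 8:  # toy "bug": queue overflow
            return step
    return None


# Search for a seed that triggers the fault, then replay it.
failing_seed = next(s for s in range(10_000) if run_scenario(s) is not None)
first_run = run_scenario(failing_seed)
replay = run_scenario(failing_seed)
```

Since every source of variation is derived from the seed, replaying the failing seed reaches the fault at the same step each time, which is what makes an intermittent bug debuggable.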

These capabilities, paired with built-in collaboration tools, help radically speed up dev, QA, and ops processes. The dev and QA teams don’t have to spend time setting up physical development labs, and the ops teams get an advance look at how the hardware deployment will work. The result is higher-quality code that’s easier to support — because it’s already been tested in many different potential configurations.

Testing and Monitoring Tools

As the DevOps-CI/CD workflow releases code into production, either on Helix Platform or Studio, Wind River tools provide essential functions for testing and monitoring the code. Wind River WASP is a proven testing framework for code entering production. Wind Share manages software delivery, while Wind River Panorama facilitates the release program. In addition, Wind River supports industry-standard tools for many of these functions, including Coverity for static analysis, Nessus for security vulnerability scanning, and Achilles for robustness testing.

Wind River Professional Services

Wind River® Professional Services puts security first. We offer an innovative approach by combining extensive security expertise with industry-leading software and solutions. We start with a comprehensive assessment to determine how to ensure security across the development process.

Our Professional Services team uses the assessment to identify how to assist you with:

- Design: Determine and identify potential issues before any code is written.

- Implementation: Review and optimize software configurations and settings before testing.

- Testing: Make improvement recommendations after the code has been written but before it is deployed in the field.

- Post-deployment: Identify continuous improvement opportunities that don’t require platform changes once devices are deployed. Some security enhancements can be completed through organizational measures and corresponding controls.

Our team brings decades of experience in hardening the security around embedded devices to protect them from cybersecurity threats.

James Webb Space Telescope Successfully Reaches L2 Orbit

Wind River Technology Helps Achieve a New Milestone in Space

Jan 24, 2022 • Aerospace & Defense • Author: Paul Parkinson

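We are delighted that the James Webb Space Telescope, the ultimate intelligent system, has safely arrived at its L2 orbit. Congratulations, NASA Goddard Space Flight Center!

In the month since its successful launch, the James Webb Space Telescope has traveled 1.5 million kilometers to reach its L2 orbit. Once it settles into this metastable orbit, it will begin the next exciting phase of its mission: looking back in time at the most distant observable galaxies of the early universe to reveal how they evolved and how stars and planetary systems were born.

So far, the James Webb Space Telescope has passed through many critical steps: launch, separation from the rocket, deployment of the folded solar array, trajectory corrections, deployment of the sunshield that protects the telescope from sunlight and other sources, and deployment of the primary and secondary mirrors. All of these successes are the result of the expertise and dedication of NASA, its partners, and their scientists and engineers. In the era of space tourism, it is easy to forget that space is a harsh and unforgiving environment. There are no second chances, so failure is not an option for mission-critical systems.

VxWorks has been deployed in numerous space programs. It was also selected for the James Webb Space Telescope, where it provides the OS services layer for the ISIM science payload application running on the radiation-hardened processor of the ISIM (Integrated Science Instrument Module) (see Figure 21 of the NASA ISIM conference paper). We are proud of the role VxWorks plays in this important mission. Safety, security, and reliability are part of Wind River’s DNA. This DNA drives the development and evolution of the VxWorks real-time OS (RTOS), which is part of Wind River Studio.

The ISIM houses several instruments, including the Near-Infrared Camera (NIRCam), the Near-Infrared Spectrograph (NIRSpec), and the Mid-Infrared Instrument (MIRI). These process image data from the most distant observable galaxies and the first luminous objects, whose light has been redshifted across the electromagnetic spectrum by the continual expansion of the universe.

Wind River joins everyone in celebrating this remarkable NASA achievement. We look forward to the scientific advances to come and to seeing an answer to the challenge the late Carl Sagan posed in his book “Cosmos”: “We must understand the Cosmos as it is and not confuse how it is with how we wish it to be.”

For about 30 years, Wind River has provided NASA with its most proven software platform, sending numerous intelligent systems into space and enabling some of the most important space missions in history. For details on the role and track record of Wind River technology in space, see the following: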

https://www.windriver.com/japan/inspace