What Is Virtualization?

Virtualization in systems development is the creation of software that simulates hardware functionality. The result is a virtual system that is more economical and flexible than one that relies entirely on dedicated hardware.

Virtualization is enabled by hypervisors that communicate between the virtual and physical systems and allow the creation of multiple virtual machines (VMs) on a single piece of hardware. Each VM emulates an actual computer and can therefore execute a different operating system. In other words, one computer or server can host multiple operating systems.

Because of its versatility and efficiency, virtualization has gained significant momentum in recent years. What’s more, many of today’s industrial control systems were installed 30 years ago and are now outdated. While that legacy infrastructure provided a stable platform for control systems for many years, it lacks flexibility, requires costly manual maintenance, and does not allow valuable system data to be easily accessed and analyzed.

Virtualization overcomes the limitations of legacy control systems infrastructure and provides the foundation for the Industrial Internet of Things (IIoT). Control functions that were previously deployed across the network as dedicated hardware appliances can be virtualized and consolidated onto commercial off-the-shelf (COTS) servers. This leverages the most advanced silicon technology and also reduces capital expenditure, lowers operating costs, and maximizes efficiency for a variety of industrial sectors, including energy, healthcare, and manufacturing.

For developers of embedded systems, virtualization brings new capabilities and possibilities. It allows system architects to overcome hardware limitations and use multiple operating systems to design their devices, while bringing high application availability and exceptional scalability.

Changes in Embedded Systems

Drivers of change in embedded systems design include improvements in hardware as well as the continuing evolution of software development methods. At the hardware level, it’s now possible to do more with a single CPU. Rather than host just one application, new, multi-core systems-on-chip (SoCs) can support multiple applications on a single hardware platform — even while maintaining modest power and cost requirements. At the same time, advances in software development techniques are producing systems that are more software-defined and fluid than their predecessors.

The more things change, the more they stay the same. The core requirements for embedded systems are not going away:

- Security: Cyberattacks have become more common, while completely isolated systems are becoming rarer. Embedded engineers are taking security more seriously than ever before.

- Safety: The system must be able to ensure that it does not have an adverse effect on its environment, whatever that might be. Systems in the industrial, transportation, aerospace, automotive, and medical sectors can cause death or environmental disaster if their embedded systems malfunction. Because of this, determinism — predictability and reliability of performance — is of paramount importance. A failure in one zone should not trigger a failure of the entire system.

- Reliability: An embedded system must always perform as expected. It should produce the same outcome, in the same time frame, whether it is activated for the first or the millionth time. “Not enough” or “too late” are not options in systems that cannot fail.

- Certifiability: The certification process is a critical and costly part of development for many embedded systems. Certification in legacy systems must be maintained and leveraged, while ease of certification for future systems must be ensured.
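The reliability and determinism requirements above can be made concrete with a small timing check. The following is a minimal Python sketch, not a real-time measurement tool: the names `check_deadline` and `control_step` and the 50 ms budget are hypothetical, and Python on a general-purpose OS cannot itself provide hard real-time guarantees. It only illustrates the idea that every run must produce the same result and that the worst-case run must finish within its time budget.

```python
import time
from typing import Callable, List, Tuple


def check_deadline(task: Callable[[], int], runs: int,
                   deadline_s: float) -> Tuple[bool, float, bool]:
    """Run `task` repeatedly and return
    (deadline_met, worst_case_seconds, same_result_every_run)."""
    durations: List[float] = []
    results = set()
    for _ in range(runs):
        start = time.perf_counter()
        results.add(task())
        durations.append(time.perf_counter() - start)
    worst = max(durations)
    # The worst case matters, not the average: one late run is a failure.
    return worst <= deadline_s, worst, len(results) == 1


def control_step() -> int:
    # Hypothetical stand-in for one iteration of a control loop.
    return sum(range(1000))


met, worst, stable = check_deadline(control_step, runs=100, deadline_s=0.05)
print(f"deadline met: {met}, worst case: {worst * 1e6:.1f} us, "
      f"same result every run: {stable}")
```

The check deliberately reports the slowest run rather than the mean, because "too late" on even one activation counts as a failure in a system that cannot fail.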

Bridging Embedded and Cloud-Native Technologies

Right now, many manufacturers are facing end-of-life for their legacy embedded systems. These decades-old systems may be insecure, unsafe, or unable to meet current certification requirements. They need to be upgraded or replaced, which is expensive — and sometimes not even possible.

At the same time, the workforce is changing. The engineers who built the original design are retiring, and the new workforce has been schooled in different approaches.

Arguably, an even bigger problem is posed by requirements for shortening development cycles. While it may once have been viable to take a year or more to create a fixed-function embedded system on a distinct piece of hardware, today’s economy demands a quicker time-to-market.

Nonetheless, many legacy embedded systems are here for the long term: a lifecycle of 35–45 years is not uncommon for industrial systems. They may not be modern, but the machines they run were built to last. Industrial control systems, for example, can have multi-decade lives, even if their digital components are hopelessly out of date.

Advantages of Virtualization in Embedded Systems

Fortunately, advances in hardware and virtualization have occurred even as challenges have beset the world of embedded systems. It is now possible to overcome most of the difficulties inherent in having separate, purpose-built embedded systems running on separate proprietary hardware. This is achieved by consolidating each separate embedded system, with its application and operating system, into its own virtual machine, all on a single platform and hardware architecture.

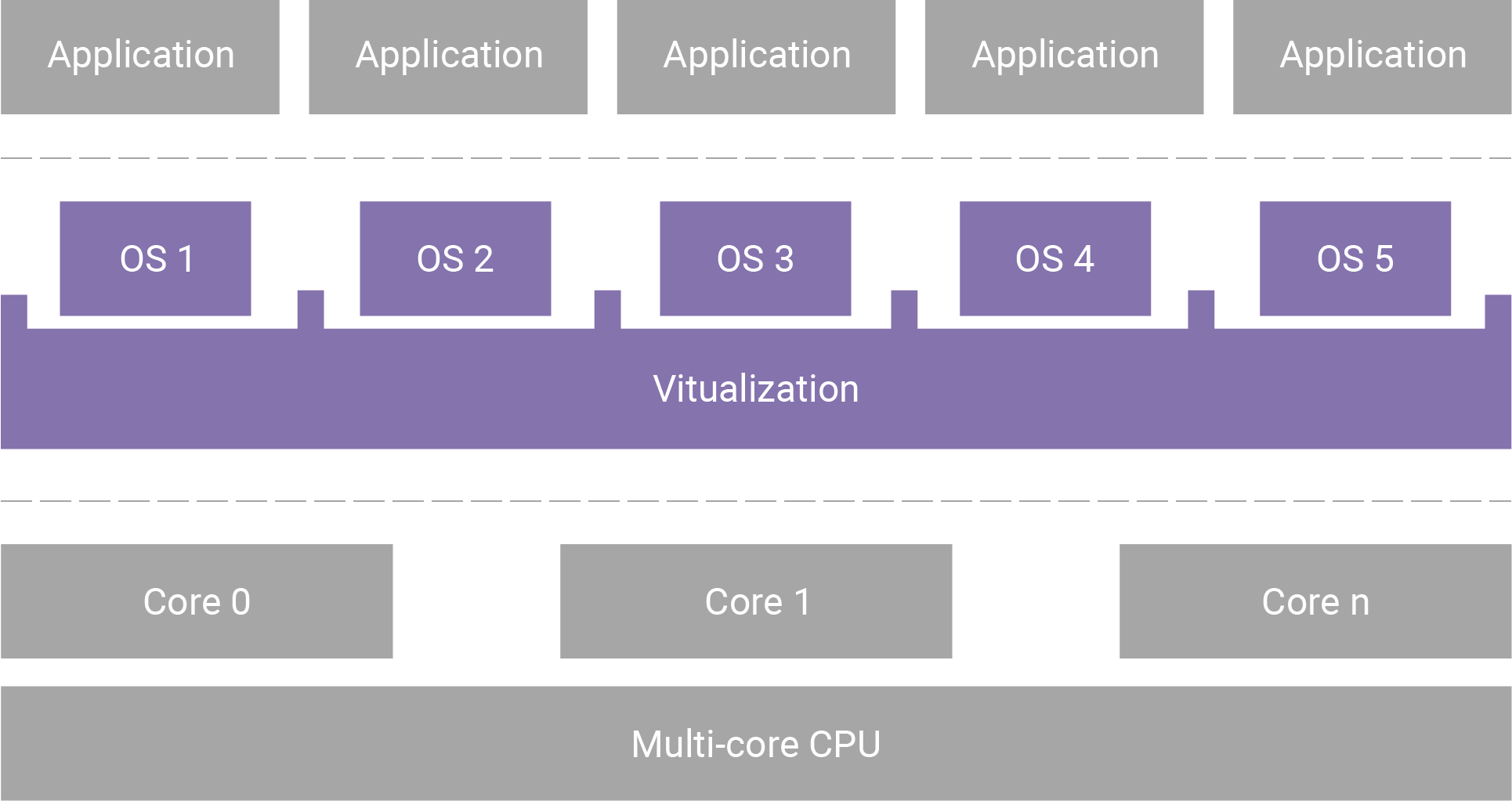

As depicted in Figure 1, virtualization can place multiple embedded systems, each running its own OS, on one multi-core silicon hardware system. Advances in silicon design, processing power, and virtualization technology make this possible. The same silicon can host more than one version of Linux along with multiple RTOSes and other common legacy OSes.

Figure 1. Reference architecture for multiple embedded systems running on a single processor using virtualization
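The consolidation shown in Figure 1 can be pictured as a planning step that gives each guest its own cores on a shared multi-core platform. The sketch below is illustrative only and does not represent any particular hypervisor's configuration format; the `Guest` type, the `plan_partitions` function, and the guest names and sizes are all assumptions made for the example.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Guest:
    name: str
    os: str
    cores: int       # dedicated cores requested
    memory_mb: int   # dedicated memory requested


def plan_partitions(guests: List[Guest], total_cores: int,
                    total_memory_mb: int) -> Dict[str, List[int]]:
    """Assign each guest a dedicated, contiguous set of core IDs.

    Raises ValueError if the guests do not fit on the platform.
    """
    if (sum(g.cores for g in guests) > total_cores
            or sum(g.memory_mb for g in guests) > total_memory_mb):
        raise ValueError("guests do not fit on this platform")
    plan: Dict[str, List[int]] = {}
    next_core = 0
    for g in guests:
        plan[g.name] = list(range(next_core, next_core + g.cores))
        next_core += g.cores
    return plan


# Hypothetical workloads that previously ran on three separate boxes.
guests = [
    Guest("motor-control", "RTOS", cores=1, memory_mb=256),
    Guest("hmi", "Linux", cores=2, memory_mb=2048),
    Guest("legacy-logger", "legacy OS", cores=1, memory_mb=512),
]
plan = plan_partitions(guests, total_cores=4, total_memory_mb=4096)
print(plan)  # {'motor-control': [0], 'hmi': [1, 2], 'legacy-logger': [3]}
```

Dedicating whole cores to each guest is one simple policy; real platforms may also share cores between guests under a scheduler, which this sketch does not model.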

Virtualization succeeds in abstracting the embedded system application and its OS from the underlying hardware. As a result, it becomes possible to overcome many of the most serious challenges arising from legacy embedded systems. The advantages for engineers include:

- A significant increase in scalability and extensibility

- Support for open frameworks and reuse of IP across devices

- The ability to build solutions on open, standardized hardware that offers more powerful processing capabilities

- Simplification of design and accompanying acceleration of time-to-market

- Application consolidation within the device, which reduces the hardware footprint and costs related to the bill of materials (BOM)

- A gradual learning curve, given a familiar OS and programming languages that can be deployed in the virtualized system

- The ability to run multiple OSes and applications side by side

- Isolation of each operating system and application instance, providing additional security and allowing both safety-certified operating environments and “unsafe” applications

- Easier upgrades via newer methodologies such as DevOps, which simplify the rapid rollout of new features

- Faster response to security threats
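The isolation benefit in the list above can be expressed as a simple rule: no two partitions may share a core or a memory region. The following Python sketch checks that rule for a proposed layout. It is a toy model under stated assumptions, not how any specific hypervisor enforces isolation; the `Partition` type, the function names, and the example addresses are hypothetical.

```python
from dataclasses import dataclass
from itertools import combinations
from typing import FrozenSet, List


@dataclass(frozen=True)
class Partition:
    name: str
    cores: FrozenSet[int]
    mem_start: int  # first byte of the partition's memory region
    mem_size: int   # length of the region in bytes


def overlaps(a: Partition, b: Partition) -> bool:
    """True if two partitions share a core or any byte of memory."""
    shared_cores = bool(a.cores & b.cores)
    shared_memory = (a.mem_start < b.mem_start + b.mem_size
                     and b.mem_start < a.mem_start + a.mem_size)
    return shared_cores or shared_memory


def isolation_violations(parts: List[Partition]) -> List[str]:
    """List every pair of partitions that is not fully isolated."""
    return [f"{a.name} <-> {b.name}"
            for a, b in combinations(parts, 2) if overlaps(a, b)]


# A safety-certified guest next to a noncertified one (hypothetical layout).
good = [
    Partition("brake-control", frozenset({0}), 0x0000_0000, 0x1000_0000),
    Partition("infotainment", frozenset({1, 2}), 0x1000_0000, 0x4000_0000),
]
# Same layout, but the noncertified guest is wrongly given core 0 as well.
bad = [
    good[0],
    Partition("infotainment", frozenset({0, 1}), 0x1000_0000, 0x4000_0000),
]
print(isolation_violations(good))  # []
print(isolation_violations(bad))   # ['brake-control <-> infotainment']
```

A check like this would run at configuration time, so that a layout mixing certified and noncertified guests is rejected before anything boots.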

How Can Wind River Help?

Wind River Studio

Wind River® Studio is the first cloud-native platform for the development, deployment, operations, and servicing of mission-critical intelligent edge systems that require security, safety, and reliability. It is architected to deliver digital scale across the full lifecycle through a single pane of glass to accelerate transformative business outcomes. Studio includes a virtualization platform that hosts multiple operating systems, as well as simulation and digital twin capabilities for the development and deployment process.

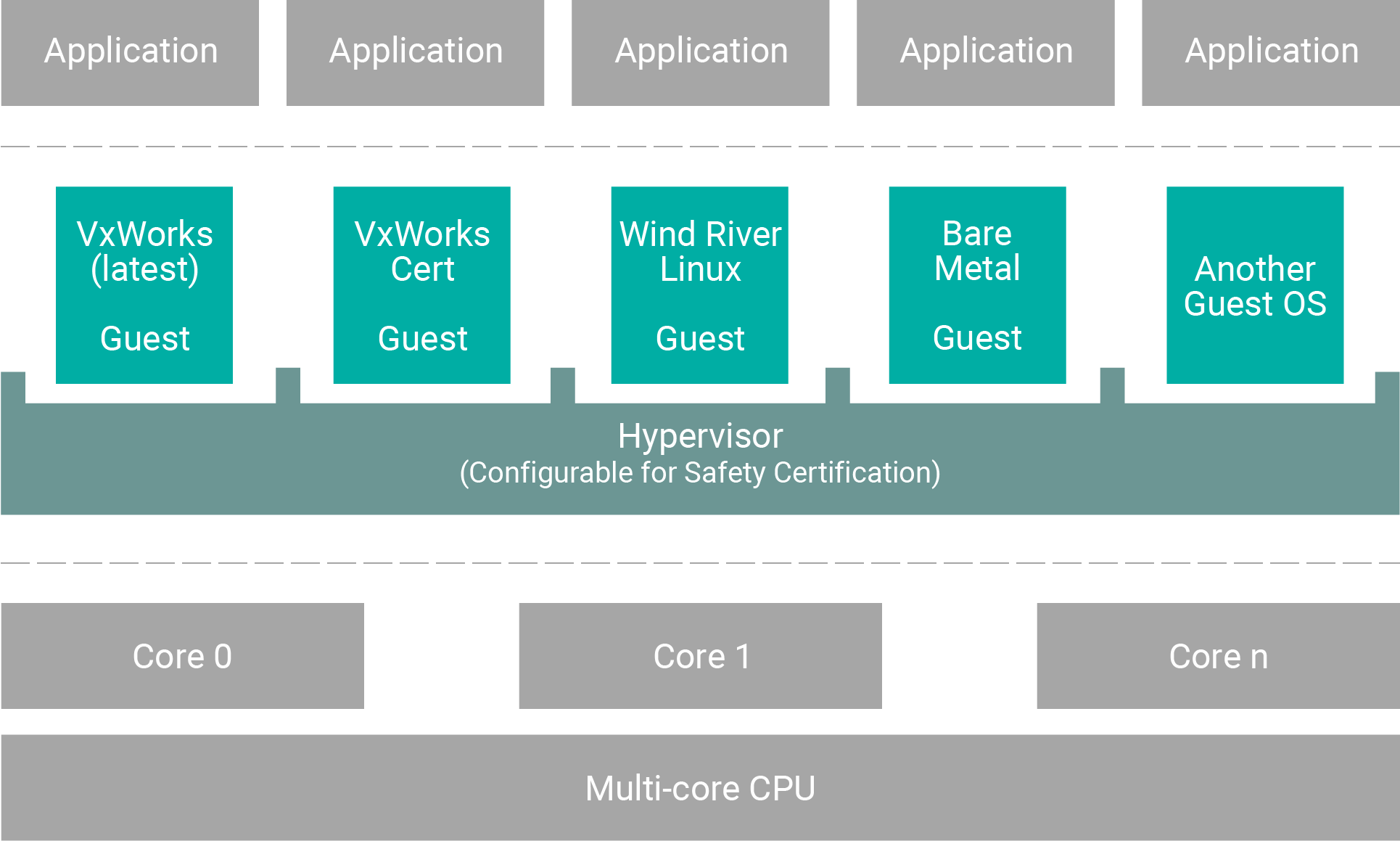

Figure 2. Helix Platform architecture

Virtualized OS Platform

Studio includes a virtualization solution powered by Wind River Helix™ Virtualization Platform, a safety-certifiable, multi-core, multi-tenant platform for mixed levels of criticality. It consolidates multi-OS and mixed-criticality applications onto a single edge compute software platform, simplifying, securing, and future-proofing designs in the aerospace, defense, industrial, automotive, and medical markets. It delivers a proven, trusted environment that enables adoption of new software practices with a solid yet flexible foundation of known and reliable technologies on which the latest innovations can be built.

As part of Studio, Helix Platform enables intellectual property and security separation between the platform supplier, the application supplier, and the system integrator. This separation provides a framework for multiple suppliers to deliver components to a safety-critical platform.

The platform provides various options for critical infrastructure development needs, from highly dynamic environments without certification requirements to highly regulated, static applications such as avionics and industrial systems. It is also designed for systems that mix safety-certified applications with noncertified ones, such as automotive systems. Helix Platform gives you flexibility of choice for your requirements today and adaptability for your requirements in the future.

Simulation/Digital Twin

Studio cloud-native simulation platforms allow you to create simulated digital twins of highly complex real-world systems for automated testing and debugging of complex problems. Digital twins allow teams to move faster and improve quality, easily bringing agile and DevOps software practices to embedded development.