What Is Distributed Cloud?

Distributed cloud is an architecture that enables providers to reach customers in a geo-distributed environment and offer them latency-sensitive and location-based services. It includes the centrally managed distribution of services that run on public cloud infrastructure, serving public as well as private, hybrid, or multi-cloud environments. Running a geo-distributed cloud helps you meet performance, compliance, and edge computing requirements.

Cloud Computing

Cloud computing refers to the on-demand availability of computer system resources, including storage and compute power, without active management by the user. It covers a range of networked elements, such as content, storage, databases, compute, and security, that may be accessed from the user’s computer, tablet, phone, or other system.

Is Cloud Computing a Distributed System?

Cloud computing can be a distributed system having functions distributed across multiple data centers. Sharing resources within a data center, and in some cases between data centers, allows the cloud service provider to pool resources and achieve economies of scale.

The Difference Between Distributed Computing and Cloud Computing

Cloud computing and distributed computing both benefit from resource pooling and on-demand resource availability, and they may both have distributed elements. But distributed cloud combines elements of the public cloud, a hybrid cloud, and edge computing to push compute power — and the advantages of cloud computing — closer to the user. As a result, the user can take advantage of use cases that are latency sensitive or location dependent, effectively increasing both the range and the use cases for the cloud.

Cloud Computing Types

| Private Cloud | Public Cloud | Hybrid Cloud |
|---|---|---|
| A private cloud is one that is created and run internally (privately) by an organization. It may also be purchased, stored, and run within an organization but managed by a third party. The key point is that it is computing that is dedicated to the organization. | Public cloud, in contrast to private cloud, has no physical infrastructure located or running internally. Data and applications are delivered by the internet, run externally to the organization. While the applications and data may be private, public cloud infrastructure is shared across organizations. | Hybrid cloud, as the name implies, creates an environment that uses both public and private clouds. Hybrid cloud is commonly used as a strategy to leverage the benefits of private cloud (i.e., secure data storage) coupled with the benefits of public cloud (resource pooling, proximity to users, and scalability). |

Distributed Cloud in Telecommunications

As communications service providers evolve their networks, one strategy is to disaggregate the network functions that previously existed in a purpose-built piece of equipment supplied by a telecom equipment manufacturer (TEM). This disaggregation, also known as decoupling, separates the components of hardware, infrastructure, and applications into their separate pieces. In order to effectively manage resources and workloads, these components are all part of a distributed cloud network. This disaggregated, distributed model is applicable to a number of edge use cases.

Radio Access Network (RAN)

The radio access network (RAN) is the network infrastructure used to connect wireless users to the equipment in a core network. It includes a radio unit (RU) that transmits, receives, and converts signals for the RAN base station. The baseband unit (BBU) then processes the signal information so it can be forwarded to the core network. Sending a message back to the user reverses this process. Since a mobile network needs multiple sites to be functional, this network could be considered distributed RAN (D-RAN) by default. Historically, these functions have been performed by equipment that was purpose built solely for those functions. Today the BBU is being broken up into a distributed unit (DU) and a central unit (CU), which allows for more flexibility in the RAN infrastructure design while reducing the cost of deployment. Distributed cloud architecture has a vital role to play.

Cloud RAN (C-RAN)

One variety of RAN is cloud RAN, or centralized RAN, which includes a centralized BBU pool, remote RU (RRU) networks, and transport. By locating a BBU pool at a central point, C-RAN provides RRUs the resources needed as well as OpEx and CapEx advantages through economies of scale.

Virtualized RAN (vRAN)

Virtualized RAN (vRAN) is a version of C-RAN that goes a step further by virtualizing the BBU pool or the DU-CU functions and running them as software on commercial off-the-shelf servers.

Open RAN (O-RAN)

The next step from vRAN is Open RAN, or O-RAN. While vRAN may be a proprietary solution with all components bought from the same vendor, Open RAN provides specifications to open the interfaces between the RRU and DU and between the DU and CU.

Multi-access Edge Computing (MEC)

Architecting and deploying virtualized and open RAN using a scalable distributed cloud infrastructure provides the foundation to layer in multi-access edge computing (MEC), formerly referred to as mobile edge computing. MEC provides cloud computing at the edge of the cellular (or any) network. Its compute resources enable low-latency, location-specific applications and reduce network congestion by performing processing tasks and running applications closer to the user.

Distributed Cloud Architecture

Layers of Distributed Cloud

In the context of a distributed cloud for an Open RAN network, there are three important layers:

Regional Data Center

Regional data centers are large edge data centers. They provide an aggregation point and are typically in or near large cities to serve a large population. Typically, a telecom data center is owned by a single telecommunications service provider, but in some cases the data center is run within a colocation data center. These centers are responsible for driving content delivery, cloud, and mobile services. The regional data center is connected to the edge cloud by a backhaul network.

Edge Cloud

The edge cloud is the site of a central regional controller that deploys and manages the various subclouds. This site runs the CU as well as other workloads such as analytics and the near-real-time RAN intelligent controller (RIC). It could include a centralized dashboard, security management, container image registry, lifecycle management, and other functions. The centralized edge cloud is connected to the far edge by the midhaul network.

Far-Edge Cloud

The far-edge cloud runs the DU and, as the network evolves, will need to be able to scale up and run the CU plus MEC applications. The far edge is connected to the RU through the fronthaul network.
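The three layers and the transport segments that link them can be summarized as a small data model. The following is a minimal Python sketch for illustration only: the `Site` class, its field names, and the `path_to_radio` helper are hypothetical, and the workload lists simply restate the descriptions above.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass(frozen=True)
class Site:
    name: str
    workloads: Tuple[str, ...]
    downlink: Optional[str]  # transport segment toward the radio unit, if any


# Ordered from the core side toward the radio. The final entry is the RU
# endpoint, which is not one of the three cloud layers.
SITES = (
    Site("regional data center",
         ("content delivery", "cloud services", "mobile services"), "backhaul"),
    Site("edge cloud", ("CU", "near-real-time RIC", "analytics"), "midhaul"),
    Site("far-edge cloud", ("DU",), "fronthaul"),
    Site("radio unit", ("RU",), None),
)


def path_to_radio(start: str) -> List[str]:
    """Return alternating site and transport names from `start` down to the RU."""
    names = [site.name for site in SITES]
    hops: List[str] = []
    for site in SITES[names.index(start):]:
        hops.append(site.name)
        if site.downlink is not None:
            hops.append(site.downlink)
    return hops


print(" -> ".join(path_to_radio("regional data center")))
```

Calling `path_to_radio("edge cloud")` walks midhaul, far-edge cloud, and fronthaul before reaching the radio unit, which mirrors the order in which the layers are described.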

Distributed Cloud Platform

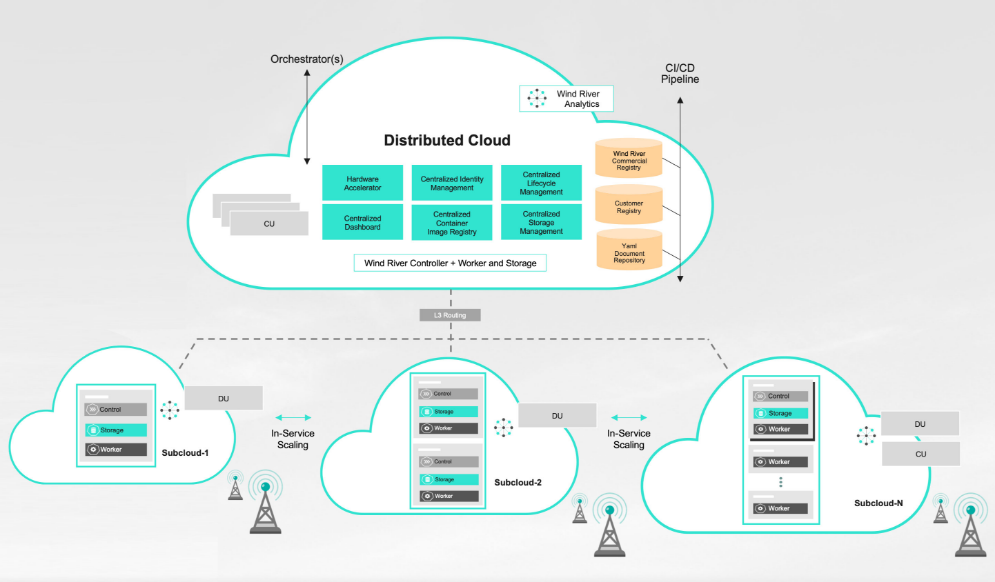

The diagram in Figure 1 shows how a scalable, disaggregated Open RAN architecture could be deployed using Wind River® Studio. The largest cloud represents the central, regional controller that lets the operator deploy and manage subclouds for the different edge and far-edge sites. The central controller runs workloads such as the CU, analytics, near-real-time RIC, etc. The smaller clouds represent the network subclouds that need to be able to scale from a very small single node at the far edge (left) to a multi-node edge site (right). The size and resources needed by the site are determined by the limitations of the physical site and by the need and ability to run workloads such as the DU, and in some cases the CU or perhaps MEC or other applications.

Figure 1. A scalable, disaggregated O-RAN architecture using Wind River Studio

Distributed Cloud Key Attributes

Reliability

Telecommunications networks require remarkably high uptime, typically 99.999% (five nines), which legacy equipment has delivered. As a distributed cloud O-RAN model is deployed, it is incumbent on each component of the solution to maintain that uptime. Because the availability of a service depends on every layer beneath it, the infrastructure must deliver better than five nines for service providers to meet their customer service-level agreements (SLAs).
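To make the five-nines figure concrete, the short Python sketch below converts an availability percentage into downtime per year and shows why a chain of components is less available than any one of them. It assumes independent failures and a simple series model, and the three-component stack is a hypothetical example rather than a measured system.

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600 minutes, ignoring leap years


def downtime_minutes_per_year(availability: float) -> float:
    """Expected downtime per year for a given availability (0.0 to 1.0)."""
    return (1.0 - availability) * MINUTES_PER_YEAR


def series_availability(*components: float) -> float:
    """Availability of a chain in which every component must be up.

    Assumes component failures are independent of one another.
    """
    result = 1.0
    for availability in components:
        result *= availability
    return result


five_nines = 0.99999
print(round(downtime_minutes_per_year(five_nines), 2))  # about 5.26 minutes

# Hypothetical stack: hardware, cloud infrastructure, and RAN application,
# each delivering exactly five nines on its own.
stack = series_availability(five_nines, five_nines, five_nines)
print(stack < five_nines)  # True: the chain falls short of five nines
```

Under these assumptions, three layers at exactly five nines each yield roughly 99.997% end to end, which is why each layer needs headroom above the service-level target.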

Low Latency

New 5G and edge use cases depend heavily on low-latency response times. Delivering low latency is a key driver for pushing compute to the edge. As with reliability, the infrastructure itself must deliver ultra-low latency so that most of the latency budget remains available for the application and hardware. Plus, in the case of distributed RAN, lower, deterministic DU latency means the service provider can pool resources more efficiently and get more coverage with fewer sites.
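The effect of distance on a latency budget can be estimated with simple arithmetic. The Python sketch below uses the common approximation of about 5 microseconds of one-way propagation delay per kilometer of optical fiber; the 10 ms budget and the two site distances are hypothetical values chosen only to illustrate the calculation, and queuing and processing delays are ignored.

```python
FIBER_DELAY_US_PER_KM = 5.0  # approximate one-way propagation delay in fiber


def round_trip_propagation_ms(distance_km: float) -> float:
    """Round-trip propagation delay over fiber, in milliseconds."""
    return 2 * distance_km * FIBER_DELAY_US_PER_KM / 1000.0


def remaining_budget_ms(total_budget_ms: float, distance_km: float) -> float:
    """Budget left for infrastructure, application, and hardware processing."""
    return total_budget_ms - round_trip_propagation_ms(distance_km)


# Hypothetical figures: a 10 ms end-to-end budget served from two candidate sites.
for site, distance_km in (("far-edge site", 20), ("distant data center", 800)):
    print(site, remaining_budget_ms(10.0, distance_km), "ms remaining")
```

With these assumed numbers, propagation alone consumes about 0.2 ms from the nearby site but about 8 ms from the distant one, leaving far less of the budget for everything else.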

Scalability (Dynamic Scaling)

A key driver for moving to a distributed cloud model is to enable flexibility in deployment and cost savings. A critical point in this pursuit is the ability for the network to grow as new services and capacity need to be added. Dynamic scaling allows edge sites to go from supporting a single DU to supporting multiple DUs, possibly a CU, plus MEC or other applications without needing to take the network down.

Flexibility

Distributed cloud gives the service provider a wide range of options for deploying their network, depending on need. They may only need a single node to run a far-edge site, or two nodes for high-availability applications. Or they may need additional subclouds at a given site to meet the demand. Flexibility also extends to the choice of technology provider. With an O-RAN distributed model, the service provider can swap out components of the solution as needed instead of being stuck with a single provider for everything.

Security

For a distributed cloud, integrated software security features will be specific to network edge environments where there may be limited physical security. Some examples of necessary security features include certificate management, Kata Containers support, and signed container image validation.

Disaggregation

Disaggregation refers to the decoupling of hardware, infrastructure, and application layers. Traditionally, telecommunication equipment was delivered with all components integrated into one machine. While these machines were exceptionally good at what they did, the service provider was locked into that vendor for all components, essentially for the life of the network. By disaggregating the components, the service provider can select best-of-breed components for specific needs. And when it comes time to add a new application or component, providers have the flexibility to add it, instead of needing to work with the original vendor to determine whether that component is even available.

Automation

Cloud computing is already complex, and when it becomes distributed and disaggregated, the complexity is multiplied. To keep control of OpEx, the service provider will rely on automation to perform tasks such as zero-touch infrastructure deployment, software patching, and hitless updates. Adding new services needs to be an automated procedure that allows the operator to select a configured application or set of applications and deploy them when and where they are needed automatically.
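One common way to automate software patching across many sites is a batched rollout that halts when a batch reports a failure. The Python sketch below illustrates that general pattern under stated assumptions: `rolling_update`, `batches`, and the `apply_update` callback are hypothetical names, and this is not a description of how any particular product performs its updates.

```python
from typing import Callable, Iterable, List


def batches(items: List[str], size: int) -> Iterable[List[str]]:
    """Yield consecutive slices of `items` with at most `size` entries each."""
    for start in range(0, len(items), size):
        yield items[start:start + size]


def rolling_update(sites: List[str],
                   apply_update: Callable[[str], bool],
                   batch_size: int) -> List[str]:
    """Update sites batch by batch and return those updated successfully.

    If any site in a batch fails, later batches are not attempted, so a bad
    patch reaches only a limited part of the network.
    """
    updated: List[str] = []
    for batch in batches(sites, batch_size):
        results = [(site, apply_update(site)) for site in batch]
        updated.extend(site for site, ok in results if ok)
        if not all(ok for _, ok in results):
            break
    return updated


# Hypothetical fleet of five far-edge sites in which "site-2" fails to update.
fleet = [f"site-{i}" for i in range(5)]
print(rolling_update(fleet, lambda site: site != "site-2", batch_size=2))
```

In this example the rollout stops after the second batch, so the last site is never touched until an operator or a higher-level policy decides how to proceed.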

Looking Forward: Opportunities and Revenue Potential

The right distributed edge cloud infrastructure is the cornerstone for successful 5G. Technology that meets the fundamental 5G requirements allows you to scale as needed without consuming an abundance of compute resources, and the fact that it can be managed effectively from a central location is critical. This is not going to be achieved by retrofitting legacy cloud technology that was intended for a data center.

Telco providers need solutions that include extremely high levels of automation for ease of deployment. They need analytics to know what is happening in the network, and single-pane-of-glass visibility in order to manage the network. They also need automation for zero-touch provisioning to ease the burden of managing the network, along with live software updates to keep networks up-to-date without interruption.

Operator Technology Challenges

Operators need to evolve their networks in order to drive new revenue from new services and new customers, and they must also keep their costs under control. When considering technology for edge use cases such as the RAN, there are three key factors they must consider:

Total Cost of Ownership

When considering the cost of a solution, the service provider needs to factor in not just the cost of each individual piece of technology but the cost of all the pieces combined: the total cost of ownership (TCO). This includes elements such as the radio unit, radio software, O-cloud hardware and software, rack space, power, etc. With thousands or tens of thousands of nodes needed for an edge network, maintenance and power costs multiply quickly. Studio resource consumption optimizations free up capacity at the edge so you can do more while spending less on power and maintenance.
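A rough calculation shows how a small per-node difference adds up across a large fleet. The Python sketch below is illustrative only: the node count, the 50 W per-node saving, and the electricity price are hypothetical inputs, not figures from any deployment.

```python
HOURS_PER_YEAR = 24 * 365  # 8,760 hours, ignoring leap years


def annual_energy_kwh(nodes: int, watts_per_node: float) -> float:
    """Energy used in one year by `nodes` nodes drawing `watts_per_node` each."""
    return nodes * watts_per_node * HOURS_PER_YEAR / 1000.0


def annual_power_cost(nodes: int, watts_per_node: float,
                      price_per_kwh: float) -> float:
    """Yearly electricity cost for the fleet at a flat price per kWh."""
    return annual_energy_kwh(nodes, watts_per_node) * price_per_kwh


# Hypothetical inputs: 10,000 edge nodes, 50 W saved per node, 0.10 per kWh.
saved_kwh = annual_energy_kwh(10_000, 50)
print(saved_kwh, "kWh saved per year")
print(annual_power_cost(10_000, 50, 0.10), "saved per year in currency units")
```

With these assumed inputs, a 50 W reduction per node amounts to 4,380,000 kWh per year across the fleet, which is why per-node resource consumption features so prominently in TCO discussions.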

Coverage Efficiency

One element of TCO is coverage efficiency. 5G operators need to deliver maximum coverage with the fewest possible hardware resources. Studio provides the needed compute for each coverage node, with fewer capacity investments thanks to enhanced resource utilization. This is achieved by industry-leading low latency, which enables greater coverage density with fewer sites.

Manageability

Managing hundreds or thousands of edge sites is not feasible without automation. Operators need automation and orchestration features to manage the extensive scale of a distributed cloud deployment. The zero-touch operations and rich orchestration/automation features included in Studio simplify management, even at 5G’s scale and density.

How Can Wind River Help?

Wind River provides a distributed cloud platform for Open and virtual RAN networks used by telecommunications companies. Those networks need to be flexible enough to easily scale as new use cases beyond O-RAN and vRAN are added (MEC, massive machine-type communications, IoT, and more).

Wind River Studio

Wind River Studio is the first cloud-native platform for the design, development, operations, and servicing of mission-critical intelligent edge systems that require security, safety, and reliability. Studio is architected to deliver digital scale across the full lifecycle through a single pane of glass to accelerate transformative business outcomes. Studio operator capabilities include an integrated cloud platform unifying infrastructure, orchestration, and analytics so operators can deploy and manage their intelligent 5G edge networks globally. The core operator capabilities of Studio include:

Wind River Studio Cloud Platform

Starting with a distributed cloud, Studio provides a production-grade Kubernetes cloud platform for managing edge cloud infrastructure. Based on the open source StarlingX project, Studio compiles best-in-class open source technology to deploy and manage distributed networks.

Wind River Studio Conductor

Studio provides one platform to achieve complete end-to-end automation. Pick the applications you need from an app catalog, deploy them to a carrier-grade cloud platform, and orchestrate the resources needed for the applications simply, intuitively, and logically. Scale from a handful of nodes to thousands of nodes in a geographically dispersed distributed environment.

Wind River Studio Analytics

Once a distributed cloud is deployed, Studio supports effective management of a distributed cloud system by consuming and processing data through machine-learning algorithms to produce meaningful insights for decision-making. With full-stack monitoring of the cloud infrastructure cluster and services, Studio collects, analyzes, and visualizes cloud behavioral data so you can keep your cloud up and optimized while reducing operational costs.