8 Factors for Effectively Using Simulation at the Intelligent Edge

Executive Summary

The software simulation market has been growing and evolving for more than a decade. The use of simulation in embedded software development helps organizations achieve faster time-to-market, lower development costs, and enhance safety features.

Eight critical factors influence the choice and use of simulation tools. In this paper, we discuss what developers should consider when deciding on a simulation process to accelerate timelines and advance an organization’s goals.

The Case for Simulation

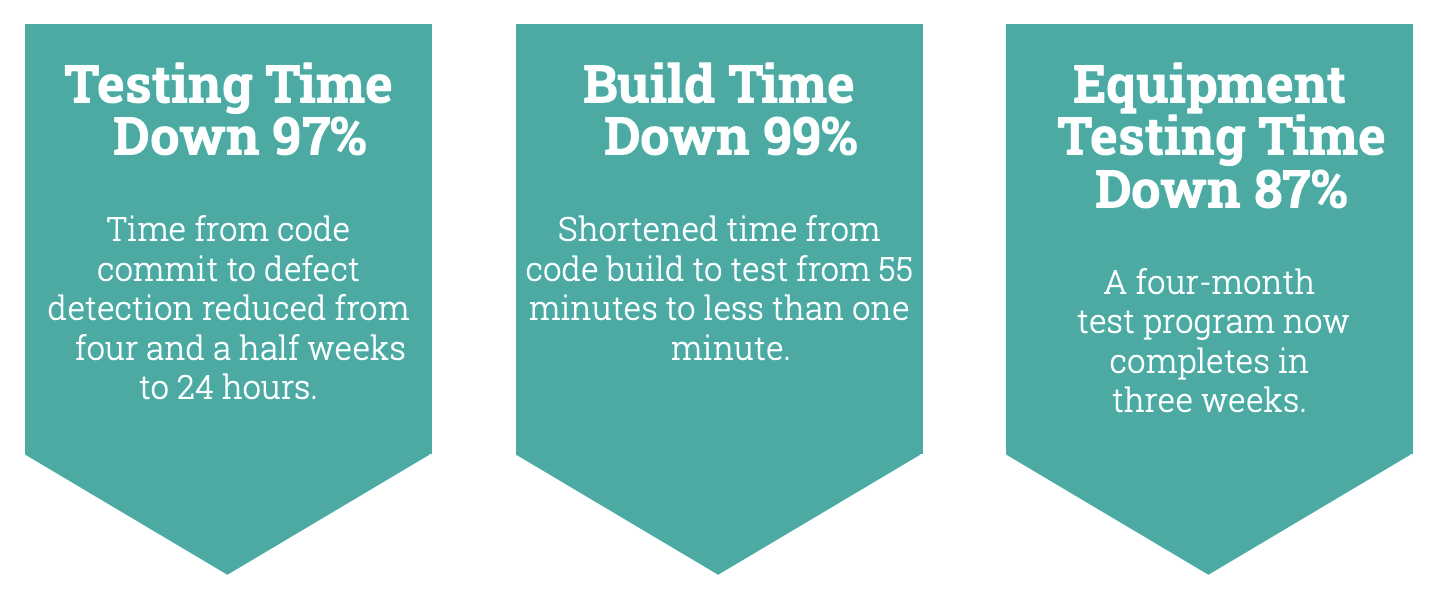

Imagine a typical four-month test program completed in just three weeks, or the average time from code commit to defect detection reduced by 97%, from 4.5 weeks to 24 hours. Imagine shortening the time from code build to test from 55 minutes to less than one. Or imagine a two-year test completed in three days, a 99% reduction.

These are all real results from customers who have leveraged today’s simulation technologies and capabilities.
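The percentages quoted above can be checked with simple arithmetic. The sketch below is only an illustration: the helper name `percent_reduction` is hypothetical, a year is assumed to be 365 days, and "less than one" minute is treated as exactly one minute, which makes that figure a lower bound.

```python
def percent_reduction(before: float, after: float) -> float:
    """Return the reduction from `before` to `after` as a percentage of `before`."""
    if before <= 0:
        raise ValueError("before must be positive")
    return (before - after) / before * 100.0


HOURS_PER_WEEK = 7 * 24

# Commit-to-defect-detection time: 4.5 weeks down to 24 hours.
defect_detection = percent_reduction(4.5 * HOURS_PER_WEEK, 24)

# Two-year test completed in three days (assumption: 365-day years).
long_test = percent_reduction(2 * 365, 3)

# Build-to-test time: 55 minutes down to one minute (assumption: the text
# says "less than one", so the true reduction is at least this large).
build_to_test = percent_reduction(55, 1)

print(f"defect detection: {defect_detection:.1f}%")
print(f"two-year test:    {long_test:.1f}%")
print(f"build to test:    {build_to_test:.1f}%")
```

Under these assumptions, the results round to the 97% and 99% figures cited in the text.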

Simulation is becoming a crucial element of efficient and nimble software development processes. It reduces CapEx and OpEx and decouples software and hardware. It helps software developers and designers identify and resolve issues early in the development cycle while simultaneously reducing development time and lowering costs.

When you’re using a virtual target in a simulation, there’s no need to book a lab or equipment, since teams can use the virtual target to test anything at any time. Producing digital twins through simulation allows continuous testing, and it allows faults to be inserted for testing without the risk of damaging hardware. Simulation also supports maintenance and upgrades of designs whose service life is so long that their embedded systems have outlived their market availability. Think of satellites orbiting Earth, for example.
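As a rough illustration of fault insertion on a virtual target, the sketch below models a temperature sensor in plain Python and injects faults that would be awkward or risky to reproduce on physical hardware. Everything here is hypothetical and not taken from any particular simulation product: the class `VirtualTemperatureSensor`, the fault names, the thresholds, and the `control_step` function standing in for the software under test.

```python
class VirtualTemperatureSensor:
    """Hypothetical device model: returns a reading unless a fault is injected."""

    def __init__(self, reading_c: float = 25.0):
        self.reading_c = reading_c
        self.fault = None  # None, "stuck", or "disconnected"

    def inject_fault(self, kind):
        """Switch the model into a faulty state; no real hardware is at risk."""
        self.fault = kind

    def read(self) -> float:
        if self.fault == "disconnected":
            raise IOError("sensor not responding")
        if self.fault == "stuck":
            return -40.0  # assumed out-of-range value for a stuck sensor
        return self.reading_c


def control_step(sensor: VirtualTemperatureSensor) -> str:
    """Toy software under test: pick a heater command or fall back to safe mode."""
    try:
        temperature = sensor.read()
    except IOError:
        return "SAFE_MODE"
    if not -20.0 <= temperature <= 85.0:  # assumed plausible operating range
        return "SAFE_MODE"
    return "HEAT_ON" if temperature < 20.0 else "HEAT_OFF"


sensor = VirtualTemperatureSensor()
nominal = control_step(sensor)

sensor.inject_fault("stuck")
stuck = control_step(sensor)

sensor.inject_fault("disconnected")
disconnected = control_step(sensor)

print(nominal, stuck, disconnected)
```

Because the fault lives in the model rather than in a physical device, the same scenario can be repeated on every build without lab time.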

These and many other use cases make a strong case for using simulation tools.

Three Approaches to Simulation

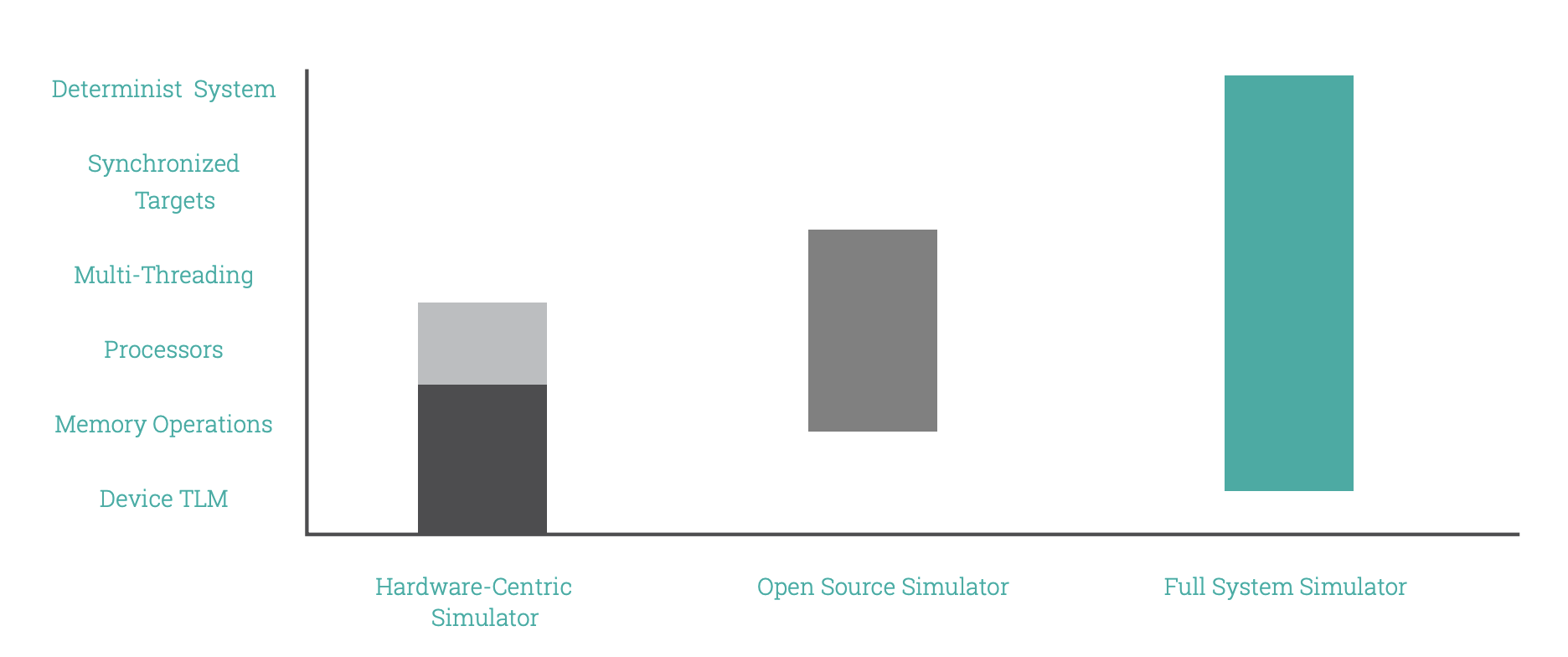

Simulation tools take one of three different approaches, so it’s important to understand how each supports your goals.

1. Hardware-centric Simulator:

This approach builds from the bottom up, creating a detailed device model by simulating memory operation, with a third-party processor instruction set simulator on top. Hardware-centric simulators are typically high-fidelity. One downside, however, is that they run slowly.

2. Full-System Simulator:

A full-system simulator takes a top-down approach. It can simulate multiple targets synchronously to create your own version of digital twins. It is software-centric, taking advantage of multi-threading, and it simulates the processor as well as the memory operation. The disadvantage, however, is that full-system simulations don’t come down to the low-level device or cycle-accurate simulation level.

3. Open Source Simulator:

Sitting somewhere between the hardware-centric simulator and the full-system simulator is the open source simulator. It is designed to simulate a single-board system. It does not cover the system level as a full-system simulator would, nor does it have the high fidelity of the hardware-centric simulator. However, the source code is available for anyone to view, modify, and redistribute.
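To make the idea of simulating multiple targets synchronously (the second approach) more concrete, the sketch below advances several virtual targets in lock step using a fixed time quantum. This is one simple way to keep targets aligned in virtual time, assumed here for illustration; it does not describe how any specific simulator is implemented, and the names `VirtualTarget` and `run_lockstep` are hypothetical.

```python
class VirtualTarget:
    """Hypothetical target model that tracks virtual time and executed cycles."""

    def __init__(self, name: str, cycles_per_us: int):
        self.name = name
        self.cycles_per_us = cycles_per_us
        self.virtual_time_us = 0
        self.cycles_executed = 0

    def run_for(self, quantum_us: int) -> None:
        """Advance this target by one time quantum of virtual time."""
        self.cycles_executed += self.cycles_per_us * quantum_us
        self.virtual_time_us += quantum_us


def run_lockstep(targets, total_us: int, quantum_us: int) -> int:
    """Advance all targets quantum by quantum so none runs ahead of the others."""
    elapsed = 0
    while elapsed < total_us:
        step = min(quantum_us, total_us - elapsed)
        for target in targets:
            target.run_for(step)
        elapsed += step
        # After each quantum, every target sits at the same virtual time.
        assert len({t.virtual_time_us for t in targets}) == 1
    return elapsed


targets = [
    VirtualTarget("controller", cycles_per_us=100),
    VirtualTarget("sensor_node", cycles_per_us=16),
]
elapsed_us = run_lockstep(targets, total_us=1000, quantum_us=300)
for t in targets:
    print(t.name, t.virtual_time_us, t.cycles_executed)
```

A smaller quantum keeps the targets more tightly aligned at the cost of more synchronization points, which is the usual trade-off in this style of scheduling.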

To choose the right simulation tools, developers should consider eight important factors: identifying objectives, understanding simulation needs, understanding users and collaborators, understanding ease of use, identifying the models used in simulation, considering portability and scalability, understanding basic vs. advanced features, and selecting a simulation platform based on budget and resources.

References

- GlobeNewsWire, “Simulation Software Market Size to Surpass USD 40.5 Bn by 2030,” 2022, https://www.globenewswire.com/en/news-release/2022/09/23/2521905/0/en/Simulation-Software-Market-Size-to-Surpass-USD-40-5-Bn-by-2030.html