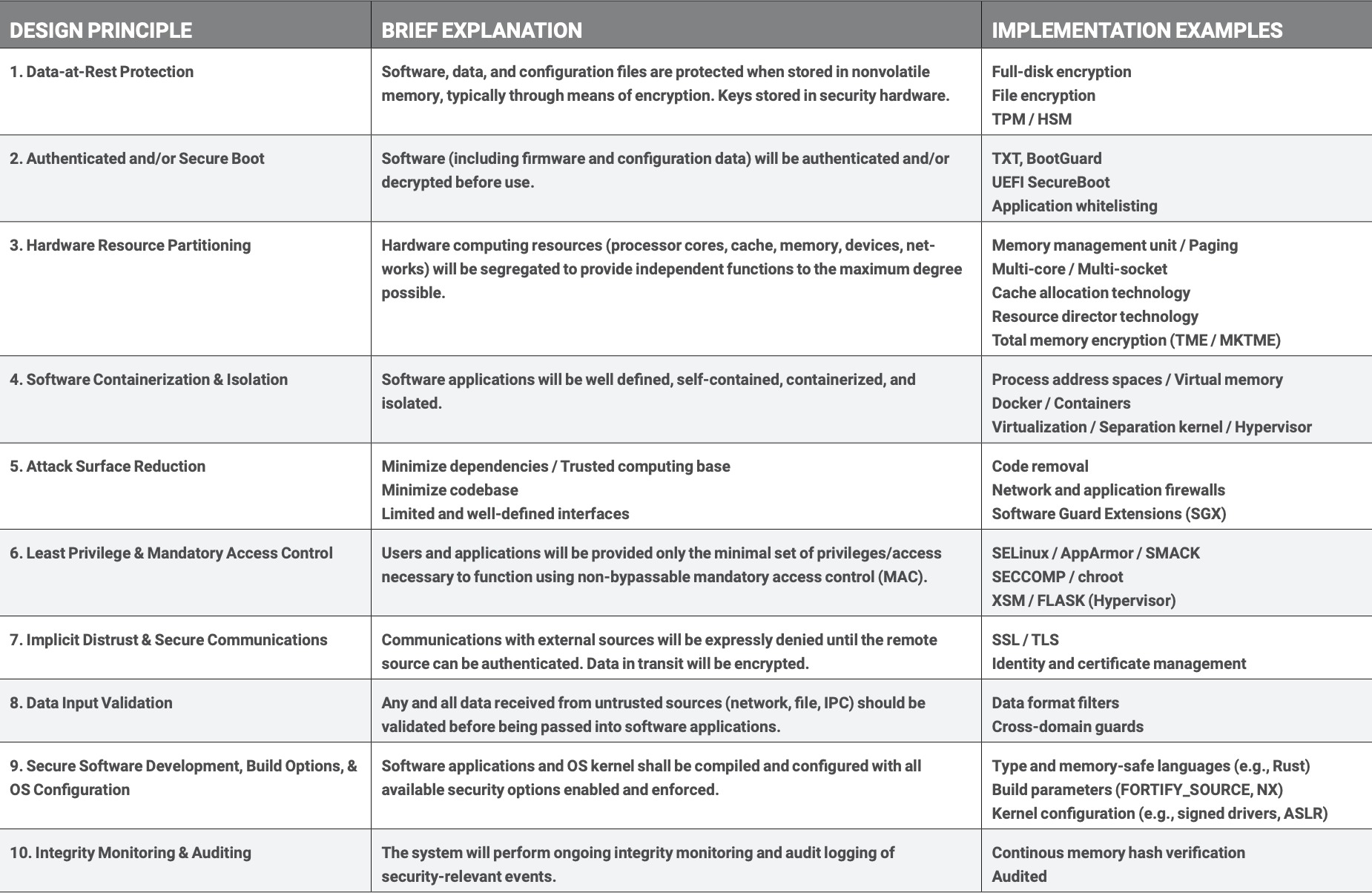

10 Properties of Secure Medical Systems

Protecting Can’t-Fail Embedded Systems from Tampering, Reverse Engineering, and Other Cyberattacks

It’s Not a Fair Fight

An attacker needs only one vulnerability in an intelligent edge medical system to put patient health at risk.

Security requirements for medical software present a growing challenge as devices move from stand-alone systems or private networks into cloud operations. Intelligent systems offer rewards, but they also introduce risks. Among these risks are the increasing efforts of outside actors to exploit medical devices as entry points for ransomware and other attacks. Worse yet, an attacker may try to use a compromised device to go further, pivoting from one exploited subsystem to another and jeopardizing patient health while causing further damage to the device company’s network, mission, and reputation.

This white paper covers the most important security design principles that, if adhered to, give you a fighting chance against any attacker who seeks to gain unauthorized access to, reverse engineer, steal sensitive information from, or otherwise tamper with your embedded medical system.

The beauty of these 10 principles is that they can be layered together into a cohesive set of countermeasures that achieve a multiplicative effect, making medical device exploitation significantly more difficult and costly for the attacker.

Data-at-Rest Protection

Secure Boot

Hardware Resource Partitioning

Software Containerization and Isolation

Attack Surface Reduction

Least Privilege and Mandatory Access Control

Implicit Distrust and Secure Communications

Data Input Validation

Secure Development, Build Options, and System Configuration

Integrity Monitoring and Auditing

No One Property to Rule Them All

Contact us if you are interested in learning how these 10 properties can be applied to your use case.

References

- “Connected Medical Device Market: Growth, Trends, COVID-19 Impact, and Forecasts (2021–2026),” Mordor Intelligence, 2021

- Patricia A.H. Williams and Andrew J. Woodward, “Cybersecurity Vulnerabilities in Medical Devices: A Complex Environment and Multifaceted Problem,” PubMed Central, U.S. NIH, 2015

- www.linuxjournal.com/content/take-control-your-pc-uefi-secure-boot

- ruderich.org/simon/notes/secure-boot-with-grub-and-signed-linux-and-initrd

- software.intel.com/content/www/us/en/develop/articles/intel-trusted-execution-technology-intel-txt-enabling-guide.html

- www.starlab.io/titanium-product

- meltdownattack.com

- www.docker.com

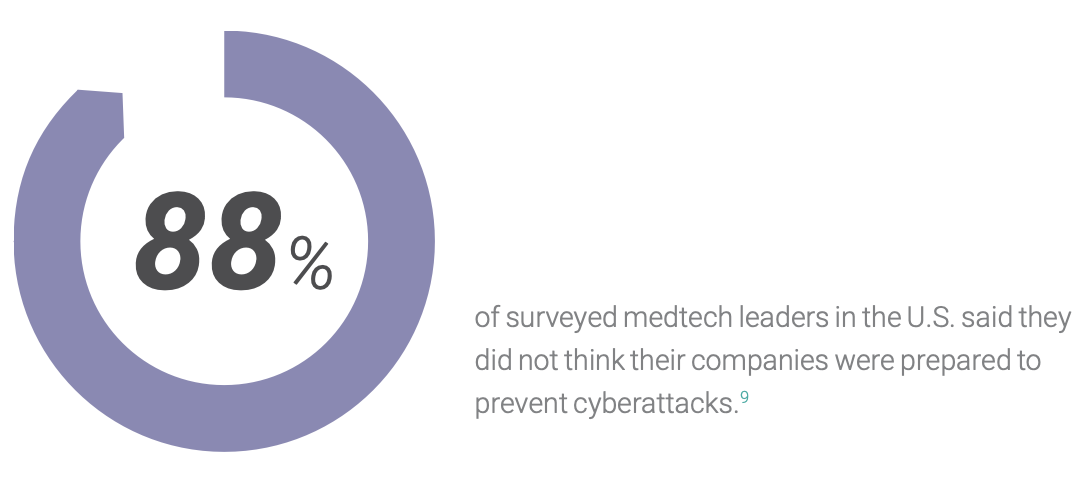

- Maria Fontanazza, “IoMT, Connected Devices Introduce More Cyber Threats into Med Tech and Healthcare Organizations,” MedTech Intelligence, July 12, 2021

- General Principles of Software Validation; Final Guidance for Industry and FDA Staff, U.S. Department of Health and Human Services, Food and Drug Administration, January 2002

- www.websecurity.digicert.com/security-topics/what-is-ssl-tls-https

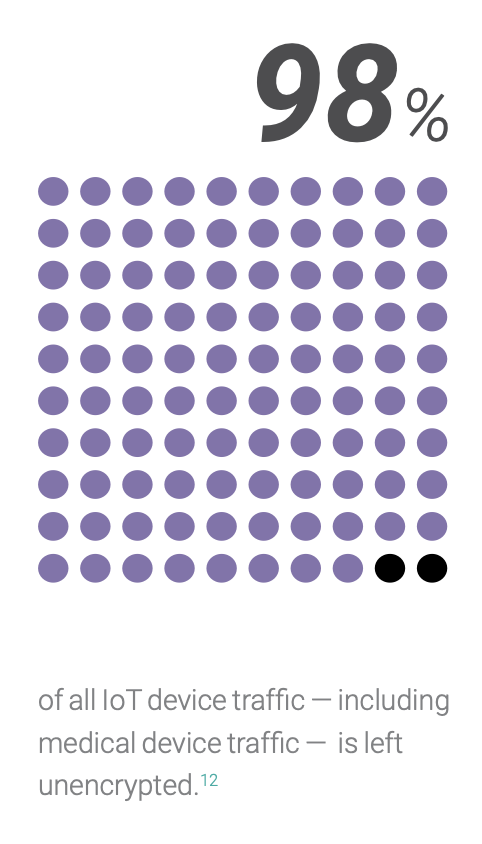

- IoMT, Connected Devices Introduce More Cyber Threats into MedTech and Healthcare Organizations,” MedTech Intelligence, July 12, 2021

- www.imperva.com/learn/application-security/buffer-overflow

- security.stackexchange.com/questions/24444/what-is-the-most-hardened-set-of-options-for-gcc- compiling-c-c

- General Principles of Software Validation; Final Guidance for Industry and FDA Staff, U.S. Department of Health and Human Services, Food and Drug Administration, January 2002