Accelerating the Deployment of Critical Infrastructure Edge Services Through the StarlingX Project

EXECUTIVE SUMMARY

The StarlingX project provides an open source, production-grade, distributed Kubernetes platform for managing edge cloud infrastructure. Originating from proven code that has been widely deployed, StarlingX is a top-level open infrastructure project sponsored by the OpenInfra Foundation. Designed to deliver the reliable uptime, performance, security, and operational simplicity that distributed edge cloud solutions require, StarlingX solves the operational problem of deploying and managing distributed networks.

Edge platforms that can provide extremely low latency, high availability, and simplified management will be key to enabling new business opportunities and innovative applications across multiple critical infrastructure market segments. Today, typical edge cloud use cases include multi-access edge computing (MEC), universal customer premises equipment (uCPE), virtual customer premises equipment (vCPE), virtualized radio access network (vRAN), and the Industrial Internet of Things (IIoT). As the industry moves beyond these traditional functions, StarlingX will also be used to power new business opportunities such as transportation, augmented reality, telemedicine, and advanced drones.

For organizations that require full lifecycle support and services around an open source solution, Wind River® Studio Cloud Platform is a commercially supported version of StarlingX. With Cloud Platform, Wind River provides value-added services to ensure our customers’ success using the most advanced open source edge cloud solution available.

NEW BUSINESS OPPORTUNITIES IN EDGE SERVICES

In the telecom market, communications service providers (CSPs) worldwide are increasingly viewing applications hosted at the network edge as compelling business opportunities. Edge applications present opportunities to sell new kinds of services to new kinds of customers, while revenue from traditional broadband and voice services is essentially flat. This trend provides the potential for increased market penetration, as well as improved average revenue per user (ARPU) for those CSPs who can be early to market with attractive offerings.

Some examples of telecom-oriented edge-hosted applications and functions that are generating wide interest are vRAN, Open RAN, uCPE, vCPE, and MEC.

By bringing content and applications to data centers in the radio access network (RAN), MEC allows service providers to introduce new types of services that are unachievable with cloud-hosted architectures because of latency or bandwidth constraints. Specific new business opportunities enabled by MEC include applications such as:

- Small-cell services for stadiums and other high-density locations: By deploying applications hosted at the network edge (i.e., in the stadium itself), stadium owners and service providers can offer a wealth of integrated services that include real-time delivery of personalized content to fans’ devices. In this case, MEC also minimizes backhaul loading because the new traffic is both generated and delivered locally.

- Augmented reality (AR), virtual reality (VR), and tactile Internet applications: These applications are just not viable without superfast response times, local image analytics, and deterministic low-latency communications. Use cases such as remote medical diagnostics and telesurgery will demand millisecond response times, far quicker than those achievable by round-trip communications with a remote cloud data center.

- Vehicle-to-everything (V2X) communication: Whether the use case is vehicle-to-vehicle or vehicle-to-infrastructure, it requires high bandwidth, low latency, guaranteed availability, and robust security — performance requirements that are impossible to meet with a centralized, cloud-hosted compute model.

- Mobile HD video and premium TV with end-to-end quality of experience (QoE): Content providers have learned the hard way that video quality is critical for subscriber retention: Studies show that a one-second rebuffering event during a 10-minute premium service clip causes a 43% drop in user engagement. MEC allows service providers to optimize video content, enabling a superior user experience, as well as smarter utilization of network resources, and ensuring a fast start and smooth delivery.
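The latency argument behind these use cases can be made concrete with a rough propagation-delay calculation. The sketch below is illustrative only: it assumes light travels about 200 km per millisecond in optical fiber, and the two distances (10 km and 1,500 km) are hypothetical values chosen for the example, not figures from any deployment. It ignores queuing, switching, and processing time, so real round trips take longer.

```python
# Illustrative only: the fiber speed is an approximation and the distances
# are hypothetical, not measurements from any deployment.
SPEED_IN_FIBER_KM_PER_MS = 200.0  # roughly two-thirds of the speed of light in vacuum


def round_trip_propagation_ms(distance_km: float) -> float:
    """Return the round-trip propagation delay over fiber, in milliseconds.

    Queuing, switching, and processing delays are ignored, so this is a
    lower bound on real network latency.
    """
    if distance_km < 0:
        raise ValueError("distance_km must be non-negative")
    return 2 * distance_km / SPEED_IN_FIBER_KM_PER_MS


# Hypothetical sites: an edge node 10 km away and a cloud region 1,500 km away.
for site, distance_km in [("edge site", 10), ("remote cloud data center", 1500)]:
    delay_ms = round_trip_propagation_ms(distance_km)
    print(f"{site}: {delay_ms:.2f} ms round trip")
```

Under these assumptions, distance alone accounts for a round trip of many milliseconds to the remote data center before any processing takes place, which is why applications with millisecond budgets are hosted at the edge.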

Applicable to both enterprise and residential scenarios, uCPE and vCPE deployments replace physical hardware appliances traditionally located at the customer premises that provide connectivity, security, and other functions. By deploying general-purpose compute platforms based on industry-standard servers, either locally at the customer’s premises (uCPE) or in a data center (vCPE), service providers can remotely instantiate, configure, and manage functions that were once deployed on dedicated hardware platforms. This virtualization of CPE functions reduces operational expenses (OPEX) through more efficient utilization of compute resources, through increased agility in the deployment of services, and through the elimination of “truck rolls” required to update and maintain equipment at remote locations. CSPs can also grow top-line revenue and increase margins by allowing customers to self-provision their services, for example by configuring higher-bandwidth connectivity, enhancing security features, or adding options such as high-end video with cloud-based digital video recording.

Other edge-hosted applications and functions bring operational cost reductions as their primary business benefit. RAN virtualization is a good example.

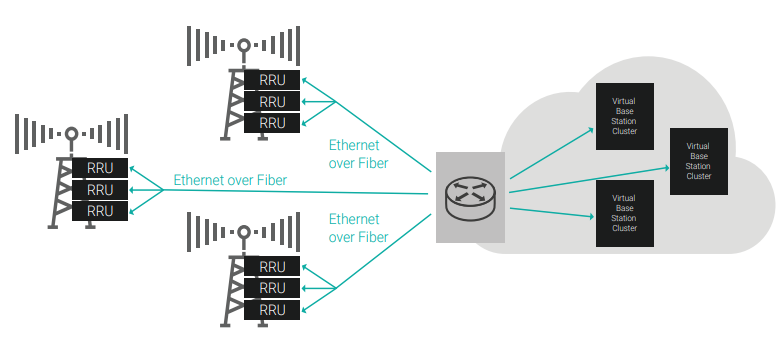

In vRAN architecture, the baseband units (BBUs) are virtualized, rather than being located at the cell site as physical equipment. The virtual BBUs are deployed on software platforms running on industry-standard servers and consolidated in centralized data centers, while the remote radio units (RRUs) remain at the cell sites at the edge of the network. By leveraging standard server hardware that cost-effectively scales processor, memory, and I/O resources based on dynamic changes in demand, vRAN infuses the RAN with capacity for application intelligence, which significantly improves service quality and reliability. With vRAN, service providers can achieve a combination of cost savings, dynamic capacity scaling, better QoE, and rapid instantiation of new services.

In the industrial market, IIoT applications represent new business opportunities for several categories of companies. Use cases such as smart cities, smart buildings, connected cars, robotics, and process control all require the aggregation of large numbers of data streams in an IIoT gateway prior to analytics performed either on premises or in the cloud.

IIoT offers the potential to sell new kinds of services (asset monitoring, analytics, business processes, etc.) to new kinds of customers (manufacturing facilities, car dealers, city governments, hospitals, etc.). Recognizing these new business opportunities, many CSPs have established vertically oriented service delivery teams focused on these opportunities.

Many of these IIoT services must be hosted at the network edge to enable ultra-low latency connectivity (process control), to perform on-premises analytics (patient monitoring), or to minimize backhaul traffic (video surveillance). Edge compute solutions are therefore a key requirement as companies exploit business opportunities in IIoT.

Traditional control applications also leverage edge compute solutions as critical infrastructure companies look to slash their operational costs by deploying secure, robust, flexible software-based solutions as alternatives to legacy, fixed-function hardware. Control systems installed since the 1980s present major business challenges, such as increasing OPEX due to high maintenance and replacement costs plus a dwindling pool of skilled technicians, limited flexibility resulting from sole-sourced solutions with proprietary programming models, and outdated box-level security features with no provision for end-to-end threat protection or dynamic updates.

These challenges can be addressed by OpenStack-based virtualization solutions. As in the case of new IIoT applications, many of these traditional control services require edge solutions in order to guarantee ultra-low latency response times or to perform on-premises compute functions.

CHALLENGES FOR EFFECTIVE DEPLOYMENT OF EDGE SERVICES

Companies planning the deployment of edge services must select solutions that address key technical and business challenges:

- Many edge applications require ultra-low latency communications with the devices that they serve, recognizing that the “device” could be a smartphone, a tablet, a vehicle, an industrial controller, a set of virtual reality glasses, a TV, or any of a wide range of end points that are emerging as new services are planned and deployed.

- The type of edge applications mentioned above typically need to be deployed on low-cost, low-power servers that are priced appropriately for small branch offices and other remote locations.

- To avoid costly truck rolls and service calls, the infrastructure software platforms need to support automatic installation, provisioning, and maintenance, while communicating with a centralized orchestrator.

- Edge computing applications are often installed by the end users themselves, in unattended, open environments away from cloud data centers, central offices, or points of presence (PoPs). These factors present unique security risks that are greater than in the case of services hosted in the tightly controlled environment of the core network.

- Some of these use cases are targeted at consumers who may have been conditioned to expect less-than-perfect service availability. Many, however, represent either enterprise or industrial business opportunities where guaranteed uptime is a hard requirement and there are significant financial impacts associated with any unplanned downtime, even during maintenance or update operations. Service continuity and service-level agreements (SLAs) are critical factors as service providers develop their strategies for deploying edge applications.

- To avoid any risk of vendor lock-in and to maximize their flexibility in vendor selection, critical infrastructure companies have developed a strong preference for edge infrastructure solutions that are based on open source software and that have been proven to be fully compatible with open industry standards.

Recognizing that no single open source project existed to address all these challenges, on May 21, 2018, the StarlingX project was initiated. StarlingX comprises services that enable highly reliable applications and services to be deployed at the network edge.

StarlingX plays a key role in several of the focus areas of the OpenInfra Foundation, which include:

- Edge computing

- Container infrastructure

- Public/private hybrid cloud

- AI and machine learning

- CI/CD

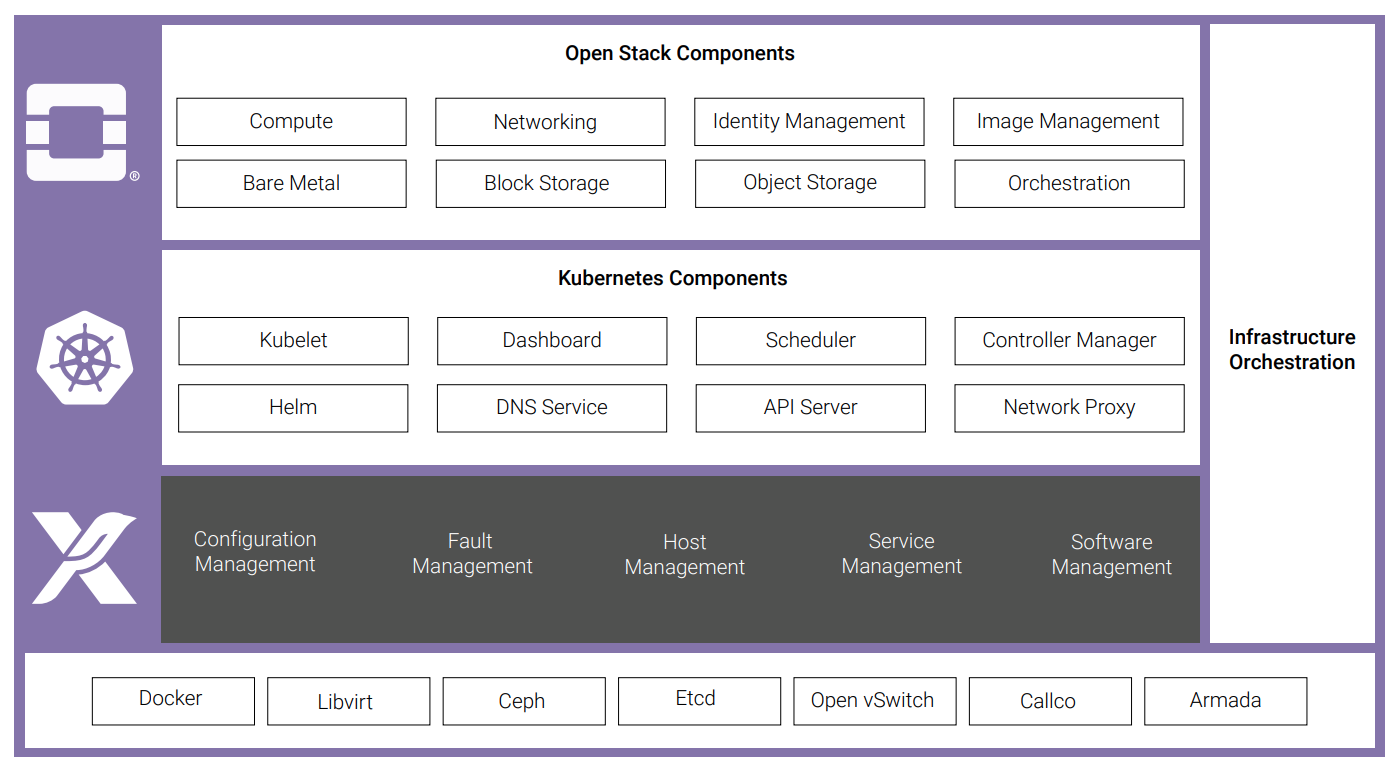

Because StarlingX is an OpenInfra project, edge deployments based on it automatically and seamlessly leverage updates to the mainline OpenStack code base. StarlingX adds the following new services:

- Configuration management: Managing installation, nodal configuration, and inventory discovery.

- Host management: Providing full lifecycle management of the host, detecting and automatically handling host failures, initiating recovery, and providing extensive monitoring and alarms.

- Service management: Ensuring high availability through redundancy and active or passive monitoring of services.

- Software management: Automating the deployment of software updates for security and/or new functionality; both in-service and reboot-required patches are supported.

- Fault management: Providing a framework through which infrastructure services raise and clear alarms and log events via API.

To support low-latency edge applications and functions such as MEC, uCPE, vCPE, vRAN, and IIoT, StarlingX delivers to containers and guest VMs an ultra-low average interrupt latency of 3 µs, leveraging a low-latency configuration of the integrated KVM hypervisor.

StarlingX helps critical infrastructure companies minimize their operations costs, typically saving millions of dollars in installation, commissioning, and maintenance compared to roll-your-own (RYO) solutions. The platform is delivered as a single, preintegrated image that is installed with no manual intervention, and the intelligent orchestrated patching engine allows up to hundreds of nodes to be upgraded quickly, minimizing the length of maintenance windows.
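One way to picture why orchestrated patching shortens maintenance windows is to group nodes into waves that are upgraded in parallel. The sketch below is a generic illustration under stated assumptions, not the StarlingX orchestration API: the function `plan_upgrade_waves`, the node names, and the limit of 25 nodes per wave are all hypothetical.

```python
from typing import Iterator, List, Sequence


def plan_upgrade_waves(nodes: Sequence[str], max_parallel: int) -> Iterator[List[str]]:
    """Yield successive groups of nodes, each holding at most max_parallel nodes.

    Hypothetical helper for illustration; it only plans the grouping and
    does not contact or modify any node.
    """
    if max_parallel < 1:
        raise ValueError("max_parallel must be at least 1")
    for start in range(0, len(nodes), max_parallel):
        yield list(nodes[start:start + max_parallel])


# Hypothetical fleet of 200 edge nodes, upgraded at most 25 at a time.
nodes = [f"edge-node-{i:03d}" for i in range(1, 201)]
waves = list(plan_upgrade_waves(nodes, max_parallel=25))
print(f"{len(nodes)} nodes planned in {len(waves)} waves")
```

If every node takes roughly the same time to patch, the length of the maintenance window in this model grows with the number of waves rather than with the number of nodes. A real orchestrator would also have to respect redundancy constraints, such as never taking both members of a redundant pair out of service in the same wave.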

The unique security risks of edge applications are addressed in StarlingX by a comprehensive set of end-to-end security features. These include Unified Extensible Firmware Interface (UEFI) secure boot, cryptographically signed images for host protection, virtual Trusted Platform Module (TPM) device for highest-security VM deployments, secure API access and control, and secure keyring database for storage of encrypted passwords. Collectively, these features and others ensure that edge applications running on StarlingX are protected against threats, wherever they originate.

WIND RIVER AND STARLINGX

Wind River is a key contributor to the StarlingX project. For more than 35 years, Wind River has served customers in markets with the highest standards for reliability, security, and performance. This makes Wind River uniquely positioned to play an active role in helping to shape the edge infrastructure of the future. It also means we are well equipped to help our customers with commercial deployments of StarlingX.

Cloud Platform is based on StarlingX. When customers use Cloud Platform for a commercial deployment, Wind River ensures that they not only get the most advanced open source distributed edge cloud platform but also receive value-added services in the following areas:

A Ruggedized Product That Meets the Customer’s Specific Requirements

Wind River will conduct complete carrier-grade testing to uncover and address issues that could cause problems in a production environment. Along with this testing, we will also configure the platform for optimal performance and test it on a representative set of platforms specific to our customer’s needs.

Lifecycle Management and Long-Term Support

Open source community support may be too limited to cover the lifecycle of a commercial deployment. Wind River will support the product over two to three years and will add extended support as needed for a longer term. This support includes:

- In-service upgrades: The community supports upgrading one version at a time. Wind River makes it easy to upgrade across multiple versions, with comprehensive testing to ensure that everything goes smoothly.

- Security updates: Monitoring and updates ensure that whatever supported version the customer is on remains secure.

- New feature updates: If a customer needs a new feature, it can be backported from current versions of StarlingX to the customer’s version as needed.

- Bug fixes: Fixes are prioritized in the current version of StarlingX.

Building Customer-Specific Capabilities

As an active community member, Wind River can advocate for the customer to introduce new feature requests upstream within the current version of StarlingX. If a customer wants a unique feature specific to them, we can build and support a custom feature exclusively for them. Once the customer determines that a feature no longer needs to be exclusive, Wind River can submit the feature for addition to StarlingX for the benefit of the community.

CONCLUSION

StarlingX is the most advanced open source edge infrastructure available. The project includes functionality that has been proven to solve critical challenges associated with the reliability, performance, security, deployment, and lifetime operation of edge cloud applications. Wind River is committed to supporting the success and evolution of StarlingX to enable high-value edge use cases, now and in the future.

For current edge cloud applications, StarlingX will enable critical infrastructure companies to boost their top-line revenue while minimizing their operational costs. Looking ahead over the next few years as the industry moves beyond traditional telecom functions, companies will leverage StarlingX to capitalize on new business opportunities enabled by upcoming technologies, such as connected vehicles, augmented reality, telemedicine, and advanced drones. Edge deployments based on the components in StarlingX automatically and seamlessly leverage updates to the mainline OpenStack code base.

Wind River is focused on accelerating massive innovation and disruption at the network edge through this important industry initiative. As a commercial deployment of StarlingX, Cloud Platform will be key to enabling new business opportunities and innovative applications across multiple market segments.