Aerospace Tech Week Europe 2024: The flight path for AI

Last week, I attended the Aerospace Tech Week Europe 2024 conference, which included many talks and several panel discussions on aerospace industry challenges, regulations and technology trends. It was interesting to note the high-level themes recurring from previous years, such as sustainability, connectivity and cybersecurity, and also the topics where the discussion had shifted markedly.

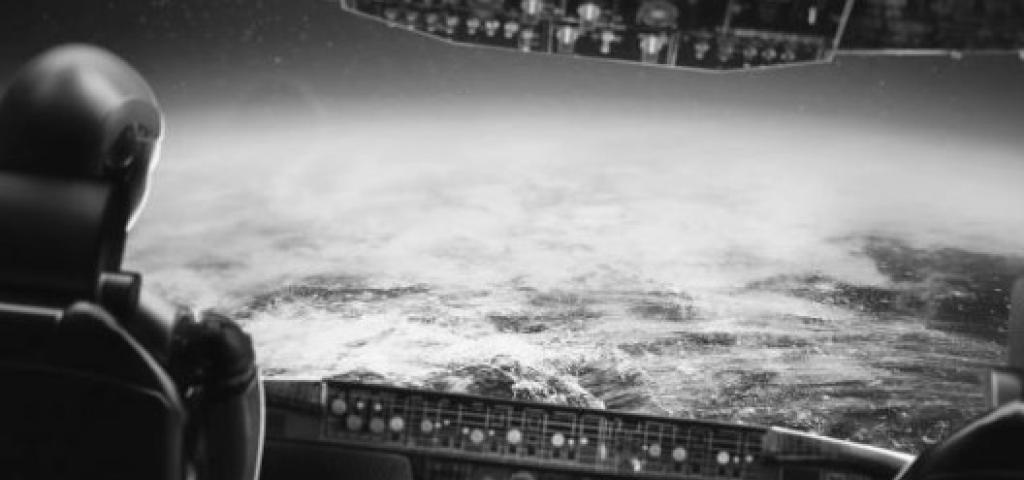

In 2023, there had been a lot of discussion around artificial intelligence (AI) and machine learning (ML) in multiple contexts, ranging from data analysis in the cloud for predictive maintenance to the potential use of AI in the cockpit and, eventually, in AI pilot systems (which I discussed in a blog post, "Would you trust an AI pilot?").

Fast forward to 2024, and the conference discussions about AI had moved on from potential use cases to reporting progress on standards for developing AI in aerospace applications and on the route to certification and deployment.

Anna von Groote, Director General of EUROCAE (European Organisation for Civil Aviation Equipment), gave an informative and insightful presentation on the collaboration undertaken with SAE International and EASA (European Union Aviation Safety Agency). This involved multiple working groups formed from across the aerospace sector, which had defined a statement of concerns, needs, use cases, and processes for development and certification/approval. These have received input and feedback from around 600 individuals across the sector, and EUROCAE plans to publish AI standards in 2024.

Guillaume Soudain, Programme Manager at EASA, gave an update on the EASA AI Roadmap, highlighting the availability, earlier in March 2024, of Concept Paper Issue 2, which contains a first set of objectives that should trigger the first AI certifications as early as 2024 for Level “Assistance to Human” Systems.

Although the initial AI use cases may not involve an OS, it is interesting to consider that the planned avionics safety certification of AI could potentially converge in the future with the avionics safety certification of Linux, which is being investigated by the ELISA Project (as discussed in a conference presentation by my Wind River colleague Olivier Charrier). If these are both successful, it could be very significant, given that many AI-based systems involve an application running on Linux in conjunction with AI on a GPU.

Dr. Thomas Krüger of DLR (German Aerospace Center) gave an interesting talk on development challenges and processes for AI applications for avionics. This included the challenges of incorporating AI into model-based design (MBD) architectures, designing learning algorithms with increased explainability and robustness, resilience and failure response capabilities, and certification. His talk highlighted the issue of unclear requirements and how difficult it was to state ethical questions as requirements and apply them to the use case. DLR's conclusions were that, for AI to be used in safety-critical aerospace applications, it must also be secure against attacks and misuse. In addition, in DLR's view, the use of AI will increase system complexity, which will in turn have an impact on safety certification costs.

In my view, these conclusions raise some interesting questions about the benefits of AI in aerospace applications versus the expected increase in safety certification costs. Will affordability become an issue, and will this limit the scope of deployment of AI applications in aerospace, at least initially?

If you want to share your views, you can reach me on LinkedIn.

About the author

Paul Parkinson is a Field Engineering Director for Aerospace & Defence for EMEA at Wind River.