What Is DevSecOps?

DevSecOps is a development approach that addresses today’s increasing security concerns within the development cycle. Cybersecurity is a never-ending race to manage risk to organizations, applications, data, and operations. Security organizations face pressure from two forces:

- The speed at which businesses must innovate, build, and release solutions has increased sharply as market pressures have driven organizations to adopt DevOps methodologies that streamline their entire development and release cycles.

- The sophistication and volume of cyberthreats have risen dramatically, with devastating consequences from data breaches, ransomware, countless strains of malware, and other threats.

Security teams are rethinking their traditional risk management approaches and creating dynamic, automated ways of integrating security testing and validation into the product lifecycle. DevSecOps has emerged as a fundamental strategy.

How Is It Different from DevOps?

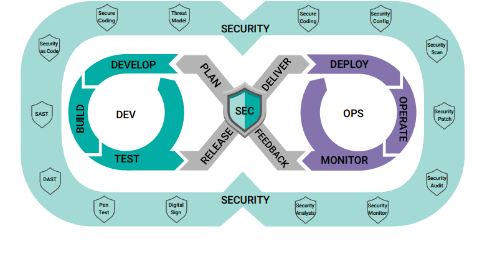

The DevSecOps process evolved from DevOps, which combined software development and operations into a unified process with a cyclical flow, automating tasks and bringing consistency and structure to code development. When it became apparent that security concerns could not be addressed as add-ons at later stages, the DevSecOps approach emerged, incorporating security from the earliest planning stage and carrying it through post-deployment.

The Changing Security Landscape

The security landscape is constantly changing, shaped by several factors:

- Sophistication of threats: The days of easily identified malware signatures are long gone. Malware is now polymorphic or even fully encrypted, enabling it to spread undetected across devices. Unmonitored internet-enabled hardware devices provide entry points for attackers and enable lateral movement. Sophisticated threat actors have access to considerable funding to develop highly damaging yet practically undetectable attacks.

- Volume of threats: Cyberattacks are big business. Countless individuals and organizations, including nation states, have an incentive to launch ransomware, steal identities, and breach data stores. Internet-connected devices are under constant siege by automated tools that scan for known vulnerabilities.

- Scarcity of cybersecurity resources: People with the full skill sets for cybersecurity and security engineering are comparatively rare. Organizations often struggle to retain staff and talent to adequately protect their resources. Developers and engineering teams in particular need to understand the implications of security and be empowered to design it into their products.

- DevOps methodologies: As the product lifecycle has shifted left to embrace DevOps, so have security efforts. Just a few years ago it was possible to run a product through security validation and then reengineer components as necessary. Now, both overarching security needs and the automation-first approach to product release require integrated security validation.

DevSecOps in the Embedded Systems World

Security teams face several challenges as they set up security engineering and DevSecOps integrations in their organizations, including changing team culture, avoiding hardware dependencies, and learning new toolsets and security development skills.

Culture

Traditional security efforts usually look more like audits than engineering efforts. Security teams have long used checklists and hard requirements to evaluate products against security standards. While this approach does give developers direction for security by design, it depends on security staff with hardware and embedded systems know-how, and such staff are difficult to retain.

An even bigger challenge is posed by the need to use automation to evaluate the security of embedded systems. This requires tool development, DevOps expertise, and, often, the ability to develop code. Team members need to be trained in the latest capabilities, and some may need additional programming expertise to keep up with their DevOps counterparts. When security teams can make this shift, however, the results are powerful.

Hardware Dependency

Most hardware vendors implement security capabilities that should be leveraged by developers and tested by security teams, but they are often proprietary and unique to each platform. Secure Boot, for example, exists in many different implementations, such as Intel® TXT/tboot and U-Boot. It is challenging to create automation that handles their differences.

Security Patterns

As security becomes foundational to embedded systems development, crucial best practices include developing chain-of-trust boot loading that uses signature validation and signed certificates, disabling JTAG in production of embedded systems to eliminate backdoors, securing data storage, and protecting certificates and encryption keys to prevent image and connectivity spoofing.
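To make the chain-of-trust idea concrete, here is a minimal Python sketch. It is a simplification, not a boot loader: instead of verifying an asymmetric signature (for example, an RSA or ECDSA signature as specified in FIPS 186-4), each stage simply pins the SHA-256 digest of the next stage, and the root digest is assumed to live in immutable storage such as boot ROM or OTP fuses. The names `BootStage`, `build_chain`, and `verify_chain` are illustrative only.

```python
# Simplified chain-of-trust sketch: hash pinning stands in for signature checks.
# Assumption: the root digest lives in immutable storage (e.g. boot ROM or OTP fuses).
import hashlib
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class BootStage:
    name: str
    image: bytes
    next_digest: Optional[str]  # expected digest of the next stage; None for the last stage


def stage_digest(stage: BootStage) -> str:
    """Digest over the image AND its embedded pointer to the next stage."""
    h = hashlib.sha256(stage.image)
    h.update((stage.next_digest or "").encode())  # tampering with the pointer is caught too
    return h.hexdigest()


def build_chain(images: List[Tuple[str, bytes]]) -> Tuple[str, List[BootStage]]:
    """Build stages back to front so each one embeds its successor's digest."""
    stages: List[BootStage] = []
    next_digest: Optional[str] = None
    for name, image in reversed(images):
        stage = BootStage(name, image, next_digest)
        stages.insert(0, stage)
        next_digest = stage_digest(stage)
    if next_digest is None:
        raise ValueError("at least one boot stage is required")
    return next_digest, stages  # next_digest is now the root digest


def verify_chain(root_digest: str, stages: List[BootStage]) -> List[str]:
    """Verify each stage before 'handing off' to it; stop at the first mismatch."""
    expected: Optional[str] = root_digest
    verified: List[str] = []
    for stage in stages:
        if expected is None or stage_digest(stage) != expected:
            raise ValueError(f"chain of trust broken at stage {stage.name!r}")
        verified.append(stage.name)
        expected = stage.next_digest
    return verified


if __name__ == "__main__":
    root, chain = build_chain([("bootloader", b"BL v1"), ("kernel", b"kernel v1"), ("rootfs", b"rootfs v1")])
    print(verify_chain(root, chain))  # ['bootloader', 'kernel', 'rootfs']
    chain[1].image = b"kernel v1 + implant"
    try:
        verify_chain(root, chain)
    except ValueError as err:
        print(err)  # chain of trust broken at stage 'kernel'
```

The digest deliberately covers each stage's embedded pointer as well as its image. Otherwise an attacker could swap both a later stage and the pointer to it without the earlier stage noticing.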

Fully on-premises, cloud-based, and hybrid configurations must all be supported by DevSecOps. Specifications and best practices related to a DevSecOps environment include:

- DoD Enterprise DevSecOps Reference Design

- DoD Cloud Computing Security Requirements Guide

- DoD Container Hardening Guide

- DoD/DISA Container Image Creation and Deployment Guide

- Guidelines for the Selection, Configuration, and Use of Transport Layer Security (TLS) Implementations (NIST 800-52)

- Protecting Controlled Unclassified Information in Nonfederal Systems and Organizations (NIST 800-171)

- Zero Trust Architecture (NIST 800-207)

- Application Container Security Guide (NIST 800-190)

- Guide to Computer Security Log Management (NIST 800-92)

- Privileged Account Management for the Financial Services Sector (NIST 1800-18A)

- Security and Privacy Controls for Information Systems and Organizations (NIST 800-53)

- “Use of Public Standards for Secure Information Sharing,” Committee on National Security Systems Policy, CNSSP 15

- Digital Signature Standard (FIPS 186-4)
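Several of these documents turn directly into configuration. As one example, the minimal Python sketch below builds a client-side TLS context in the spirit of NIST SP 800-52. It assumes that TLS 1.2 is the lowest acceptable protocol version (Rev. 2 of the guideline sets that floor) and that the platform's default trust store is acceptable. A real deployment would also need to review cipher suites and certificate policy against the full guideline. Only standard `ssl` module calls are used; the function name is illustrative.

```python
import ssl


def make_client_context() -> ssl.SSLContext:
    """Client TLS context loosely following NIST SP 800-52 (assumed policy: TLS 1.2 minimum)."""
    ctx = ssl.create_default_context(ssl.Purpose.SERVER_AUTH)  # loads the system trust store
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2  # refuse SSL 3.0, TLS 1.0, and TLS 1.1
    ctx.check_hostname = True                     # already the default; stated explicitly
    ctx.verify_mode = ssl.CERT_REQUIRED           # reject servers without a valid certificate
    return ctx
```

A pipeline step can then open connections with `ctx.wrap_socket(sock, server_hostname=host)`. An automated check can assert these settings so that a configuration regression fails the build instead of reaching production.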

How Can Wind River Help?

The Wind River DevSecOps Environment

Wind River® has more experience in agile development in the embedded systems world than any other organization. We pioneered the process for the development of our own products. Our Professional Services team can help your organization take the leap to DevSecOps with best practices for making the most effective use of our cutting-edge development tools.

Security by Design

Strong security begins before a single line of code is written. It starts with design, ensuring that best-practice security principles are being implemented as early as possible. This is especially important for automated security in a DevSecOps world, because these security principles will inform the automation and vulnerability measurements that should be implemented by CI/CD pipelines.

Wind River has partnered with many hardware vendors, including Intel, NXP, and Xilinx/AMD, to enable developers to take advantage of security capabilities and best practices.

A variety of Wind River products support teams embracing DevSecOps practices.

Security Through Design

All too often, security is treated as, at best, a single phase of the product lifecycle. Strong security requirements may be established early but not evaluated until late in the development process. Or a security audit is performed just before release, at which point fixes are prohibitively expensive and get deferred to a future release.

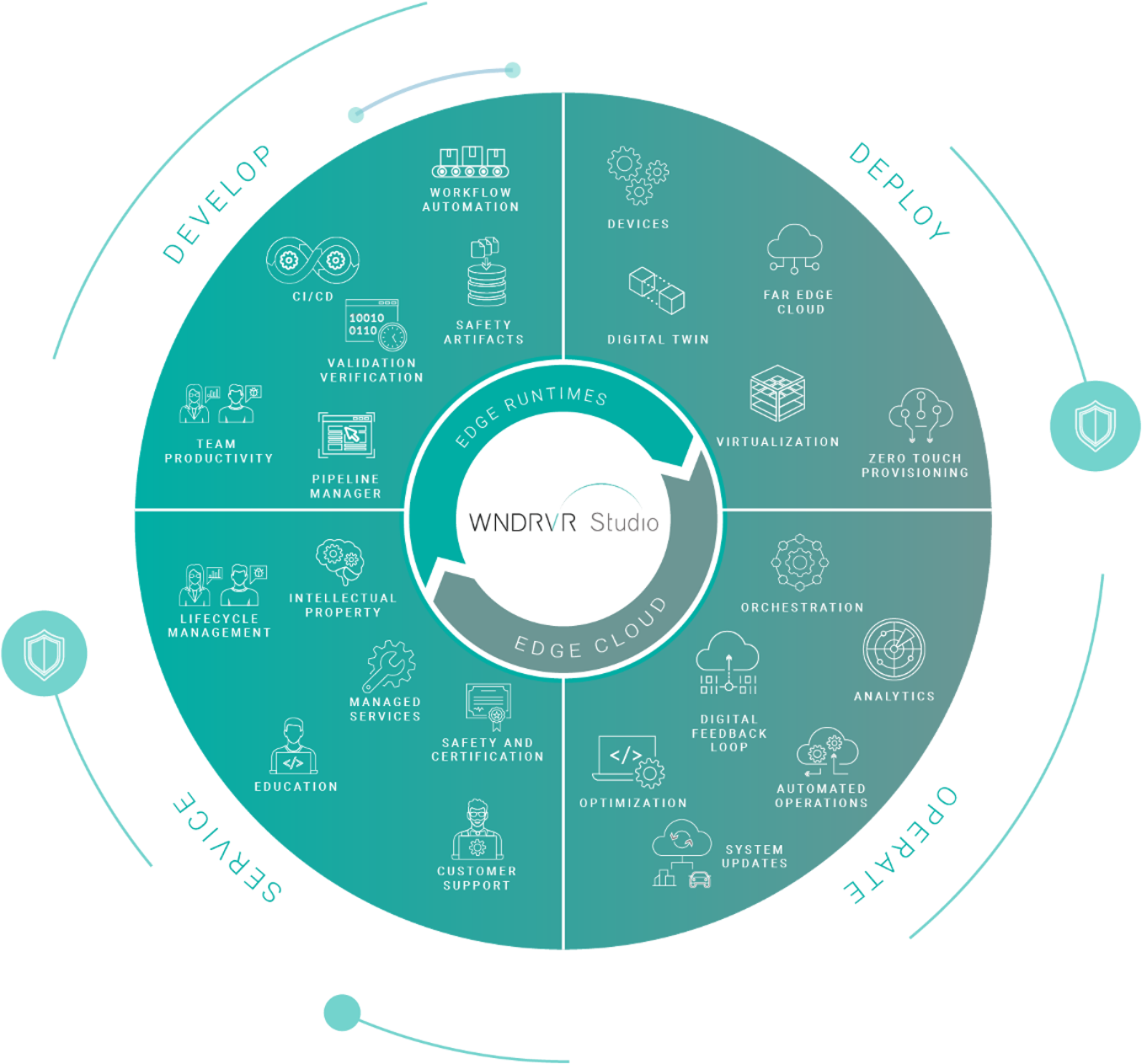

The promise of DevSecOps is to measure security throughout the design-and-release cycle. By leveraging platforms such as Wind River Studio, teams can deliver on this promise. Studio provides a holistic platform for developing, deploying, operating, and servicing edge systems. Once systems are managed in this way, security automation across the lifecycle becomes possible.

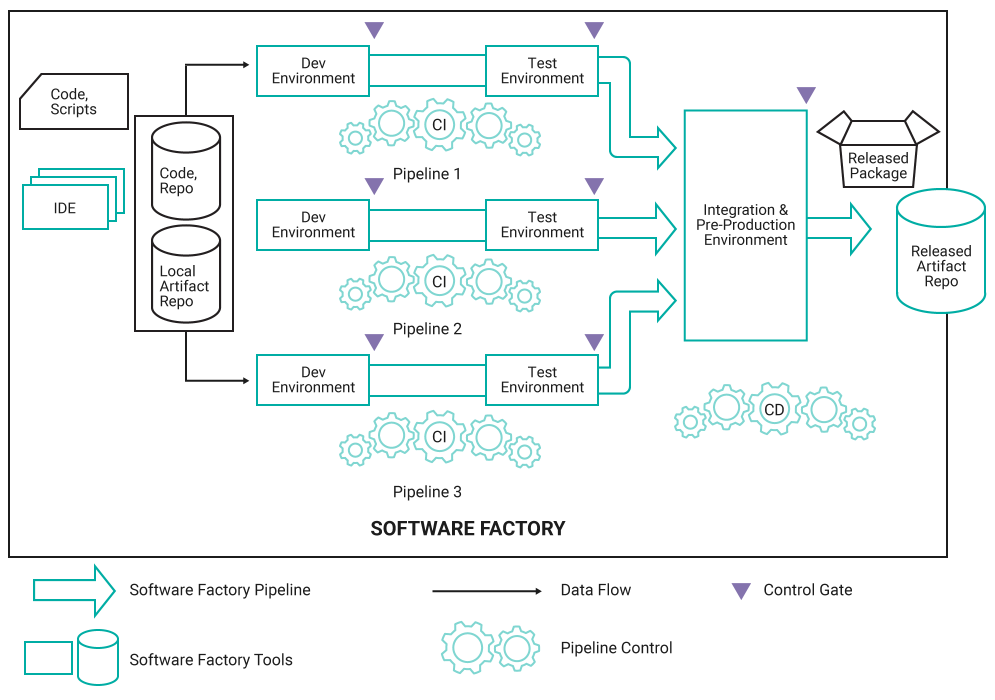

A typical DevSecOps environment is represented in Figure 2.

This diagram highlights several assets of the DevSecOps environment that need to be secured, including:

- The repositories
  - Code, local artifact, and released artifact repositories

- The software components
  - IDE, repos, development, and test components

- The build tools themselves
  - Compilers and linkers

- The connectivity between the components

- The configuration of each component

- The storage elements of the components, for both on-premises and cloud-based environments

- The event logs of all components
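One common way to protect event logs is to make them tamper-evident by hash chaining: each entry records the hash of the previous entry, so editing or reordering any past event breaks every later link. The minimal Python sketch below illustrates the technique. It assumes events are JSON-serializable dictionaries, and the field names are hypothetical. Hash chaining alone does not stop an attacker who can rewrite the entire log, or who deletes only the newest entries. For that reason, the latest hash would also need to be anchored somewhere the attacker cannot reach, such as a separate log server or a signature made with an HSM-held key.

```python
import hashlib
import json
from typing import Any, Dict, List

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _entry_hash(prev_hash: str, event: Dict[str, Any]) -> str:
    # Canonical JSON (sorted keys, fixed separators) so an event always hashes the same way.
    payload = json.dumps(event, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256((prev_hash + payload).encode()).hexdigest()


def append_event(log: List[Dict[str, Any]], event: Dict[str, Any]) -> None:
    """Append an event, linking it to the hash of the previous entry."""
    prev = log[-1]["hash"] if log else GENESIS
    log.append({"event": event, "prev": prev, "hash": _entry_hash(prev, event)})


def verify_log(log: List[Dict[str, Any]]) -> int:
    """Return the index of the first inconsistent entry, or -1 if the chain is intact."""
    prev = GENESIS
    for i, entry in enumerate(log):
        if entry["prev"] != prev or entry["hash"] != _entry_hash(prev, entry["event"]):
            return i
        prev = entry["hash"]
    return -1
```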

One asset not shown is that of the hardware security module (HSM). The HSM is a physical computing device that safeguards and manages digital keys and performs encryption and decryption functions for digital signatures, strong authentication, and other cryptographic functions.

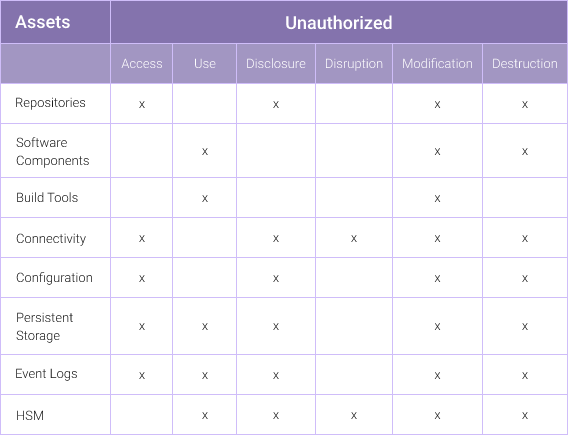

To identify the vulnerabilities, we can start with a definition: “The term information security means protecting information and information systems from unauthorized access, use, disclosure, disruption, modification, or destruction….” Each of these unauthorized events represents a potential attack on the DevSecOps system.

Figure 3 identifies the assets that are vulnerable during each unauthorized event. This list is foundational for determining the security implementations required to protect each asset.

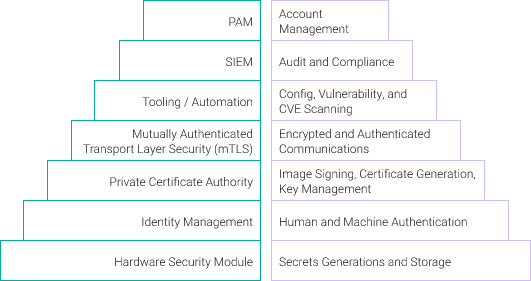

Multiple technologies are brought together to secure a DevSecOps environment. The capabilities of each are shown in Figure 4.

Security and Compliance

Wind River offers automated security and compliance scanning designed especially for complex embedded software systems, to help development teams identify and prioritize high-risk vulnerabilities. This scanning service uses the Common Vulnerabilities and Exposures (CVE) database to identify risks and vulnerabilities in applications and open source packages.

Wind River provides expert guidance on CVE mitigation, including recommendations for backporting patches and for validating and testing them before they are applied. This helps keep your application current with security requirements while preserving stability and continuity.
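The general pattern behind CVE-based scanning in a CI/CD pipeline is to match a package inventory against vulnerability records, rank the hits by severity, and gate the build on a threshold. The minimal Python sketch below shows that pattern. It does not represent Wind River's scanning service. The inventory, the record format, and the `CVE-XXXX-...` identifiers are hypothetical, and versions are assumed to be simple dotted integers. The default threshold of 7.0 matches the lower bound of the "High" band in the CVSS v3 severity scale.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class CveRecord:
    cve_id: str
    package: str
    fixed_in: str  # first version that contains the fix
    cvss: float    # CVSS v3 base score, 0.0 to 10.0


def parse_version(version: str) -> Tuple[int, ...]:
    # Assumes simple dotted numeric versions such as "1.2.10".
    return tuple(int(part) for part in version.split("."))


def find_vulnerable(inventory: Dict[str, str], feed: List[CveRecord]) -> List[CveRecord]:
    """Return records whose package is installed at a version older than the fix."""
    hits = [
        rec for rec in feed
        if rec.package in inventory
        and parse_version(inventory[rec.package]) < parse_version(rec.fixed_in)
    ]
    return sorted(hits, key=lambda rec: rec.cvss, reverse=True)  # highest risk first


def gate(findings: List[CveRecord], threshold: float = 7.0) -> bool:
    """True if the build may proceed: no finding at or above the threshold."""
    return all(f.cvss < threshold for f in findings)
```

In a pipeline, the job would exit with a nonzero status when `gate()` returns `False`. The sorted findings list then becomes the triage queue for the kind of backport-or-upgrade decisions described above.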

DevSecOps with Wind River Products

Wind River Studio

Studio delivers a complete lifecycle management platform that enables development teams to accelerate building, testing, and deploying on the edge. Studio supports full cloud-native platforms as well as end-to-end visibility of development states across CI/CD workflows. By empowering DevOps teams to achieve high levels of automation, Studio creates real opportunities for organizations to implement security automation. Studio supports automation triggering and digital feedback loops that not only raise the bar for development automation but support security integrations as well.

Studio also enables development and security teams to collaborate through a single-pane-of-glass interface, ensuring that security validation is never lost along the product development lifecycle. Artifacts can be captured within Studio and preserved for archival purposes and reuse.

RTOS

DevSecOps automation relies on adequate tooling. The Studio industry-leading real-time operating system (RTOS), powered by VxWorks®, offers a platform for instrumentation, along with native support for third-party security tools. VxWorks supports DevOps pipeline development and CI/CD models through Studio.

VxWorks also provides built-in security capabilities, such as cryptographic services and access controls, whose use can be evaluated through automation. This helps ensure that developers are using these security capabilities to their fullest.

Linux OS

The Studio Linux operating system, powered by Wind River Linux, offers DevSecOps engineers the power of open source and a common Linux platform to implement security automation. It supports application containerization and isolation, enabling security teams to create security validation on a more granular level. It also provides strong access controls and separation of duties that can be measured through automated assessment.
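The passage above notes that access controls can be measured through automated assessment. A simple instance of such an assessment is a permissions audit of a Linux root filesystem image. The minimal Python sketch below flags world-writable files and directories, exempting sticky-bit directories such as `/tmp`, and also flags set-UID/set-GID regular files. The function name and the two finding labels are illustrative. A real policy would compare findings against an allowlist of expected entries (for example, `passwd` is normally set-UID).

```python
import os
import stat
from typing import List, Tuple


def audit_permissions(root: str) -> List[Tuple[str, str]]:
    """Walk `root` and report (path, issue) pairs for risky permission bits."""
    findings: List[Tuple[str, str]] = []
    for dirpath, dirnames, filenames in os.walk(root):
        for name in dirnames + filenames:
            path = os.path.join(dirpath, name)
            try:
                mode = os.lstat(path).st_mode  # lstat: do not follow symlinks
            except OSError:
                continue  # vanished or unreadable; skip it
            if stat.S_ISLNK(mode):
                continue  # symlink permission bits are not meaningful on Linux
            sticky_dir = stat.S_ISDIR(mode) and bool(mode & stat.S_ISVTX)
            if mode & stat.S_IWOTH and not sticky_dir:
                findings.append((path, "world-writable"))
            if stat.S_ISREG(mode) and mode & (stat.S_ISUID | stat.S_ISGID):
                findings.append((path, "setuid/setgid"))
    return sorted(findings)
```

Running a check like this against every build of the root filesystem turns a checklist item into a regression test, which is the kind of shift the DevSecOps approach calls for.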

Like VxWorks, Wind River Linux supports DevOps pipeline development and CI/CD models through Studio. By developing on an end-to-end platform, DevSecOps teams can perform integrity monitoring across the product lifecycle, from prototype to production.
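Integrity monitoring usually comes down to recording trusted digests of a system's files at a known-good point and then comparing later snapshots against that baseline. The minimal Python sketch below illustrates the idea. It assumes the baseline itself is stored somewhere the monitored system cannot modify. The function names are illustrative and do not correspond to any Wind River Linux tool.

```python
import hashlib
import os
from typing import Dict, List


def snapshot(root: str) -> Dict[str, str]:
    """Map each regular file under `root` (by relative path) to its SHA-256 digest."""
    digests: Dict[str, str] = {}
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            if os.path.islink(path) or not os.path.isfile(path):
                continue  # skip symlinks, sockets, device nodes, etc.
            h = hashlib.sha256()
            with open(path, "rb") as f:
                for chunk in iter(lambda: f.read(65536), b""):  # stream large files
                    h.update(chunk)
            digests[os.path.relpath(path, root)] = h.hexdigest()
    return digests


def diff_snapshots(baseline: Dict[str, str], current: Dict[str, str]) -> Dict[str, List[str]]:
    """Classify differences between a trusted baseline and a later snapshot."""
    return {
        "added": sorted(current.keys() - baseline.keys()),
        "removed": sorted(baseline.keys() - current.keys()),
        "modified": sorted(p for p in baseline.keys() & current.keys() if baseline[p] != current[p]),
    }
```

The same comparison can run at every lifecycle stage, from the prototype image in CI to a fielded device, so drift is reported with the same vocabulary everywhere.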

Simulation

Hardware dependencies, as noted above, can be a challenge to teams that want to implement DevSecOps practices. The Studio full-system simulator, powered by Wind River Simics®, eliminates this dependency. Simics can replicate the functionality of many kinds of hardware and operating systems, allowing security teams to develop automated security testing and validation more easily.

For example, teams using Simics can show how a piece of software will respond to different types of security threats. Once your developers have created a model of a system in Simics, they can simulate many different security scenarios, such as data breaches or malware attacks. Development teams don’t have to spend time and money setting up physical development labs, and security teams get an advance look at how the hardware deployment will react under threat. The result is higher-quality code that’s easier to protect, because it has already been tested under many different scenarios.
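Scenario-driven security testing against a simulated target generally follows one pattern: start from a known-good input, apply a set of hostile mutations, and check that the target accepts or rejects each one as expected. The minimal Python sketch below shows that pattern for a firmware update. Simics has its own scripting and automation interfaces, which are not shown here. Instead, a plain Python function (`device_accepts`) stands in for the simulated target, and the package format, the partition size, and all names are hypothetical.

```python
import hashlib
from dataclasses import dataclass
from typing import Callable, Dict, Tuple

MAX_IMAGE_BYTES = 1024  # hypothetical size of the target's update partition


@dataclass(frozen=True)
class UpdatePackage:
    payload: bytes
    declared_len: int
    digest: str  # SHA-256 of the payload, shipped alongside it


def device_accepts(pkg: UpdatePackage) -> bool:
    """Stand-in for the simulated target's update handler (hypothetical model)."""
    if pkg.declared_len != len(pkg.payload) or len(pkg.payload) > MAX_IMAGE_BYTES:
        return False
    return hashlib.sha256(pkg.payload).hexdigest() == pkg.digest


def make_valid(payload: bytes) -> UpdatePackage:
    return UpdatePackage(payload, len(payload), hashlib.sha256(payload).hexdigest())


def flip_bit(pkg: UpdatePackage) -> UpdatePackage:
    data = bytearray(pkg.payload)
    data[0] ^= 0x01  # corrupt one bit but keep the original digest
    return UpdatePackage(bytes(data), pkg.declared_len, pkg.digest)


def truncate(pkg: UpdatePackage) -> UpdatePackage:
    return UpdatePackage(pkg.payload[:-1], pkg.declared_len, pkg.digest)


def oversize(pkg: UpdatePackage) -> UpdatePackage:
    # Correctly hashed, but larger than the partition can hold (assumes a non-empty payload).
    return make_valid(pkg.payload * (MAX_IMAGE_BYTES // len(pkg.payload) + 1))


# Scenario name -> (mutation applied to a good package, whether the device should accept it)
SCENARIOS: Dict[str, Tuple[Callable[[UpdatePackage], UpdatePackage], bool]] = {
    "valid image": (lambda p: p, True),
    "bit-flipped payload": (flip_bit, False),
    "truncated payload": (truncate, False),
    "oversized image": (oversize, False),
}


def run_scenarios(good: UpdatePackage) -> Dict[str, bool]:
    """Return scenario -> True if the device behaved as expected."""
    return {
        name: device_accepts(mutate(good)) == should_accept
        for name, (mutate, should_accept) in SCENARIOS.items()
    }


if __name__ == "__main__":
    for name, passed in run_scenarios(make_valid(b"firmware v2")).items():
        print(f"{'PASS' if passed else 'FAIL'}  {name}")
```

Because the scenarios are data, adding a new threat case means adding one entry to `SCENARIOS` rather than building a new lab setup.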

What Is Virtualization?

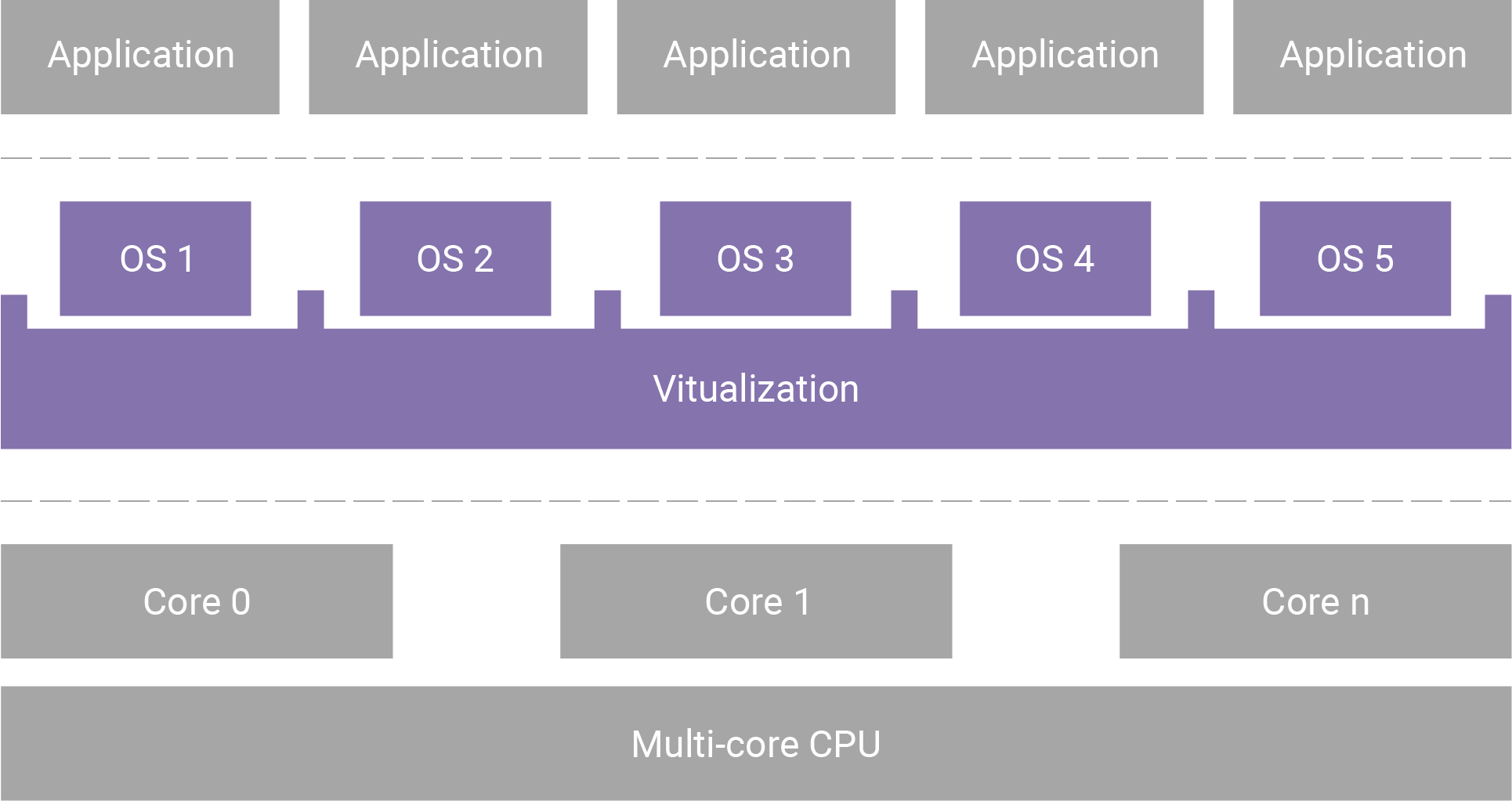

Virtualization in systems development is the creation of software that simulates hardware functionality. The result is a virtual system that is more economical and flexible than total reliance on hardware systems.

Virtualization is enabled by hypervisors, which sit between the virtual and physical systems and allow the creation of multiple virtual machines (VMs) on a single piece of hardware. Each VM emulates an actual computer and can therefore execute a different operating system. In other words, one computer or server can host multiple operating systems.

Because of its versatility and efficiency, virtualization has gained significant momentum in recent years. What’s more, many of today’s industrial control systems were installed 30 years ago and are now outdated. While that legacy infrastructure provided a stable platform for control systems for many years, it lacks flexibility, requires costly manual maintenance, and does not allow valuable system data to be easily accessed and analyzed.

Virtualization overcomes the limitations of legacy control systems infrastructure and provides the foundation for the Industrial Internet of Things (IIoT). Control functions that were previously deployed across the network as dedicated hardware appliances can be virtualized and consolidated onto commercial off-the-shelf (COTS) servers. This leverages the most advanced silicon technology and also reduces capital expenditure, lowers operating costs, and maximizes efficiency for a variety of industrial sectors, including energy, healthcare, and manufacturing.

For developers of embedded systems, virtualization brings new capabilities and possibilities. It allows system architects to overcome hardware limitations and use multiple operating systems to design their devices, while bringing high application availability and exceptional scalability.

Changes in Embedded Systems

Drivers of change in embedded systems design include improvements in hardware as well as the continuing evolution of software development methods. At the hardware level, it’s now possible to do more with a single CPU. Rather than host just one application, new multi-core systems-on-chip (SoCs) can support multiple applications on a single hardware platform, even while maintaining modest power and cost requirements. At the same time, advances in software development techniques are producing systems that are more software-defined and fluid than their predecessors.

The more things change, the more they stay the same. The core requirements for embedded systems are not going away:

- Security: Cyberattacks have become more common, while completely isolated systems are becoming rarer. Embedded engineers are taking security more seriously than ever before.

- Safety: The system must be able to ensure that it does not have an adverse effect on its environment, whatever that might be. Systems in the industrial, transportation, aerospace, automotive, and medical sectors can cause death or environmental disaster if their embedded systems malfunction. Because of this, determinism — predictability and reliability of performance — is of paramount importance. A failure in one zone should not trigger a failure of the entire system.

- Reliability: An embedded system must always perform as expected. It should produce the same outcome, in the same time frame, whether it is activated for the first or the millionth time. “Not enough” or “too late” are not options in systems that cannot fail.

- Certifiability: The certification process is a critical and costly part of development for many embedded systems. Certification in legacy systems must be maintained and leveraged, while ease of certification for future systems must be ensured.

Bridging Embedded and Cloud-Native Technologies

Right now, many manufacturers are facing end-of-life for their legacy embedded systems. These decades-old systems may be insecure, unsafe, or unable to meet current certification requirements. They need to be upgraded or replaced, which is expensive — and sometimes not even possible.

At the same time, the workforce is changing. The engineers who built the original designs are retiring, and the new workforce has been schooled in different approaches.

Arguably, an even bigger problem is the demand for shorter development cycles. While it may once have been viable to take a year or more to create a fixed-function embedded system on a distinct piece of hardware, today’s economy demands a quicker time-to-market.

Nonetheless, many legacy embedded systems are here for the long term: 35–45 years is not an uncommon lifecycle for many industrial systems. They may not be modern, but the machines they run were built to last. Industrial control systems, for example, can have multi-decade lives, even if their digital components are hopelessly out of date.

Advantages of Virtualization in Embedded Systems

Fortunately, advances in hardware and virtualization have occurred even as challenges have beset the world of embedded systems. It is now possible to overcome most of the difficulties inherent in having separate, purpose-built embedded systems running on separate proprietary hardware. This is achieved by consolidating each separate embedded system, with its application and operating system, into its own virtual machine, all on a single platform and hardware architecture.

As depicted in Figure 1, virtualization can place multiple embedded systems, each running its own OS, on one multi-core silicon hardware system. Advances in silicon design, processing power, and virtualization technology make this possible. The same silicon can host more than one version of Linux along with multiple RTOSes and other common legacy OSes.

Figure 1. Reference architecture for multiple embedded systems running on a single processor using virtualization
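Consolidating several systems onto one multi-core processor raises a practical configuration question: does each virtual machine get its own cores and memory without collisions? The minimal Python sketch below checks a static partition layout for that. It assumes a hypothetical layout format in which each VM is pinned to dedicated physical cores, a common way to keep a real-time guest deterministic, and reserves a fixed amount of RAM. It is not the configuration format of Helix Platform or any other hypervisor.

```python
from dataclasses import dataclass
from typing import Dict, FrozenSet, List


@dataclass(frozen=True)
class Partition:
    name: str
    guest_os: str          # label only; not used by the checks
    cores: FrozenSet[int]  # physical cores pinned to this VM
    memory_mb: int         # RAM reserved for this VM


def validate_partitions(partitions: List[Partition], total_cores: int, total_memory_mb: int) -> List[str]:
    """Return a list of problems; an empty list means the layout is consistent."""
    errors: List[str] = []
    owner: Dict[int, str] = {}  # core -> name of the partition that claimed it first
    for p in partitions:
        for core in sorted(p.cores):
            if not 0 <= core < total_cores:
                errors.append(f"{p.name}: core {core} does not exist")
            elif core in owner:
                errors.append(f"{p.name}: core {core} already assigned to {owner[core]}")
            else:
                owner[core] = p.name
    used = sum(p.memory_mb for p in partitions)
    if used > total_memory_mb:
        errors.append(f"memory over-committed: {used} MB > {total_memory_mb} MB")
    return errors
```

For example, a hypothetical four-core board could host an RTOS control partition on core 0, a Linux HMI on cores 1 and 2, and a legacy OS on core 3. Any later change that double-books a core would then be reported before the image is ever built.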

Virtualization succeeds in abstracting the embedded system application and its OS from the underlying hardware. As a result, it becomes possible to overcome many of the most serious challenges arising from legacy embedded systems. The advantages for engineers include:

- A significant increase in scalability and extensibility

- Support for open frameworks and reuse of IP across devices

- The ability to build solutions on open, standardized hardware that offers more powerful processing capabilities

- Simplification of design and accompanying acceleration of time-to-market

- Application consolidation within the device, which reduces the hardware footprint and costs related to the bill of materials (BOM)

- A gradual learning curve, given a familiar OS and programming languages that can be deployed in the virtualized system

- The ability to run multiple OSes and applications side by side

- Isolation of each operating system and application instance, providing additional security and allowing safety-certified operating environments to run alongside “unsafe” (noncertified) applications

- Easier upgrades via new methodologies such as DevOps, which make it simpler to add new features quickly

- Faster response to security threats

How Can Wind River Help?

Wind River Studio

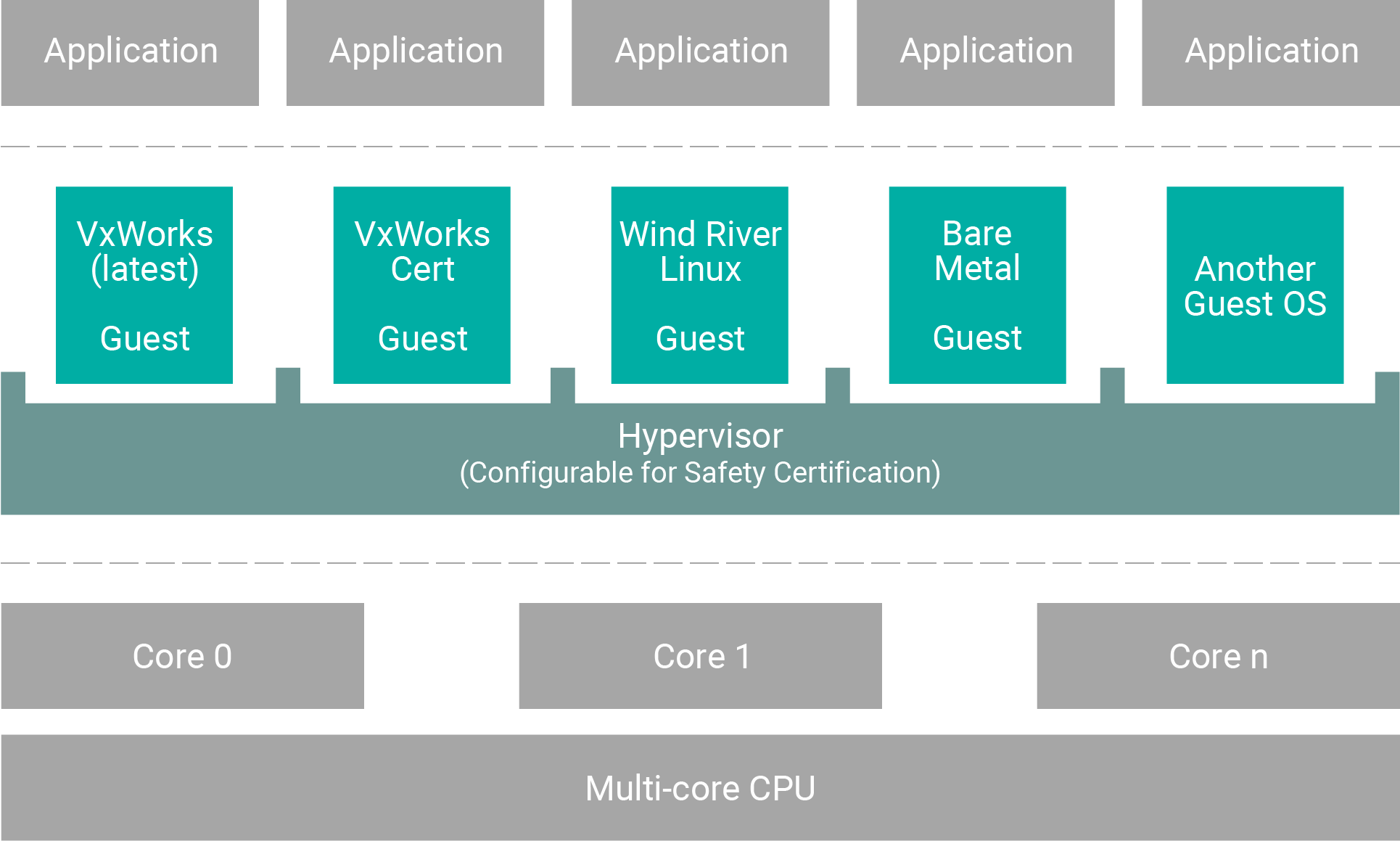

Wind River® Studio is the first cloud-native platform for the development, deployment, operations, and servicing of mission-critical intelligent edge systems that require security, safety, and reliability. It is architected to deliver digital scale across the full lifecycle through a single pane of glass to accelerate transformative business outcomes. Studio includes a virtualization platform that hosts multiple operating systems, as well as simulation and digital twin capabilities for the development and deployment process.

Figure 2. Helix Platform architecture

Virtualized OS Platform

Studio includes a virtualization solution powered by Wind River Helix™ Virtualization Platform, a safety certifiable, multi-core, multi-tenant platform for mixed levels of criticality. It consolidates multi-OS and mixed-criticality applications onto a single edge compute software platform, simplifying, securing, and future-proofing designs in the aerospace, defense, industrial, automotive, and medical markets. It delivers a proven, trusted environment that enables adoption of new software practices with a solid yet flexible foundation of known and reliable technologies on which the latest innovations can be built.

As part of Studio, Helix Platform enables intellectual property and security separation between the platform supplier, the application supplier, and the system integrator. This separation provides a framework for multiple suppliers to deliver components to a safety-critical platform.

The platform provides various options for critical infrastructure development needs, from highly dynamic environments without certification requirements to highly regulated, static applications such as avionics and industrial. It is also designed for systems requiring the mixing of safety-certified applications with noncertified ones, such as automotive. Helix Platform gives you flexibility of choice for your requirements today and adaptability for your requirements in the future.

Simulation/Digital Twin

Studio’s cloud-native simulation platforms allow you to create digital twins of highly complex real-world systems for automated testing and debugging. Digital twins allow teams to move faster and improve quality, easily bringing agile and DevOps software practices to embedded development.

The Cloud-Connected Future: An Interview with Nicolas Chaillan

Nicolas Chaillan, the first chief software officer for the U.S. Air Force and Space Force, breaks down how the U.S. aerospace and defense (A&D) community learned about agility, rapid prototyping, and innovation in the software-defined world. Chaillan, who brought DevSecOps to the DoD, explains a framework for cybersecurity, machine learning, and AI at the device level.

Chaillan is a technology entrepreneur, software developer, cyber expert, and inventor. He has nearly two decades of domestic and international experience in cybersecurity, software development, product innovation, governance, risk management, and compliance. Join his discussion with Wind River® Senior Director for Product Management Michel Chabroux about best practices and real-world examples.

Customer Success - Clarion

Wind River Helps Clarion Deliver Innovation Fast

Clarion Malaysia leveraged Android for its next-gen IVI system.

Customer Success Story

The Objective

Clarion Malaysia needed to integrate its in-vehicle infotainment (IVI) system with the Internet of Things for a rich in-car experience that was safe, simple, and controlled. It needed to build its customized AX1 solution on the Android platform and make it work seamlessly with all the devices and services consumers expect today.

“To get to market quickly and control our costs, we needed a strong partner with expertise in the automotive industry and with Android. We knew we could not do this project ourselves.”

—T.K. Tan, Managing Director, Clarion Malaysia

How Wind River Helped

Wind River® provided the software integration and project management skills that helped Clarion create a ground-breaking solution that combines an A/V navigation system, a streaming entertainment platform, and Internet access on a customized Android 2.3.7 platform. The Wind River Automotive Solutions team helped anticipate, isolate, and correct problems before they impacted the project.

The Results

Working closely with the Wind River Automotive Solutions team, Clarion was able to deliver the innovative, multi-featured AX1 in-vehicle entertainment platform on time and on budget. In addition to GPS navigation, hands-free calling, and microSD and USB ports, the AX1 enables users to buy, download, and sync music from an online store.
